On Cloud Nine

Large industrial plants and their need for higher accuracy are increasing the computational intensity of simulation in process industries. But on most systems, with added complexity come sacrifices in time and ultimately money. So when F L Smidth's multiphase flow model for its new flash dryer took about five days to run on local infrastructure, the company decided to look to the cloud.

F L Smidth supplies complete plants, equipment and services to the global minerals and cement industry. The most recent addition to the company's offerings is a flash dryer designed for a phosphate processing plant in Morocco. The dryer takes a wet filter cake and produces a dry product suitable for transport to markets around the world.

The company was interested in reducing the solution time and, if possible, increasing mesh size to improve the accuracy of their simulation results without investing in a computing cluster that would be utilized only occasionally. So the company decided to participate in the Uber-Cloud Experiment, which was initiated by a consortium of 160 organizations and individuals for the purpose of overcoming roadblocks involved in remotely accessing technical computing resources in high-performance computing (HPC) centers and in the cloud.

The aim of the first round of the Uber-Cloud Experiment was to explore the end-to-end process of accessing remote HPC resources and to study and overcome the potential roadblocks. The project brought together four categories of participants: industry end-users, computing and storage resource providers, software providers such as ANSYS, and HPC experts. ANSYS was engaged with multiple teams, and this article is a summary report from one of them.

Simulating a Flash Dryer

The flash dryer procedure was designed by F L Smidth’s process department; the structural geometry was created by its mechanical department based on engineering calculations and previous experience.

Nonetheless, before investing large amounts of money and time to build the dryer, the company wanted to verify that the proposed design would deliver the required performance and to evaluate whether alternatives could be found that would cost less to build and operate.

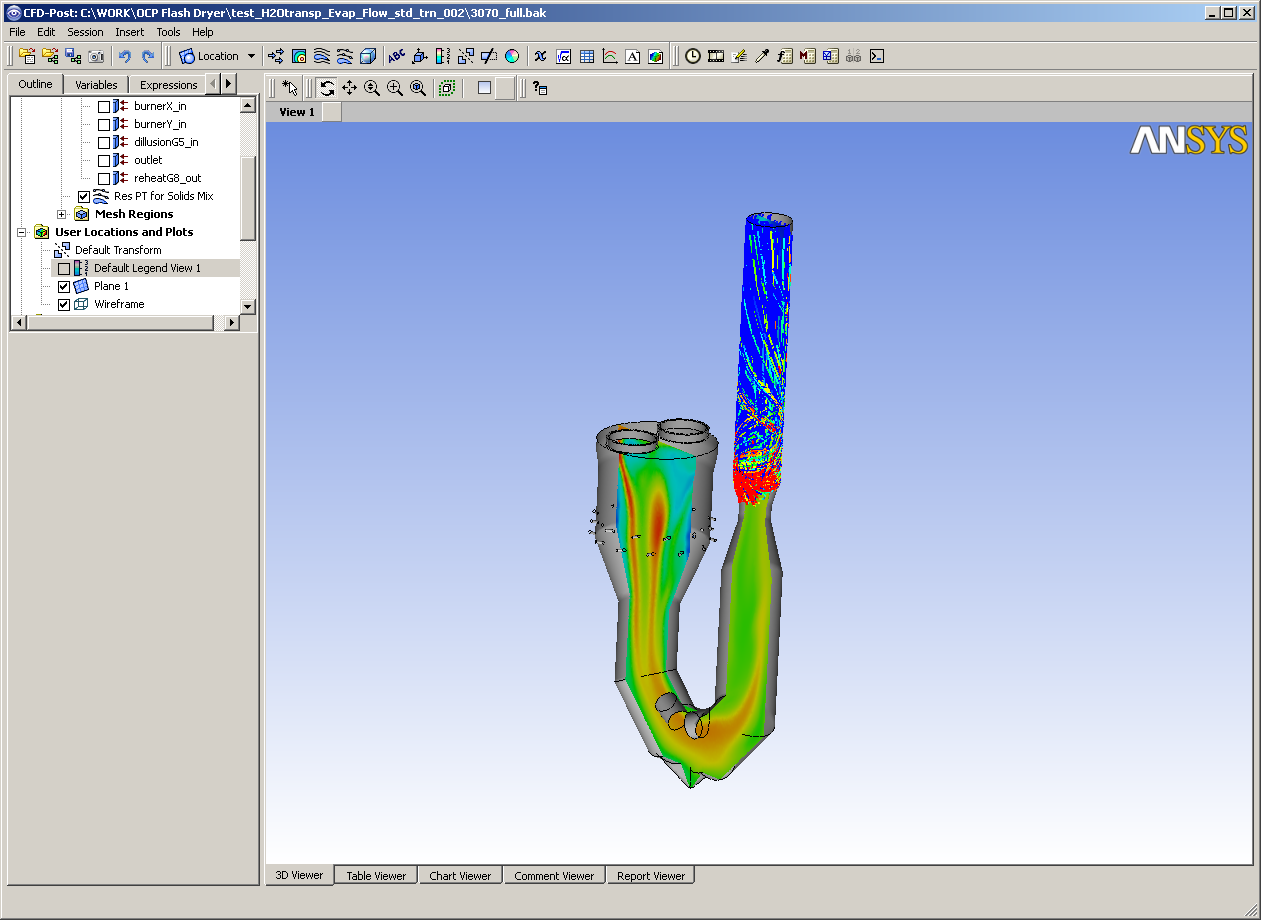

To evaluate the dryer before it was ever installed, F L Smidth used ANSYS computational fluid dynamics (CFD) tools in addition to extensive pilot testing. This approach helps identify designs that reduce construction and operating costs and reduces the risk of having to modify the dryer during the installation phase.

Accurately simulating the performance of a flash dryer requires modeling the flow of gas through the dryer, tracking the position of particles as they move through the dryer and calculating the moisture loss from the solid particles. For example, the multiphase flow model of the dryer used in the phosphate plant uses Lagrangian particle tracking to trace five different species in time steps of 1 millisecond for a total time of 2 seconds.
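To make the time-stepping concrete, here is a minimal sketch of Lagrangian tracking for a single particle, using the 1 millisecond step and 2 second total time quoted above. It is not the ANSYS CFX model: the uniform gas velocity, the drag relaxation time `tau`, the first-order drying rate and the `Particle` type are all hypothetical values and names chosen for illustration, and the five species are not represented.

```python
from dataclasses import dataclass

DT = 1e-3                       # time step from the article: 1 millisecond
T_TOTAL = 2.0                   # total simulated time from the article: 2 seconds
N_STEPS = round(T_TOTAL / DT)   # 2000 steps


@dataclass
class Particle:
    z: float         # height in the dryer (m)
    w: float         # vertical velocity (m/s)
    moisture: float  # water mass fraction (-)


def step(p, gas_w, tau, dry_rate, g=9.81, dt=DT):
    """Advance one particle by one time step (illustrative physics only)."""
    # Drag relaxes the particle velocity toward the gas velocity; gravity opposes it.
    accel = (gas_w - p.w) / tau - g
    w_new = p.w + accel * dt
    z_new = p.z + w_new * dt
    # Assumed first-order drying: moisture decays in proportion to what remains.
    m_new = max(0.0, p.moisture - dry_rate * p.moisture * dt)
    return Particle(z_new, w_new, m_new)


def track(p, gas_w=20.0, tau=0.05, dry_rate=1.5):
    """Follow one particle for the full simulated time."""
    for _ in range(N_STEPS):
        p = step(p, gas_w, tau, dry_rate)
    return p


final = track(Particle(z=0.0, w=0.0, moisture=0.2))
print(f"steps={N_STEPS} height={final.z:.1f} m moisture={final.moisture:.4f}")
```

A production model repeats a loop like this for very many particles while also solving the gas flow on the mesh, which is why the step count and mesh size drive the run time.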

Using a local machine with a 3.06 GHz Xeon processor with 24 GB RAM, the simulation required five days to solve. Naturally, companies like F L Smidth want to speed up time to implementation, but the hardware limitations of a local machine held back the simulation's accuracy as well.

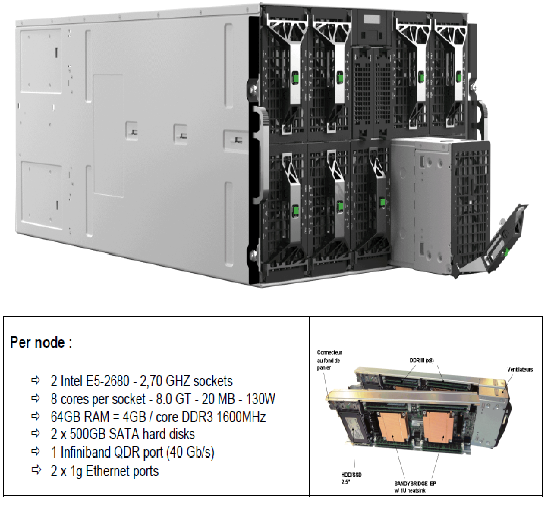

To address this, F L Smidth recently ran this same model on a cloud solution provided by Bull extreme factory (XF). The model ran on 128 Intel E5-2680 cores in the cloud in about 46.5 hours. Not only did the cloud solution demonstrate the potential to run models faster, and so to speed up sensitivity analysis, it also reduced the amount of internal resources that needed to be devoted to IT and infrastructure issues.

The XF resources used are hosted in the Bull data center at the company’s headquarters outside Paris. They feature Bull B510 blades with Intel Xeon E5-2680 sockets with eight cores, 64 GB RAM and 500 GB hard disks connected with InfiniBand quad data rate (QDR) serial links, which can be seen below.

Running Simulation in the Cloud

To bring the CFD solver to the cloud, the XF team integrated ANSYS CFX into its web user interface, which made it easy to transfer data and run the application. In total, the integration took around three man-days to set up, configure and execute, while F L Smidth engineers spent around two days on their own setup and use.

Because the ANSYS CFX solver was designed from the ground up for parallel efficiency, all numerically intensive tasks are performed in parallel and all physical models work in parallel. Only administrative tasks, such as simulation control and user interaction, as well as the input/output phases of a parallel run, were performed in sequential mode by the master process.
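The effect of a sequential portion on a parallel run can be illustrated with Amdahl's law. The sketch below is a generic textbook estimate, not a measurement of ANSYS CFX: the serial fraction of 2 percent is a hypothetical value picked only to show the trend, and `amdahl_speedup` is a name introduced here.

```python
def amdahl_speedup(serial_fraction, n_cores):
    """Ideal speedup on n_cores when serial_fraction of the work cannot be parallelized."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if n_cores < 1:
        raise ValueError("n_cores must be at least 1")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n_cores)


# Hypothetical serial fraction; the article does not report one.
ASSUMED_SERIAL_FRACTION = 0.02

for cores in (16, 64, 128, 256):
    estimate = amdahl_speedup(ASSUMED_SERIAL_FRACTION, cores)
    print(f"{cores:4d} cores: ideal speedup about {estimate:.1f}x")
```

Under this assumption the gain from each doubling of cores shrinks as the sequential work starts to dominate, which is why keeping master-process tasks small matters at high core counts.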

After a few early run failures due to hardware or software glitches, the model was successfully solved on 128 cores. The runtime of the successful job was about 46.5 hours, which met F L Smidth’s primary goal of running the job in one to two days.
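The wall-clock improvement implied by the reported figures can be checked with a few lines of arithmetic. This sketch uses only the two run times given in the article, treating "about five days" as exactly 120 hours, which is an approximation. It is not a parallel-efficiency figure, because the core count of the local machine is not stated.

```python
LOCAL_HOURS = 5 * 24   # "about five days" on the local machine, taken as 120 h
CLOUD_HOURS = 46.5     # reported run time on 128 cloud cores

speedup = LOCAL_HOURS / CLOUD_HOURS       # ratio of wall-clock times
hours_saved = LOCAL_HOURS - CLOUD_HOURS   # absolute time saved per run

print(f"wall-clock speedup: about {speedup:.2f}x")
print(f"time saved per run: about {hours_saved:.1f} hours")
```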

Within the first round of the Uber-Cloud Experiment, there unfortunately was not enough time to perform scalability tests with the ANSYS CFX solver. In future experiments, however, this could help determine how additional resources could be deployed to reduce run times even further.

Regardless, when looking at the quick turnaround between migrating the CFD solver to the cloud and reaping the benefits of more powerful infrastructure, Marc Levrier, HPC Cloud solution manager for XF, was confident that the future of simulation lies in the cloud. “This project demonstrates the feasibility of migrating powerful computer-aided engineering applications to the cloud,” said Levrier.

About the Authors

Sam Zakrzewski is a fluid dynamics specialist at F L Smidth A/S in Copenhagen, Denmark. Wim Slagter is the lead product manager at ANSYS, Inc.