Green Power Management Deep Dive

During Adaptive Computing's user conference, MoabCon 2013, which took place in Park City, Utah, in April, HPC Product Manager Gary Brown delivered an in-depth presentation on Moab's Auto Power Management capabilities. Moab is the policy-based intelligent scheduler inside Adaptive's family of workload management products.

The latest Moab HPC Suite Enterprise Edition (version 7.2.2) includes a variety of green policy settings to help cluster owners increase efficiency and reduce energy use and costs by 10-30 percent.

While this presentation is targeted at Moab users, many of its principles apply to cluster management in general. The talk is laid out in three parts: power management, green computing (also called auto power management) and future power management.

The core of Moab power management is tracking two node power states: on and off. "On" means the power resource manager has reported the node's power as on. "Off," however, does not necessarily mean the power is off: to Moab it simply means the node cannot be used, although admins have the option of cutting power completely.

To run a job, Moab requires that the node power state be on and that the workload resource manager (e.g., TORQUE or SLURM) be running and have reported node information.

Power management relies on system jobs, which are distinct from user jobs. A system job does not appear in a queue. Instead, it executes on the Moab head node (server), is usually script-based and usually runs asynchronously.

Brown remarks that on a large cluster, the system jobs may have to act on a large number of nodes at once. They may therefore need to be self-throttling to manage the initial demand spike, and may require a daemon process that maintains power state for reporting purposes.
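
Self-throttling could work by issuing power commands in batches, with a pause between batches. The following is a minimal Python sketch of that idea, Python being the language of Adaptive's reference scripts. `throttled_power_on`, `batch_size` and `pause_s` are hypothetical names, not Moab parameters. The `power_on` callback stands in for whatever site-specific power command is used.

```python
import time
from typing import Callable, Iterable, List


def throttled_power_on(nodes: Iterable[str],
                       power_on: Callable[[List[str]], None],
                       batch_size: int = 32,
                       pause_s: float = 5.0) -> int:
    """Power nodes on in fixed-size batches, pausing between batches.

    Returns the number of batches issued. The batch size and pause are
    illustrative tuning knobs, not Moab settings.
    """
    pending = list(nodes)
    batches = 0
    for start in range(0, len(pending), batch_size):
        batch = pending[start:start + batch_size]
        power_on(batch)  # one site-specific power command per batch
        batches += 1
        if start + batch_size < len(pending):
            time.sleep(pause_s)  # let the power draw settle before the next batch
    return batches
```

For example, 100 nodes with a batch size of 32 go out as four batches (32, 32, 32 and 4 nodes). This spreads the inrush current over time rather than switching everything at once.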

There are two system jobs used for power management. The first is defined by the ClusterQueryURL parameter, which Moab uses to query the power state of all nodes in the cluster. Moab runs it at the start of each scheduling cycle. This synchronous operation reads every node's power state and passes it to Moab. It blocks Moab from scheduling until all node power states are reported or the operation times out.
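
The "report everything or time out" behavior can be sketched as a parallel query with a single overall deadline. This is a minimal sketch under assumptions. `query_all_power_states` and `query_one` are hypothetical names, and the per-node query would be a vendor command. Reporting non-responding nodes as "unknown" is this sketch's choice, not documented Moab behavior.

```python
import concurrent.futures as cf
from typing import Callable, Dict, Iterable


def query_all_power_states(nodes: Iterable[str],
                           query_one: Callable[[str], str],
                           timeout_s: float = 30.0,
                           workers: int = 16) -> Dict[str, str]:
    """Query every node's power state in parallel, with one overall deadline.

    Nodes that fail or have not answered by the deadline are reported as
    "unknown". Queries still running at the deadline are not interrupted;
    their results are simply ignored.
    """
    nodes = list(nodes)
    states = {node: "unknown" for node in nodes}
    pool = cf.ThreadPoolExecutor(max_workers=workers)
    futures = {pool.submit(query_one, node): node for node in nodes}
    try:
        for fut in cf.as_completed(futures, timeout=timeout_s):
            node = futures[fut]
            try:
                states[node] = fut.result()
            except Exception:
                states[node] = "unknown"  # a failed query counts as no answer
    except cf.TimeoutError:
        pass  # deadline reached; stragglers keep their "unknown" state
    finally:
        pool.shutdown(wait=False, cancel_futures=True)  # Python 3.9+
    return states
```

The caller blocks for at most roughly `timeout_s`. That bound is exactly what becomes painful on a large cluster when it is paid every scheduling cycle.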

The other power system job is defined by NodePowerURL, which Moab uses to power compute nodes on and off. It takes one of two mutually exclusive arguments: on or off. In green computing schemes, this job normally runs at the end of the Moab scheduling cycle, but it can also be run on demand, says Brown. The script needs to interface with the power management system in order to turn nodes on and off. Each system vendor usually has its own power management software. For example, HP has iLO and Dell has DRAC.
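
A NodePowerURL-style script must at least validate that it received exactly one of the two actions and a list of nodes. The following minimal sketch assumes an `ACTION NODELIST` argument order with a comma-separated node list. That order is a guess for illustration, so check how your Moab version actually invokes the script. `parse_power_args` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple

VALID_ACTIONS = ("on", "off")  # the two mutually exclusive actions


def parse_power_args(argv: Sequence[str]) -> Tuple[str, List[str]]:
    """Return (action, nodes) from arguments like ["on", "node01,node02"].

    The argument order is an assumption, not Moab's documented interface.
    """
    if len(argv) != 2:
        raise ValueError("expected exactly two arguments: ACTION NODELIST")
    action, nodelist = argv[0].lower(), argv[1]
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {VALID_ACTIONS}, got {argv[0]!r}")
    nodes = [n for n in nodelist.split(",") if n]  # ignore empty entries
    if not nodes:
        raise ValueError("node list is empty")
    return action, nodes
```

A real script would call this on `sys.argv[1:]`. It would then loop over the nodes, running the vendor-specific command (iLO, DRAC, IPMI and so on) for each one.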

Adaptive recently created new power management reference scripts in response to customer demand. These are all Python-based and work with OpenIPMI. All of them have been regression-tested on Adaptive QA system hardware, and they are active, working scripts.

Brown explains that when a power action is administrator-initiated, Moab runs the script immediately to power the node on or off. When the action is initiated by green policy, Moab runs the script as part of the scheduling cycle.

There's a potential issue with this setup on large clusters. With many nodes, querying the power state can take a long time, and Moab's scheduling activities are blocked in the meantime. The solution is a power state "monitor" daemon that gathers node power states at regular intervals and records them in a file. The ClusterQuery script can then read the power states from the file, giving Moab the information it needs without excessive slowdown.

The first thing the power ClusterQuery script checks for is the power monitor daemon. If the daemon is not running, the script starts it. The daemon gathers power states every "poll" interval, which the admin can set independently of the scheduling interval. While polling nodes, it stores the power state data in a new temporary file. After polling all nodes, it replaces the file read by the power ClusterQuery script with this new file. At the start of each scheduling cycle, the ClusterQuery script simply reads these power states from the file and reports them to Moab very quickly.
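
The write-to-temporary-file-then-replace pattern is what keeps the reader from ever seeing a half-written file. The following minimal sketch shows both sides under stated assumptions. The one-line-per-node `node state` file format, the `.power-states-` prefix and all function names are hypothetical, not taken from Adaptive's scripts.

```python
import os
import tempfile
import time
from typing import Callable, Dict, Iterable, Optional


def write_states_atomically(states: Dict[str, str], path: str) -> None:
    """Write 'node state' lines to a temp file, then swap it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    # Same directory => same filesystem, so os.replace is an atomic rename on POSIX.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".power-states-")
    try:
        with os.fdopen(fd, "w") as tmp:
            for node, state in sorted(states.items()):
                tmp.write(f"{node} {state}\n")
        os.replace(tmp_path, path)
    except BaseException:
        os.unlink(tmp_path)  # never leave a partial temp file behind
        raise


def read_states(path: str) -> Dict[str, str]:
    """The ClusterQuery side: read the latest complete snapshot, no polling."""
    with open(path) as f:
        return dict(line.split() for line in f if line.strip())


def monitor_loop(nodes: Iterable[str], query_one: Callable[[str], str],
                 path: str, poll_interval_s: float,
                 cycles: Optional[int] = None) -> None:
    """Poll all nodes, publish a snapshot, sleep; cycles=None runs forever."""
    nodes = list(nodes)
    done = 0
    while cycles is None or done < cycles:
        write_states_atomically({n: query_one(n) for n in nodes}, path)
        done += 1
        if cycles is None or done < cycles:
            time.sleep(poll_interval_s)  # poll interval is independent of Moab's cycle
```

Because the replace is atomic, a reader sees either the previous snapshot or the new one, never a mix. The cost is that states can be up to one poll interval old.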

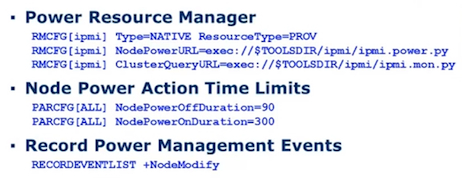

Brown then turns to configuring power management inside Moab. Admins must define a power resource manager, and Brown emphasizes that this is not TORQUE, which is a workload management tool.

The first step is to define the power resource manager, and then the node power action time limits.

There is also an option in the Moab configuration file to "record node modification events," which creates a notification whenever Moab powers compute nodes on or off.

The next step in configuring power management is to edit the config.py file and set the variables inside it. The reference scripts assume that every IPMI interface uses the same user name and password, which is normally the case, says Brown.
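
To illustrate the shared-credential assumption, here is a hypothetical config.py. All variable names and the node-to-BMC naming convention are invented for this sketch, and the real reference scripts may differ. The sketch also uses the common `ipmitool` CLI rather than OpenIPMI, which the reference scripts actually use.

```python
# config.py (hypothetical variable names; the real reference scripts may differ)
from typing import List

IPMI_USERNAME = "admin"     # assumption: one BMC account shared by every node
IPMI_PASSWORD = "changeme"  # better: load from a root-only file, not source code
IPMI_INTERFACE = "lanplus"  # ipmitool interface for IPMI v2.0 over the LAN
BMC_SUFFIX = "-bmc"         # assumption: node01's BMC answers as node01-bmc


def bmc_host(node: str) -> str:
    """Map a compute node name to its BMC hostname (site-specific convention)."""
    return f"{node}{BMC_SUFFIX}"


def ipmitool_base(node: str) -> List[str]:
    """Common ipmitool arguments; every node reuses the same credentials."""
    return ["ipmitool", "-I", IPMI_INTERFACE, "-H", bmc_host(node),
            "-U", IPMI_USERNAME, "-P", IPMI_PASSWORD]
```

Passing `-P` exposes the password in the process list. ipmitool can instead read it from the `IPMI_PASSWORD` environment variable (`-E`) or from a file (`-f`).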

The next step is to customize the power management scripts, of which there are two. In the script that performs node power control, the user replaces the node power commands with ones appropriate for their system. At this point, there is the option to replace "off" with another choice, such as "sleep," "hibernate" or "orderly shutdown." The step after that is to edit the monitor daemon script, again replacing its commands with the user's system-specific power commands. If the "off" commands in the NodePowerURL script were changed, the monitor daemon settings should be adjusted to match.
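
One way to keep the two scripts consistent is a single action table that both of them import. The following is a minimal sketch under stated assumptions. `POWER_ACTIONS`, `power_command`, `parse_status` and `run` are hypothetical names. The subcommands are ipmitool's `chassis power on|soft|status`, where `soft` requests an orderly ACPI shutdown. Adaptive's scripts use OpenIPMI instead.

```python
import subprocess
from typing import Dict, List

# One table shared by the power-control script and the monitor daemon, so a
# change to what "off" means is picked up by both.
POWER_ACTIONS: Dict[str, List[str]] = {
    "on": ["chassis", "power", "on"],
    "off": ["chassis", "power", "soft"],  # was "off"; "soft" = orderly shutdown
    "status": ["chassis", "power", "status"],
}


def power_command(base: List[str], action: str) -> List[str]:
    """Full command line, e.g. base=["ipmitool", "-H", "node01-bmc", ...]."""
    return base + POWER_ACTIONS[action]


def parse_status(output: str) -> str:
    """Map ipmitool's 'Chassis Power is on|off' text to Moab's two states.

    Anything other than 'on' is reported as 'off', i.e. 'not usable'.
    """
    return "on" if output.strip().lower().endswith("is on") else "off"


def run(cmd: List[str], timeout_s: float = 20.0) -> str:
    """Run one command; raises CalledProcessError or TimeoutExpired on failure."""
    return subprocess.run(cmd, capture_output=True, text=True,
                          check=True, timeout=timeout_s).stdout
```

If "off" were mapped to a sleep state instead, chassis power status would likely still read "on" for a sleeping node. In that case `parse_status` would need a different check, which is why the two scripts must be changed together.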

Green Computing

In the second section of the talk, Brown gets into the theory of operation behind Moab green computing, the policies used to configure it and the use cases. He says the first thing to understand is that green computing absolutely requires power management, which is why he dedicated the first half of his talk to that topic.

"You must configure the power management and must manually verify that it works correctly before you go implement the green computing, because if you don't do that and something's wrong, you aren't going to know if it's the power management or the green settings," he says.

There are three main aspects to how Moab performs auto power management for green computing:

- Green Pool

- Green Pool Maintenance

- Green Pool Operation

The green pool consists of idle nodes that are kept on standby for jobs. It is intended for sites whose queue is sometimes empty. If the queue is never empty, auto power management is not needed.

The purpose of the green pool is to balance starting jobs quickly against saving power. Without a green pool, nodes would be powered off as soon as their jobs finished. Then, when new jobs arrived, the nodes would have to be powered back on, which can take longer than many jobs take to run.

As for configuration, the administrator defines the green pool size, and Moab treats it as a target to maintain. If a job takes some of the idle nodes out of the pool, Moab powers on nodes that are off to return the pool to its prescribed size. Conversely, when jobs complete, their idle nodes return to the pool and are turned off after a specified time. Without configuration, the pool size defaults to zero, which is the same as having no green pool. In that case Moab still operates, but it must power up nodes for every job unless idle nodes have just become available.
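
The target-size rule reduces to comparing the idle, powered-on node count with the pool size. The following is a minimal sketch of that arithmetic only. `PoolAction` and `green_pool_action` are hypothetical names, and the idle waiting period before power-off is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class PoolAction:
    power_on: int  # powered-off nodes to start now
    excess: int    # idle nodes above target (power-off candidates after the wait)


def green_pool_action(pool_size: int, idle_on: int, powered_off: int) -> PoolAction:
    """Compare the idle, powered-on node count with the green pool target."""
    if idle_on < pool_size:
        # Refill the pool, but we cannot start more nodes than are currently off.
        return PoolAction(power_on=min(pool_size - idle_on, powered_off), excess=0)
    return PoolAction(power_on=0, excess=idle_on - pool_size)
```

With a pool size of 8 and 5 idle nodes, 3 nodes are powered on. With the default size of 0, every idle node eventually counts as excess, which matches the "no green pool" behavior described above.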

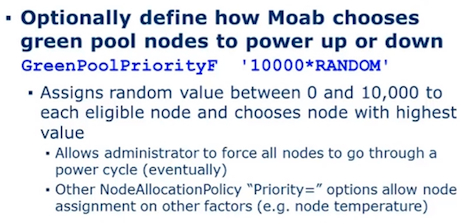

One feature that is brand new to Moab 7.2.2, says Brown, is a green pool parameter called GreenPoolPriorityF, which lets the admin assign each eligible node a priority from 0 to 10,000. Moab uses this function to determine which idle nodes to power off and which powered-off nodes to power on. If the parameter is not specified, Moab's default behavior is to go from the first node to the last.
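
The ranking can be illustrated with a sort that falls back to configured order when no priority function exists. This is a minimal sketch. `order_nodes` is hypothetical. The sort direction (highest priority first for power-on, lowest first for power-off) is an assumption, not documented Moab behavior.

```python
from typing import Callable, List, Optional


def order_nodes(nodes: List[str],
                priority: Optional[Callable[[str], int]] = None,
                highest_first: bool = True) -> List[str]:
    """Order candidate nodes for a power action.

    With no priority function, keep the configured order (first to last),
    mirroring the default Brown describes. Otherwise sort by priority clamped
    to 0..10000; Python's sort is stable, so ties keep configured order.
    """
    if priority is None:
        return list(nodes)

    def clamped(node: str) -> int:
        return max(0, min(10000, priority(node)))

    return sorted(nodes, key=clamped, reverse=highest_first)
```

For example, `order_nodes(["n1", "n2", "n3"], {"n1": 5, "n2": 100, "n3": 5}.get)` ranks `n2` first and keeps `n1` ahead of `n3`.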

According to Brown, the green pool operates as follows. When a job starts and enough powered-on nodes are available, Moab selects them first, but only if the node allocation policy is configured to do so. If there are idle nodes in the green pool, Moab will also choose them. If no powered-on nodes are available, Moab chooses from the powered-off nodes based on the prioritization parameter. The important point is to make sure the policy settings direct Moab to prioritize available powered-on nodes.
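
The selection order described above (idle powered-on nodes first, then powered-off nodes in priority order) can be sketched as follows. `select_nodes` is a hypothetical helper, and it assumes the powered-off list has already been ranked, e.g. by GreenPoolPriorityF.

```python
from typing import Dict, List


def select_nodes(needed: int, idle_on: List[str],
                 off_by_priority: List[str]) -> Dict[str, List[str]]:
    """Pick nodes for a job, preferring idle powered-on nodes.

    off_by_priority must already be sorted best-first. Returns the chosen
    nodes split by whether they still need to be powered on.
    """
    take_on = idle_on[:needed]
    take_off = off_by_priority[:needed - len(take_on)]
    if len(take_on) + len(take_off) < needed:
        raise RuntimeError("not enough nodes; the job must wait")
    return {"ready": take_on, "to_power_on": take_off}
```

Preferring nodes that are already on avoids a power-on delay for the job and keeps powered-off nodes off.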

As soon as Moab has allocated nodes, it places a job reservation on all of them. If any powered-off nodes were chosen, Moab starts a power system job for just those nodes. Remember, Moab starts the job only when the nodes are "on" and the workload RM reports them as "idle."
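
The two checks in this paragraph are easy to state as predicates: which reserved nodes need a power job, and when the job may start. In this minimal sketch the function names are hypothetical, and the lowercase `"on"`/`"idle"` strings simplify real power and RM states.

```python
from typing import Dict, List


def nodes_to_power_on(allocated: List[str], power: Dict[str, str]) -> List[str]:
    """Only the allocated nodes that are not on get a power system job."""
    return [n for n in allocated if power.get(n) != "on"]


def job_can_start(allocated: List[str], power: Dict[str, str],
                  rm_state: Dict[str, str]) -> bool:
    """Start only when every allocated node is powered on AND the workload RM
    (e.g. TORQUE or SLURM) reports it idle."""
    return all(power.get(n) == "on" and rm_state.get(n) == "idle"
               for n in allocated)
```

A freshly powered node is "on" some time before its RM daemon reports in. This is why both conditions are checked, and why the reservation has to hold the nodes in the meantime.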

Green pool replenishment uses GreenPoolPriorityF to identify powered-off nodes, and Moab starts a power system job to power up the selected nodes, raising the idle node count to the green pool size. When jobs complete, their nodes return to the green pool. After an idle waiting period, Moab uses GreenPoolPriorityF to choose which excess idle nodes to power down.
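
The power-down step combines three rules: keep the pool at its target, only touch nodes idle longer than the waiting period, and rank by priority. The following is a minimal sketch under assumptions. `nodes_to_power_off` is hypothetical, and powering off the lowest-priority nodes first is an assumed direction.

```python
import time
from typing import Callable, Dict, List, Optional


def nodes_to_power_off(idle_since: Dict[str, float], pool_size: int,
                       wait_s: float, priority: Callable[[str], int],
                       now: Optional[float] = None) -> List[str]:
    """Choose excess idle nodes to power down.

    idle_since maps each idle, powered-on node to the time it became idle.
    At most (idle count - pool_size) nodes are chosen, so the pool keeps its
    target size; only nodes idle for at least wait_s are eligible.
    """
    now = time.time() if now is None else now
    excess = len(idle_since) - pool_size
    if excess <= 0:
        return []
    eligible = [n for n, t in idle_since.items() if now - t >= wait_s]
    eligible.sort(key=priority)  # assumption: lowest priority powered off first
    return eligible[:excess]
```

With three idle nodes, a pool size of 1 and one node that became idle only recently, at most the two long-idle nodes are chosen, lowest priority first.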

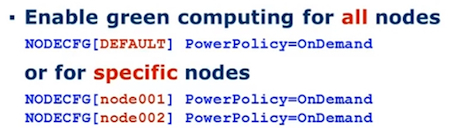

Enabling green computing policy in Moab requires a power policy configuration setting called "on demand." This can be applied to all nodes or to specific nodes, as the chart below shows. The default power policy is static.

It's also important to set the green pool size to the desired idle node count. Matching it to each system's unique needs takes some experimenting. When setting the idle wait time threshold, note that it should be longer than the power-on and power-off duration limits.
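
Brown's rule about the idle wait threshold is easy to check mechanically before deploying a configuration. The following is a minimal sketch with a hypothetical function name. The values are plain seconds supplied by the admin and are not read from any Moab file.

```python
from typing import List


def check_green_timing(idle_wait_s: float, power_on_limit_s: float,
                       power_off_limit_s: float) -> List[str]:
    """Return a warning for each power action limit the idle wait does not exceed."""
    problems = []
    for name, limit in (("power-on", power_on_limit_s),
                        ("power-off", power_off_limit_s)):
        if idle_wait_s <= limit:
            problems.append(
                f"idle wait {idle_wait_s:g}s should exceed the {name} limit {limit:g}s")
    return problems
```

An empty list means the idle wait exceeds both limits. For example, a 600-second wait against a 300-second power-on limit and a 120-second power-off limit passes.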

One way to force all nodes through a power cycle over time is to add a random element to the node priority policy settings. Adaptive developed this capability for an HPC administrator who wanted every node to go through a power cycle periodically, to confirm that it still worked.
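
Adding a random term to a node priority means different nodes win the ranking on different cycles, so over time every node gets powered off and back on. This minimal sketch illustrates the idea in Python. `with_random_jitter` and `spread` are hypothetical names. In Moab itself, the random element would be part of the configured priority expression.

```python
import random
from typing import Callable, Optional


def with_random_jitter(base: Callable[[str], int], spread: int,
                       rng: Optional[random.Random] = None) -> Callable[[str], int]:
    """Wrap a node priority function with a random term in [0, spread].

    Results are clamped to the 0..10000 priority range.
    """
    rng = rng or random.Random()

    def priority(node: str) -> int:
        return max(0, min(10000, base(node) + rng.randint(0, spread)))

    return priority
```

A larger `spread` relative to the differences in base priority shuffles the order more, so nodes rotate through power cycles faster. A `spread` of 0 reproduces the base priority exactly.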

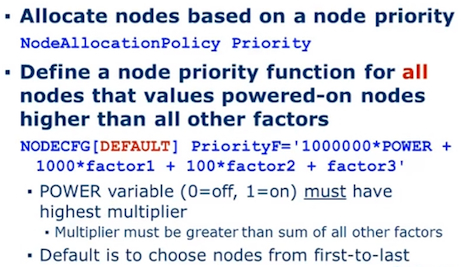

Configuring Moab to select powered-on nodes first requires a NodeAllocationPolicy of Priority. There are 20 to 30 variables on which to base priority assignments, but the POWER variable is especially important here.
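
For powered-on nodes to always rank first, the POWER term's weight must outweigh everything else in the priority expression. The following minimal sketch shows only that arithmetic. It assumes a hypothetical score in which POWER is 1 for an on node and 0 for an off node, and all other terms are non-negative and bounded. The real expression is written in Moab's configuration, and these function names are invented.

```python
from typing import Dict


def node_score(is_on: bool, other_terms: Dict[str, float],
               weights: Dict[str, float], power_weight: float) -> float:
    """Hypothetical priority: weighted POWER term plus other weighted terms."""
    power = 1 if is_on else 0
    return power_weight * power + sum(weights[k] * v for k, v in other_terms.items())


def min_power_weight(weights: Dict[str, float], max_values: Dict[str, float]) -> int:
    """Smallest integer POWER weight that beats the largest possible sum of
    the other (non-negative, bounded) terms."""
    return int(sum(weights[k] * max_values[k] for k in weights)) + 1
```

With two other terms whose weighted maxima total 50, a POWER weight of 51 guarantees that the worst powered-on node still outranks the best powered-off one.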

If only specific nodes are enabled for green computing, the PriorityF function must be defined on those nodes.

Use Cases

The main use case for Moab-style green computing is lowering operating costs by spending less money on power. Potential future use cases that Adaptive has identified include additional power-saving states, support for performance states (P-states), power caps and the ultimate advancement: bi-directional communication with the power grid.

Brown hints that future versions of Moab may include additional states (C-States), such as Suspend, Sleep, Hibernate and Orderly Shutdown, which will enable a more customized power profile.

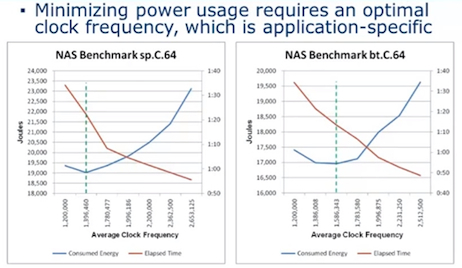

Enacting performance states (P-states) is somewhat more complex. It involves actively controlling the processor's clock frequency, either through a job submission option or through an administrator configuration in Linux. Brown explains that changing the clock frequency incurs overhead. The reason to let a user specify the clock frequency is to optimize power usage, because applications have different power profiles at different frequencies. In the example given, a NAS benchmark running on a processor with an increased clock speed had a 10 percent reduction in job runtime for only a 1 percent increase in power.
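
Energy is average power multiplied by runtime, so Brown's example can be checked with one line of arithmetic. This assumes the quoted 1 percent refers to average power draw over the job: 1.01 × 0.90 = 0.909, or roughly 9 percent less energy per job.

```python
def relative_energy(runtime_change: float, power_change: float) -> float:
    """New/old energy ratio from fractional changes (energy = power x time).

    Example: runtime_change=-0.10 means 10% shorter; power_change=0.01 means 1% more.
    """
    return (1 + power_change) * (1 + runtime_change)


ratio = relative_energy(-0.10, 0.01)
print(f"energy ratio: {ratio:.3f} (about {1 - ratio:.1%} less energy)")
```

The job also frees its nodes 10 percent sooner. On a busy cluster that capacity gain may matter as much as the energy saved.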

"That's one sweet deal in my book," says Brown.

As stated earlier, another possible use case for the green power measures is power caps, which are required in Europe and increasingly in the US. One form is an individual compute node limit, which could be administered with Moab policies and new RM capabilities. The other form is site-wide, where the power company restricts the site to a certain total (e.g., 4 MW). This method can also be administered with Moab policies and new RM capabilities.
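
Both kinds of cap amount to an admission check before a job starts. The following is a minimal sketch. `fits_power_caps` is hypothetical, and the per-node wattage estimates are assumed inputs. Obtaining trustworthy estimates is exactly the "new RM capabilities" part.

```python
from typing import Dict


def fits_power_caps(job_watts_per_node: Dict[str, float], node_cap_w: float,
                    current_site_w: float, site_cap_w: float) -> bool:
    """Admit a job only if each node stays under its per-node cap and the site
    total stays under the site-wide cap (e.g. 4 MW = 4_000_000 W)."""
    if any(w > node_cap_w for w in job_watts_per_node.values()):
        return False
    return current_site_w + sum(job_watts_per_node.values()) <= site_cap_w
```

A job that fails the site-wide check could wait for draw to fall, or could run at a lower P-state, depending on site policy.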

The last use case is one in which Moab communicates directly with the power grid. In the US, this falls under the smart grid concept. For example, if Moab has a large job to run, it relays the job's energy needs to the power grid. The power grid can also communicate directly with the HPC cluster, sending messages about available power. How Moab responds to this information depends on the policies in place.