Consolidate Virtual Machines without Sacrificing Performance

Average server utilization in many datacenters is low, estimated at between 5 and 15 percent. This is a huge waste because an idle server consumes more than half the power of a fully loaded server. For example, two servers at 15 percent load will consume nearly twice as much power as one server loaded at 30 percent. Low utilization also means that more servers than necessary have to be purchased, installed, wired, and maintained, wasting capital expenditure.
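The "nearly twice as much power" claim follows from a simple linear power model. The sketch below is illustrative only: it assumes, hypothetically, that an idle server draws 60 percent of its peak power (consistent with "more than half") and that power rises linearly with utilization. Power is expressed in units of peak power.

```python
def server_power(utilization: float, p_peak: float = 1.0, idle_fraction: float = 0.6) -> float:
    """Power draw under an assumed linear model: idle power plus a load-proportional share."""
    p_idle = idle_fraction * p_peak  # drawn even when the server does no work (assumed 60% of peak)
    return p_idle + (p_peak - p_idle) * utilization


two_servers = 2 * server_power(0.15)  # two lightly loaded servers
one_server = server_power(0.30)       # the same total work on a single server
print(f"two servers at 15%: {two_servers:.2f}")  # 1.32
print(f"one server at 30%:  {one_server:.2f}")   # 0.72
print(f"ratio: {two_servers / one_server:.2f}")  # 1.83
```

Because the idle term dominates at low load, the second server nearly doubles the bill while adding almost no useful work.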

Consolidation is the most obvious solution to this problem, and virtualization technology makes it easy to consolidate. Server virtualization splits a single physical server into multiple virtual machines (VMs), each of which can host the same applications as an individual server. In effect, software previously hosted on multiple servers can run in multiple VMs on the same server. The recent growth in deployment of virtualization technologies is a testament to the appeal and power of consolidation.

So what's the catch? Why isn't every datacenter going virtual and consolidating its software onto fewer servers? It is not that virtualization software costs more than the wasted server capital and energy. And it is not that datacenter admins do not know about virtualization. They know it only too well: consolidated VMs may not perform as well as they do when each hosted application has its own dedicated server. This is because consolidation inevitably introduces resource contention among the VMs sharing the same server. Current virtualization technology is good at partitioning CPU cores, memory space, disk space, and even network bandwidth. But it does a poor job of isolating shared memory bandwidth, processor caches, and certain other resources. Since virtualization does not prevent all forms of contention, it cannot completely eliminate performance degradation. Measurements on both benchmarks and real datacenter application workloads show that the execution time of a given computation increases by several tens of percent when consolidated, even with dedicated processor cores.

New technology developed at Microsoft Research addresses this performance problem. The prototype, aptly named Performance Aware Consolidation Manager (PACMan), lets datacenter admins set a performance threshold and then automatically minimizes the number of servers used or the energy consumed. While customer-facing applications prioritize performance, batch processes such as MapReduce may prioritize resource efficiency. For such situations, PACMan can instead accept a limit on the number of servers or a power budget and then automatically maximize performance within that budget.

Understanding VM Performance Degradation

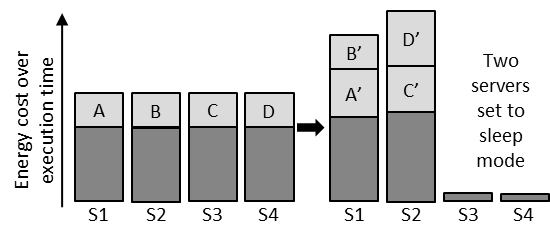

Let us start with an example. Consider a toy datacenter with 4 VMs: A, B, C, and D (see Figure 1). On the left, each of the 4 VMs runs on its own server. Suppose the task inside each VM takes 1 hour to finish. The shaded portion of each vertical bar represents the energy used over that hour: the darker rectangle is the energy used simply because the server is powered on (i.e., idle power consumption), and the rectangles labeled with VM names are the additional energy consumed by VM execution (i.e., the increase in server energy due to processor resource use). On the right, the VMs are consolidated onto two servers, and the other two servers are put in sleep mode.

Figure 1

The setup on the right is more efficient because two servers are asleep. However, due to resource contention, the execution time goes up for most of the VMs. Because the VMs run longer, both the idle energy of each active server and the additional energy used by each VM increase. This extra energy may wipe out some or all of the savings obtained by turning off two servers, and the longer running times may violate quality of service (QoS) requirements. Hence, it is important to carefully balance the energy saved by shutting down servers against the energy cost of performance degradation.
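To make this trade-off concrete, here is a minimal energy sketch of the Figure 1 scenario. All numbers are hypothetical: 100 W idle power per active server, 50 W extra per running VM, sleeping servers assumed to draw nothing, and made-up slowdown fractions. A server is assumed to stay on until its slowest VM finishes.

```python
P_IDLE_W = 100.0  # hypothetical idle power per active server, in watts
P_VM_W = 50.0     # hypothetical extra power drawn while one VM runs, in watts
BASE_HOURS = 1.0  # each task takes 1 hour on a dedicated server (from the example)


def placement_energy(servers: list[list[str]], slowdown: dict[str, float]) -> float:
    """Return energy in watt-hours for a placement.

    Each inner list is one active server. A VM's runtime is stretched by its
    fractional slowdown, and a server stays powered on until its slowest VM ends.
    """
    total = 0.0
    for vms in servers:
        runtimes = [BASE_HOURS * (1.0 + slowdown.get(vm, 0.0)) for vm in vms]
        total += P_IDLE_W * max(runtimes)  # idle energy while the server is on
        total += P_VM_W * sum(runtimes)    # additional energy for each running VM
    return total


# Four dedicated servers: no contention, so no slowdown.
dedicated = placement_energy([["A"], ["B"], ["C"], ["D"]], slowdown={})

# Two servers with mild, hypothetical slowdowns caused by contention.
consolidated = placement_energy(
    [["A", "B"], ["C", "D"]],
    slowdown={"A": 0.2, "B": 0.1, "C": 0.3, "D": 0.0},
)

# Same two servers, but every VM slows down by a hypothetical 60 percent.
heavy = placement_energy([["A", "B"], ["C", "D"]], slowdown={vm: 0.6 for vm in "ABCD"})

print(f"dedicated:    {dedicated:.0f} Wh")     # 600 Wh
print(f"consolidated: {consolidated:.0f} Wh")  # 480 Wh
print(f"heavy:        {heavy:.0f} Wh")         # 640 Wh
```

With mild contention, consolidation saves 20 percent; with heavy contention, it uses more energy than the dedicated setup, which is exactly the risk described above.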

Pack Smart

PACMan intelligently selects VMs that will contend for resources the least. For instance, if one VM uses a lot of memory bandwidth but is not affected by the size of the processor cache, while another uses a lot of cache space but little memory bandwidth, PACMan will place these two together. Of course, real-life VMs do not fall into such cleanly separated sets. Rather, each one uses memory bandwidth, cache space, and other shared resources to varying extents. This is where PACMan's smarts come into play: it chooses which VMs are best placed together, and how many of them can be packed onto a single server.
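As an illustration of pairing complementary VMs, the sketch below assumes a hypothetical pairwise interference table, where `SLOWDOWN[x][y]` is the fractional slowdown VM `x` suffers when sharing a server with VM `y`. The names `M1`/`M2` (memory-bandwidth heavy) and `C1`/`C2` (cache heavy) and all values are made up, and the exhaustive search over pairings is a stand-in for illustration, not PACMan's algorithm.

```python
# Hypothetical interference profile: SLOWDOWN[x][y] is the fractional slowdown
# VM x suffers when co-located with VM y. Values are illustrative, not measured.
SLOWDOWN = {
    "M1": {"M2": 0.40, "C1": 0.05, "C2": 0.06},
    "M2": {"M1": 0.35, "C1": 0.04, "C2": 0.05},
    "C1": {"M1": 0.08, "M2": 0.07, "C2": 0.45},
    "C2": {"M1": 0.06, "M2": 0.08, "C1": 0.30},
}


def pairings(vms: list[str]):
    """Yield every way to split an even-length list of VMs into pairs."""
    if not vms:
        yield []
        return
    first, rest = vms[0], vms[1:]
    for i, partner in enumerate(rest):
        remaining = rest[:i] + rest[i + 1:]
        for tail in pairings(remaining):
            yield [(first, partner)] + tail


def pair_cost(a: str, b: str) -> float:
    """Combined slowdown when a and b share a server."""
    return SLOWDOWN[a][b] + SLOWDOWN[b][a]


def best_pairing(vms: list[str]) -> list[tuple[str, str]]:
    """Exhaustively pick the pairing with the lowest total slowdown."""
    return min(pairings(vms), key=lambda p: sum(pair_cost(a, b) for a, b in p))


print(best_pairing(["M1", "M2", "C1", "C2"]))  # [('M1', 'C2'), ('M2', 'C1')]
```

Pairing the two memory-heavy VMs together (and the two cache-heavy ones together) is the worst choice here; mixing the types minimizes contention.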

Figure 2

We now illustrate the performance-priority mode with an example (see Figure 2). We have 6 VMs to be placed on servers. A simple placement of one VM per server would require 6 servers, which is very wasteful. A better option would be to pack them more tightly, onto 3 servers, as shown at the top of the figure. The dashed line indicates the performance threshold. Here, the yellow VM degrades too much and violates the threshold. PACMan instead computes a placement that keeps every VM within the degradation threshold, as shown at the bottom of the figure, while using as few servers as possible. The numbers below each server in the figure give the energy cost of placing VMs on that server. In this example, each active server costs 50 and each VM assigned to a server costs 10. The top placement has a total cost of 210, while the bottom one costs only 160, an energy saving of 23.8 percent in addition to the capital cost of the server that is no longer needed.
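The arithmetic behind the example can be checked with the cost model it states: 50 per active server plus 10 per placed VM. Under that model, a total of 160 for six VMs implies two active servers. The VM names and the split of VMs across servers below are hypothetical; only the counts affect the cost.

```python
SERVER_COST = 50  # energy cost per active server (from the example)
VM_COST = 10      # energy cost per placed VM (from the example)


def placement_cost(placement: list[list[str]]) -> int:
    """Total energy cost: a fixed cost per active server plus a cost per VM."""
    active = [vms for vms in placement if vms]  # empty servers are switched off
    return SERVER_COST * len(active) + VM_COST * sum(len(vms) for vms in active)


# Hypothetical VM names and per-server splits; only the counts matter here.
top = [["v1", "v2"], ["v3", "v4"], ["v5", "v6"]]
bottom = [["v1", "v2", "v3"], ["v4", "v5", "v6"], []]

top_cost = placement_cost(top)        # 3 * 50 + 6 * 10 = 210
bottom_cost = placement_cost(bottom)  # 2 * 50 + 6 * 10 = 160
savings = (top_cost - bottom_cost) / top_cost
print(f"{top_cost=} {bottom_cost=} savings={savings:.1%}")  # savings=23.8%
```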

In the performance-priority mode, PACMan efficiently finds a placement of VMs onto server cores whose energy cost is provably close to that of the optimal solution, even though finding the optimal solution is NP-hard (computer science jargon meaning that it is highly unlikely that any algorithm can find optimal solutions for large problems in a reasonable amount of time).
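Because exact search is impractical at scale, practical placers rely on fast heuristics. The sketch below is a plain first-fit heuristic, not PACMan's algorithm, and it carries no approximation guarantee. It assumes a hypothetical additive interference model (a VM's degradation is the sum of the slowdowns caused by each co-located VM), a degradation `threshold`, and a fixed number of `cores` per server; all names and values are illustrative.

```python
# Hypothetical interference table: SLOWDOWN[x][y] is the fractional slowdown
# VM x suffers when sharing a server with VM y. Values are illustrative only.
SLOWDOWN = {
    "M1": {"M2": 0.40, "C1": 0.05, "C2": 0.06},
    "M2": {"M1": 0.35, "C1": 0.04, "C2": 0.05},
    "C1": {"M1": 0.08, "M2": 0.07, "C2": 0.45},
    "C2": {"M1": 0.06, "M2": 0.08, "C1": 0.30},
}


def degradation(vm: str, group: list[str], slowdown: dict) -> float:
    """Assumed additive model: sum of slowdowns from every other VM in the group."""
    return sum(slowdown[vm][other] for other in group if other != vm)


def fits(group: list[str], slowdown: dict, threshold: float) -> bool:
    """True if every VM in the group stays within the degradation threshold."""
    return all(degradation(vm, group, slowdown) <= threshold for vm in group)


def first_fit(vms: list[str], slowdown: dict, threshold: float, cores: int) -> list[list[str]]:
    """Place each VM on the first server with a free core that keeps all VMs under threshold."""
    servers: list[list[str]] = []
    for vm in vms:
        for group in servers:
            if len(group) < cores and fits(group + [vm], slowdown, threshold):
                group.append(vm)
                break
        else:  # no existing server can take this VM: power on another one
            servers.append([vm])
    return servers


print(first_fit(["M1", "M2", "C1", "C2"], SLOWDOWN, threshold=0.15, cores=2))
# [['M1', 'C1'], ['M2', 'C2']]
```

First fit runs in polynomial time, but its result depends on the order in which VMs arrive, which is one reason an algorithm with a provable bound, like PACMan's, is valuable.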

Figure 3 illustrates PACMan's batch mode, again with an example. Here, resources are constrained: there is a fixed number of machines, and each machine has 3 cores. There are 9 VMs to be assigned to server cores. Each VM's performance degrades by an amount that depends on which other VMs are assigned to the same machine. In the top schedule, the last VM to complete is the yellow VM, while in the bottom schedule, the last VM to complete is the orange VM (marked by the horizontal dashed line). Since the orange VM in the bottom schedule completes sooner than the yellow VM in the top schedule, the whole batch finishes earlier, so the bottom schedule is better in the batch scenario.
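The batch objective can be expressed as minimizing the makespan, the completion time of the last VM. The sketch below assumes a hypothetical degradation model in which each VM exerts some shared-resource "pressure" and has some "sensitivity" to pressure from its neighbours; the VM names and numbers are made up. It searches all 280 ways to put 9 VMs on 3 machines with 3 cores each. This exhaustive search is only an illustration for a tiny instance, not PACMan's method.

```python
from itertools import combinations

BASE_HOURS = 1.0  # hypothetical runtime of each VM when run alone

# Hypothetical profile: pressure a VM puts on shared resources, and how
# sensitive the VM is to pressure from co-located VMs.
PRESSURE = {"V1": 0.30, "V2": 0.25, "V3": 0.05, "V4": 0.10, "V5": 0.20,
            "V6": 0.05, "V7": 0.15, "V8": 0.02, "V9": 0.08}
SENSITIVITY = {"V1": 0.5, "V2": 0.8, "V3": 1.0, "V4": 0.3, "V5": 0.6,
               "V6": 0.9, "V7": 0.4, "V8": 1.2, "V9": 0.2}


def completion_time(vm: str, group: list[str]) -> float:
    """Runtime stretched by the pressure of the other VMs on the same machine."""
    others = sum(PRESSURE[o] for o in group if o != vm)
    return BASE_HOURS * (1.0 + SENSITIVITY[vm] * others)


def makespan(schedule: list[list[str]]) -> float:
    """Completion time of the last VM in the batch."""
    return max(completion_time(vm, group) for group in schedule for vm in group)


def schedules(vms: list[str], cores: int):
    """Yield every split of vms into machines of exactly `cores` VMs each.

    Assumes len(vms) is a multiple of cores; otherwise nothing is yielded.
    """
    if not vms:
        yield []
        return
    first, rest = vms[0], vms[1:]
    for companions in combinations(rest, cores - 1):
        group = [first, *companions]
        remaining = [v for v in rest if v not in companions]
        for tail in schedules(remaining, cores):
            yield [group] + tail


vms = list(PRESSURE)
all_schedules = list(schedules(vms, cores=3))
best = min(all_schedules, key=makespan)
naive = [vms[0:3], vms[3:6], vms[6:9]]  # fill machines in arrival order
print(len(all_schedules))  # 280
print(f"naive makespan: {makespan(naive):.2f} h")
print(f"best makespan:  {makespan(best):.2f} h")
```

The number of schedules grows combinatorially with the number of VMs, so an exhaustive search like this is feasible only for toy examples.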

Figure 3

Experiments performed at Microsoft Research indicate significant potential savings from performance-aware consolidation powered by PACMan. It operates within about 10 percent of the theoretical minimum energy, saves over 30 percent energy compared to consolidation schemes that do not account for performance degradation, and improves total cost of operations by 22 percent. In batch mode, PACMan yields up to a 52 percent reduction in degradation compared to naïve methods. More details about the algorithms and experiments are given in the accompanying technical paper.