New Intel Xeon E5s: 50 Percent More Work For About The Same Dough

Just a few weeks ahead of the launch of EnterpriseTech, chip maker Intel rolled out its "Ivy Bridge" Xeon E5-2600 v2 processors. These chips are used in the two-socket machinery that dominates the server business these days, and provide a significant performance boost over the prior two generations of processors out of Intel. And, they do so at roughly the same price per clock, which just goes to show that, for now at least, Moore's Law is alive and well.

The Ivy Bridge Xeon E5 chips are part of a family of processors that Intel will roll out in the coming months. Low-cost variants in the Xeon E5-2400 family are expected, as are versions for low-cost four-socket machines (those are the Xeon E5-4600s) and for fat-memory machines with four, eight, or more sockets (those are the Xeon E7s).

All of the Ivy Bridge chips are based on Intel's latest 22 nanometer TriGate transistor etching process, which was used on the Ivy Bridge variants of the Core processors for end user devices last year as well as on the "Haswell" editions of the Core chips that came out earlier this year. The prior Xeon E5-2600 v1 processors, based on the "Sandy Bridge" design, were manufactured using a 32 nanometer process, and the process shrink is allowing Intel to cram a lot more transistors onto the silicon wafer. That means more cores and more cache, which is great for workloads that are more parallel and can handle it, and it is not so great for those workloads that can't. Still, as is always the case with a new chip from any vendor, there is a modest clock speed increase as well as some architectural tweaks that allow the chips to get more single-threaded work done, too. The top-end, twelve-core Xeon E5-2600 v2 processor has 4.3 billion transistors and a surface area of 541 square millimeters.

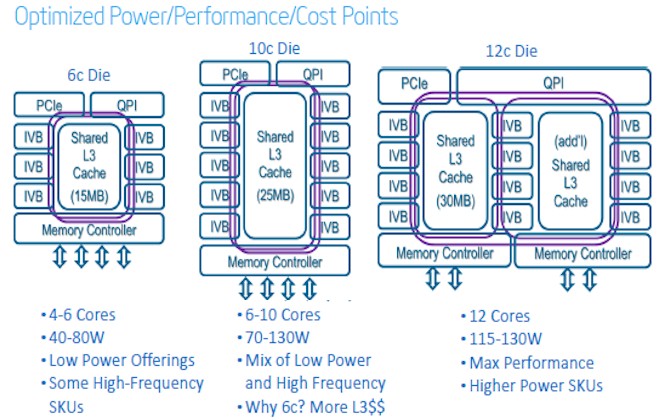

There is not one Xeon E5-2600 v2 chip, but rather three different physical chips, and this is the interesting bit about what Intel has done this time around. Intel is being pulled in a lot of different directions these days, with many of its biggest customers demanding processor configurations that better match their software. (The one shown in the picture above is the ten-core variant, and the other two have six and twelve cores.) Each one of these three processors doesn't just have a different number of cores on the die, but also different sized L3 cache memories, cache memory bandwidth, and clock speeds to meet the needs of different workloads. They all fit into the same processor sockets, which are also compatible with the existing Sandy Bridge Xeon E5-2600 v1 chips. For most system makers, this will be a straight drop-in upgrade, but there will be some new machinery tailored to the Ivy Bridge Xeon E5 chips.

Here is what the three variants of the Xeon E5-2600 v2 processors look like, conceptually:

The six-core part can be geared down to only four cores and has some higher clock speed versions as well as lower clock speeds to get it down to a 40 watt thermal envelope. The low-powered parts are important for customers seeking the maximum compute density for the lowest electricity draw and cooling requirements as well as a decent amount of performance in a given space. But they have the same 2.5 MB of L3 cache per core as the Sandy Bridge Xeon E5-2600s did, so there is not going to be a huge performance gain for these chips.

The ten-core variant has 25 MB of L3 cache, which works out to the same 2.5 MB per core, but there is a variant that is geared down to six cores running at 3.5 GHz with the full 25 MB of cache that is going to be very popular for applications that require higher clocks and more L3 cache. This E5-2643 v2 chip is priced at the same $1,552 as the E5-2667 v1, which clocks at only 2.9 GHz and which has only 15 MB of L3 cache. So for the same money, you get 21 percent more clock speed and 67 percent more L3 cache. (As always, the prices cited by Intel are the list price of each individual processor when a distributor or server maker buys them in 1,000-unit trays. The actual price distributors and server makers pay is not this, obviously, and neither is the price that end user companies are charged when they either buy upgrade kits or whole new systems.)
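For those who want to check the arithmetic, here is a minimal sketch of the comparison between the two $1,552 parts. The clock and cache figures come straight from the paragraph above; the helper name `percent_gain` is ours and purely illustrative.

```python
def percent_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100.0

# Figures quoted in the article; both chips list at $1,552.
clock_gain = percent_gain(3.5, 2.9)    # GHz, E5-2643 v2 vs E5-2667 v1
cache_gain = percent_gain(25.0, 15.0)  # MB of L3 cache

print(f"clock speed: +{clock_gain:.0f} percent")
print(f"L3 cache:    +{cache_gain:.0f} percent")
```

Because the list price is identical, these percentages are also the improvement in clock speed and cache per dollar.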

The twelve-core version of the Ivy Bridge Xeon E5 chip comes with 30 MB of L3 cache, back to that 2.5 MB per core ratio that Intel's techies say is the right balance for the wide variety of workloads that can make use of more cores and threads. There are exceptions, of course, and that is why that six-core chip mentioned above exists.

The chips have on-die PCI-Express 3.0 controllers and QuickPath Interconnect (QPI) links to hook multiple processors to each other. In the case of the Xeon E5-2600 v1 and v2, the QPI links coming off each chip hook into each other to link the processors into a shared memory system using non-uniform memory access (NUMA) clustering. In the Xeon E5-2400 variants, only one of the QPI links is used, which lowers the bandwidth, but Intel also lowers the price, and with the Xeon E5-4600, Intel uses the dual QPI links to hook four processors together to make a much less expensive four-socket machine than can be done with the Xeon E7-4800 chips.

With the twelve-core variant, Intel put two memory controllers on the die, each with only two memory channels on it instead of the four used with the other two Ivy Bridge Xeon E5 versions, and that was done to scale up the memory bandwidth a little bit to keep those dozen cores fed.

All three versions have four channels per socket and a maximum of three memory sticks per channel, and this time around the DDR3 memory can be cranked up to 1.87 GHz as well as support 800 MHz, 1.07 GHz, 1.33 GHz, and 1.6 GHz speeds. Regular 1.5 volt and low-power 1.35 volt memory is supported on the memory controllers. The PCI-Express controller on the die provides 40 lanes of I/O traffic, which can be ganged up in a number of ways by motherboard makers to create PCI-Express peripheral slots. (With two processors, you get 80 lanes plus an extra PCI-Express 2.0 x4 slot that hangs off the second processor.) The "Patsburg" C600 chipset is used to drive up to eight 3 Gb/sec SAS drives, with an optional RAID data protection feature. Collectively, the processor and chipset are known as the "Romley" platform by Intel.
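To put those memory and I/O figures in perspective, here is a rough sketch that tallies memory slots, PCI-Express 3.0 lanes, and theoretical peak memory bandwidth for one-socket and two-socket configurations. The channel, stick, and lane counts are taken from the paragraph above. The 8 bytes moved per transfer (a 64-bit DDR3 channel) is our assumption, not a figure from Intel's briefing, and the function names are illustrative only. Real-world sustained bandwidth will be lower than this ceiling.

```python
CHANNELS_PER_SOCKET = 4       # from the article
DIMMS_PER_CHANNEL = 3         # from the article
PCIE3_LANES_PER_SOCKET = 40   # from the article
BYTES_PER_TRANSFER = 8        # assumption: 64-bit DDR3 data channel

def dimm_slots(sockets: int) -> int:
    """Maximum memory sticks across all sockets."""
    return sockets * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL

def pcie3_lanes(sockets: int) -> int:
    """On-die PCI-Express 3.0 lanes (excludes the extra 2.0 x4 link)."""
    return sockets * PCIE3_LANES_PER_SOCKET

def peak_memory_gb_per_sec(memory_ghz: float, sockets: int = 1) -> float:
    """Theoretical ceiling: channels x transfer rate x bytes per transfer."""
    return sockets * CHANNELS_PER_SOCKET * memory_ghz * BYTES_PER_TRANSFER

for sockets in (1, 2):
    print(
        f"{sockets} socket(s): {dimm_slots(sockets)} sticks, "
        f"{pcie3_lanes(sockets)} lanes, "
        f"{peak_memory_gb_per_sec(1.87, sockets):.1f} GB/sec peak"
    )
```

Keep in mind that many platforms force memory to run slower when all three sticks per channel are populated, so the top capacity and the top speed may not be available at the same time.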

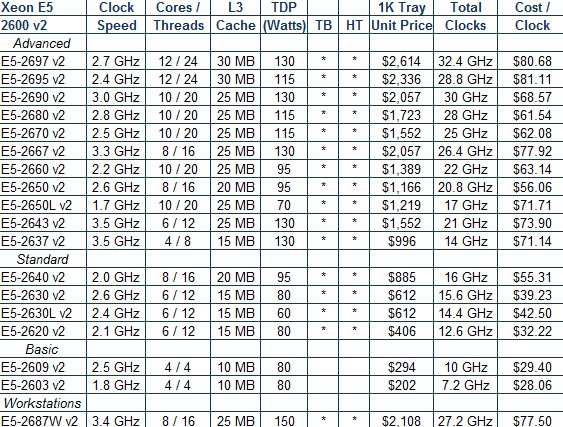

All of the Ivy Bridge chips support Intel's Trusted Execution Technology (TXT) secure execution circuits as well as the AES-NI encryption/decryption instructions that have been part of the chips since the "Westmere" Xeon 5600 processors from three years ago. As you can see from the table below, most of the Xeon E5-2600 v2 processors also support Turbo Boost (TB) and HyperThreading (HT), as did most of the Sandy Bridge and Westmere Xeon variants for two-socket machines. The chips also sport the "Bull Mountain" random number generator, which is called SecureKey by Intel, and this will no doubt be very useful for a slew of enterprise-class applications that need random numbers. (Power and Sparc chips already have random number generators, by the way.) The Ivy Bridge chips also include a new feature called OS Guard, which is designed to prevent the execution of user mode pages while in supervisor mode on the chip, a tactic that is used by Stuxnet and other pieces of malware.

Intel is grouping the Xeon E5-2600 v2 processors into four categories, as you can see from the table above, and this is not just arbitrary but rather is based on the memory and QPI speeds supported by each class. The Advanced chips let memory run at the full 1.87 GHz and the QPI links run at 8 gigatransfers per second (GT/sec). (For some reason, the exception here is the low-voltage E5-2650L v2 chip, which only lets memory run at 1.6 GHz even though its QPI links run at full speed.) With the Standard parts, the QPI links are geared down to 7.2 GT/sec and memory maxes out at 1.6 GHz. The Basic versions of the chip slow the QPI links down to 6.4 GT/sec and the memory tops out at 1.33 GHz. The final category is Workstations, and here the core count is dropped and the QPI links and memory run full throttle.

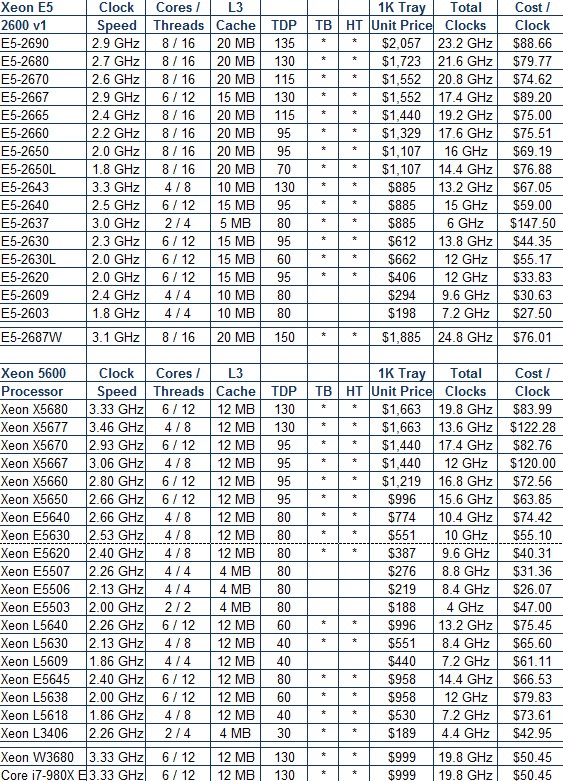

We like to do comparisons here at EnterpriseTech, so to give a rough – and we realize how rough this is – idea of how the different chips stack up, the table above also adds up all the clocks across the cores and calculates the cost per clock for each model. As you can see, bang for the buck is all over the place, and chips get more expensive as you get more and faster features. There are no surprises there, this being Earth. The funny bit is that the pricing per unit of CPU clock capacity is not all that different, generally speaking, from the prior two generations. Take a look:

The top-end and low-end parts in the Xeon E5-2600 v1 and v2 lines for two-socket servers offer better bang for the buck than their Westmere Xeon 5600 predecessors. For the v1 and v2 chips, in a number of cases the chips are a little more expensive, but offer a lot more performance. The top-bin twelve-core part offers about 50 percent more performance on a variety of workloads and does so with a 45 percent improvement in performance per watt. These are precisely the kinds of numbers that are going to get financial services companies, where time is money, to do a processor swap if their workloads can make use of more cores and more threads to speed them up. And for those who need more clocks and cache to speed up their software, that six-core, 25 MB L3 cache chip is going to look very enticing indeed.
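The cost-per-clock metric used in the comparisons above is simple enough to sketch: multiply cores by clock speed to get aggregate gigahertz, then divide the list price by that. The example below runs it on the only two chips whose prices are quoted in the text. The six-core count for the E5-2667 v1 is not stated above and is our assumption (it squares with 15 MB of L3 at 2.5 MB per core), and the function name is illustrative.

```python
def cost_per_ghz(list_price: float, cores: int, clock_ghz: float) -> float:
    """List price divided by aggregate clocks (cores x GHz)."""
    aggregate_ghz = cores * clock_ghz
    return list_price / aggregate_ghz

# (list price in dollars, cores, base clock in GHz)
chips = {
    "E5-2643 v2": (1552, 6, 3.5),
    "E5-2667 v1": (1552, 6, 2.9),  # core count is an assumption
}

results = {name: cost_per_ghz(*spec) for name, spec in chips.items()}
for name, dollars in results.items():
    print(f"{name}: ${dollars:.2f} per GHz")
```

As noted, this is a very rough yardstick. It ignores cache, memory speed, Turbo Boost, and architectural gains per clock, so it will tend to understate the advantage of the newer part.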

The thing to remember is that the processor only makes up between 20 and 30 percent of the cost of a two-socket system, and if you can boost performance on the order of 25 percent on single-threaded work or 50 percent on multithreaded work at a marginal 10 percent price increase, then a processor upgrade makes more sense than doing a box swap or even waiting until the next generation of chips comes out from Intel. Provided you really need all that extra performance now.
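Here is a back-of-the-envelope sketch of that upgrade math. It shows how much performance per dollar improves for a given performance gain and price increase, using the 25 percent, 50 percent, and 10 percent figures from the paragraph above. Whether the 10 percent applies to the whole system or only to the processors is our reading, not something spelled out above, so the sketch covers both interpretations; the function names are hypothetical.

```python
def perf_per_dollar_gain(perf_gain: float, price_increase: float) -> float:
    """Fractional improvement in performance per dollar."""
    return (1.0 + perf_gain) / (1.0 + price_increase) - 1.0

def system_price_increase(cpu_share: float, cpu_price_increase: float) -> float:
    """System-level price bump if only the processors get pricier."""
    return cpu_share * cpu_price_increase

# Reading 1: the 10 percent increase applies to the whole system.
single_thread = perf_per_dollar_gain(0.25, 0.10)
multi_thread = perf_per_dollar_gain(0.50, 0.10)
print(f"single-threaded: +{single_thread:.1%} performance per dollar")
print(f"multithreaded:   +{multi_thread:.1%} performance per dollar")

# Reading 2: the 10 percent applies only to the processors, which are
# 20 to 30 percent of the system cost.
for share in (0.20, 0.30):
    bump = system_price_increase(share, 0.10)
    gain = perf_per_dollar_gain(0.50, bump)
    print(f"CPU share {share:.0%}: system +{bump:.1%}, multithreaded +{gain:.1%}")
```

Under either reading, the performance gain comfortably outruns the price increase, which is the point of the argument.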

With the future Haswell Xeons, Intel will be moving to a new socket and server platform, so all of the boxes in a cluster are going to have to be replaced to get the next performance bump. If history is any guide, those Haswell Xeon E5-2600 v3 chips are 18 months away, and that is a long time to wait in the extreme scale universe. Many shops won't wait.