IBM Pushing for Java Accelerated on GPUs

If you have been waiting for the Java community to get that popular enterprise programming language accelerated on GPU coprocessors, you are in good company. John Duimovich, a distinguished engineer at IBM and its Java CTO, is right there with you.

Oracle is hosting its JavaOne conference in San Francisco this week, outlining the plans for the future of Java on both client devices and servers, and Duimovich was part of the keynote lineup. As part of his presentation, he showed off some benchmark results on accelerating Java routines with GPUs and exhorted the community to keep working towards native Java on GPUs.

As one of the dominant forces behind the Java programming language and the largest system supplier in the world, IBM has a lot of sway in the Java community even though Oracle, through its acquisition of Sun Microsystems three years ago, probably has more. But when IBM talks, the Java community listens.

Java, which is nearly 20 years old, is one of the most popular programming languages in the world with over 9 million programmers worldwide. A very large number of enterprise applications as well as a lot of the programs that comprise modern Web infrastructure are written in Java.

Duimovich spent a lot of his keynote talking about using hardware to accelerate Java, and as you might expect, as an IBMer, he started out by talking about the mainframe a bit. But he ended up with GPUs, and that is the interesting bit as far as EnterpriseTech is concerned.

IBM's Java CTO explained how the company had juiced the interaction between DB2 databases and its Java-based WebSphere Application Server on its System z mainframes by switching from TCP/IP links to Shared Memory Communications using Remote Direct Memory Access over Converged Ethernet. (Yes, that is *quite* a mouthful, and it is abbreviated SMC-R to give us all a break.) The upshot was that by tweaking the network links, IBM was able to reduce latencies between systems by 40 percent and at the same time cut CPU overhead, which is quite pricey indeed on a mainframe.

IBM has also put offload engines on the latest System z processors, announced in the summer of 2012, and it is able to hand zlib compression off to those on-chip engines, reducing CPU usage by 10 percent for this piece of the Java workload.

In both cases – and this is the important point – this acceleration was completely transparent to the Java applications and required no changes in the code.
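To make the "no changes in the code" point concrete, here is a minimal sketch of ordinary Java compression code written against the standard `java.util.zip` API (`Deflater` and `Inflater`), which produces zlib-format data. The class name `ZlibRoundTrip` and its helpers are hypothetical. The assumption, following the keynote's description, is that any hardware offload sits beneath this standard API inside the JVM. The code itself cannot tell whether an offload engine is in use, and on most machines none is.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Collections;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

public class ZlibRoundTrip {
    // Compresses bytes into the zlib format via the standard JDK API.
    // Nothing here selects a device: any hardware offload (assumed, not
    // detectable from this code) would happen inside the JVM's Deflater.
    static byte[] compress(byte[] input) {
        Deflater deflater = new Deflater();
        try {
            deflater.setInput(input);
            deflater.finish();
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            while (!deflater.finished()) {
                int n = deflater.deflate(buf);
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } finally {
            deflater.end(); // release native zlib memory promptly
        }
    }

    // Reverses compress(); rejects truncated or corrupt input.
    static byte[] decompress(byte[] compressed) {
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(compressed);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                out.write(buf, 0, n);
                // No progress and no more input: the stream is incomplete.
                if (n == 0 && !inflater.finished()
                        && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new IllegalArgumentException("truncated stream or preset dictionary required");
                }
            }
            return out.toByteArray();
        } catch (DataFormatException e) {
            throw new IllegalArgumentException("not valid zlib data", e);
        } finally {
            inflater.end();
        }
    }

    public static void main(String[] args) {
        byte[] original = String.join("", Collections.nCopies(1000, "Java on System z "))
                .getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(original);
        boolean ok = Arrays.equals(original, decompress(packed));
        System.out.println(original.length + " bytes -> " + packed.length + " bytes, round trip ok: " + ok);
    }
}
```

The application only ever sees `compress` and `decompress`; whether the bytes are squeezed by a general-purpose core or by dedicated silicon is an implementation detail of the runtime, which is exactly what makes such acceleration transparent.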

Like others who are looking to speed up Java, Duimovich and his peers at IBM want to bring even more hardware to bear. "We are thinking about GPUs," he said in his keynote. "I look at GPUs. I have one on my laptop and I have many in my home computers, and they have ridiculously large amounts of CPU, many threads, thousands of registers, thousands of threads, and teraflops – literally teraflops – of performance. And I don't currently get that out of my Java."

The OpenJDK development community is working on Project Sumatra, of course, but this is taking too long as far as Duimovich is concerned.

"There is Project Sumatra, and it is in OpenJDK, and is working to exploit Lambda, which is great to have concise expressions for parallel," Duimovich explained. "There's going to be a roadmap over time and Sumatra is probably looking at JDK 9. So this is the right time to talk about this – JDK 9, in 2016, we're going to be in a good spot to exploit because there is a hardware roadmap to drive this. But I would like to see it done sooner. I would like to see what we could do now and what we could drive to."

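Project Sumatra's target is the data-parallel style that JDK 8's lambdas and streams make concise. The sketch below shows that style with two hypothetical helpers, `sumOfSquares` and `saxpy` (the classic "a times x plus y" vector kernel). On a standard JVM this runs on CPU cores through the common fork/join pool and nothing is offloaded to a GPU. That Sumatra would offload these exact pipelines is an assumption made for illustration.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

public class ParallelSquares {
    // Sum of i*i for i in [0, n): a reduction written as a lambda pipeline.
    // .parallel() lets the JVM split the range across worker threads.
    static long sumOfSquares(int n) {
        return IntStream.range(0, n)
                .parallel()
                .mapToLong(i -> (long) i * i)
                .sum();
    }

    // out[i] = a * x[i] + y[i] (SAXPY), one independent lambda call per index.
    // Each index writes only its own slot, so no synchronization is needed.
    static float[] saxpy(float a, float[] x, float[] y) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("x and y must have the same length");
        }
        float[] out = new float[x.length];
        IntStream.range(0, x.length).parallel().forEach(i -> out[i] = a * x[i] + y[i]);
        return out;
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(1_000_000));
        System.out.println(Arrays.toString(saxpy(2.0f, new float[] {1, 2, 3}, new float[] {1, 1, 1})));
    }
}
```

Both methods express "do the same arithmetic on every element, independently," which is the shape of work that maps naturally onto thousands of GPU threads; the second call in `main` prints `[3.0, 5.0, 7.0]`.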
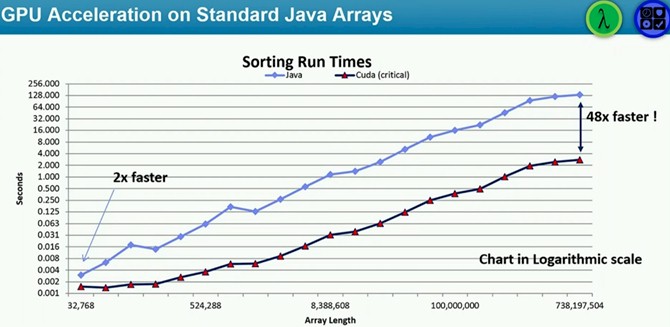

Duimovich did not provide any specifics about how this might be accomplished, but he did throw out a benchmark test to encourage the Java community to move a bit faster on accelerating Java with GPUs. Here is a sorting benchmark that Duimovich said was run on an unspecified system using a GPU from Nvidia using its CUDA development environment:

With a relatively small array of numbers (32 KB), as the chart above shows, the GPU that Duimovich chose for his systems (the exact feeds and speeds were not available at press time) was able to sort it about twice as fast as the CPU.

"This kind of surprised us, because we thought that sending the data back and forth was kinda not worth it," he said. "But when you go to the high-end, with three-quarters of a gig, you can get up to 48X faster just for sorting." He pointed out that the chart uses a logarithmic scale and that developers should be impressed by that speedup.

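IBM did not publish the code behind the chart, so for a sense of what the CPU side of such a comparison looks like, here is a hypothetical harness that times `Arrays.sort` against JDK 8's `Arrays.parallelSort` on random `int` arrays. Treating the 32 KB data point as 8,192 four-byte ints is an assumption, since the element type was not disclosed, and the larger size is kept far below the three-quarters-of-a-gig high end so it runs on a default heap. A single timed run after a crude warm-up is not a rigorous benchmark (a tool such as JMH is better suited), and nothing here reproduces IBM's GPU numbers.

```java
import java.util.Arrays;
import java.util.Random;

public class SortBaseline {
    // Random ints with a fixed seed so runs are repeatable.
    static int[] randomInts(int count, long seed) {
        Random rnd = new Random(seed);
        int[] a = new int[count];
        for (int i = 0; i < a.length; i++) {
            a[i] = rnd.nextInt();
        }
        return a;
    }

    static boolean isSorted(int[] a) {
        for (int i = 1; i < a.length; i++) {
            if (a[i - 1] > a[i]) {
                return false;
            }
        }
        return true;
    }

    // Sorts a copy of the data once and returns the elapsed time in milliseconds.
    static double timeSort(int[] data, boolean parallel) {
        int[] copy = data.clone();
        long start = System.nanoTime();
        if (parallel) {
            Arrays.parallelSort(copy);
        } else {
            Arrays.sort(copy);
        }
        long elapsed = System.nanoTime() - start;
        if (!isSorted(copy)) {
            throw new IllegalStateException("sort failed");
        }
        return elapsed / 1e6;
    }

    public static void main(String[] args) {
        // 32 KB and 16 MB worth of 4-byte ints (assumed element type).
        int[] sizes = {32 * 1024 / Integer.BYTES, 16 * 1024 * 1024 / Integer.BYTES};
        for (int size : sizes) {
            int[] data = randomInts(size, 42L);
            for (int i = 0; i < 3; i++) { // crude JIT warm-up
                timeSort(data, false);
                timeSort(data, true);
            }
            System.out.printf("%,d ints: sort %.2f ms, parallelSort %.2f ms%n",
                    size, timeSort(data, false), timeSort(data, true));
        }
    }
}
```

A GPU version would add the cost of copying the array to the device and back, which is the overhead Duimovich expected to dominate at small sizes.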
Generally speaking, GPU accelerators can yield about a 10X improvement in floating point performance on scientific workloads compared to the X86 processors they are paired with inside modern HPC systems. (The mileage varies greatly depending on the application and the generations of the CPU and GPU.)

For its part, Nvidia, one of IBM's partners in the OpenPower Consortium that was announced last month, is also eager to get Java running natively on its GPUs and to make it a peer of Fortran, C, C++, and Python. There are ways to get Java to dispatch work to discrete GPUs, with the open source JCuda bindings project being a popular one.

"Today, if you write Java code, the only way to accelerate it is to explicitly call the CUDA library," explains Sumit Gupta, general manager of the Tesla Accelerated Computing business unit at Nvidia. These libraries are written in C and are wrapped in Java so they can be called from inside a Java application. This is not, Gupta says, production-grade software but rather an open source project.

It is a reasonable guess – but IBM has not confirmed this – that the tests that IBM ran and that Duimovich showed for accelerating sorting algorithms for Java applications on GPUs took this approach.

The first step, it would seem, would be to take all of the libraries written by Nvidia and third parties and make them callable from inside of Java, and Gupta concurred with this. But in the long run, of course, what everyone wants is to run Java natively on the GPU itself, not just call routines from the CPU.

Gupta says that GPUs could accelerate all kinds of enterprise workloads, such as fraud detection or search engine indexing, which are both compute intensive, as well as Web analytics. "This takes GPUs beyond technical computing," Gupta says with a certain amount of glee.