Unisys Looks Ahead with Forward Xeon-InfiniBand Clusters

What do you get when you mix Xeon servers, InfiniBand switching, and virtualization and workload management software from a venerable mainframe maker? You get the new Forward systems from none other than Unisys.

The Forward systems, which go into beta testing this week, are the culmination of years of work to bring mainframe-class security, virtualization, and workload management from ClearPath mainframes to X86 systems running Windows or Linux.

Unisys has been selling versions of its mainframe platforms running atop Xeon server nodes for several years now. But now the company wants to circle back, take the same s-Par secure partitioning virtualization layer it created for those ClearPath mainframes (whether they used homegrown CMOS processors or Xeon chips from Intel to run its own OS 2200 and MCP mainframe operating systems), and use it to create InfiniBand clusters suitable for a wider array of customers.

The initial targets for the Forward machines, which will start shipping on December 2, will be the obvious ones inside of the data center: RISC/Unix system replacement and SAP ERP applications. But starting early next year, Unisys will push the Forward clusters as suitable platforms for new analytics workloads such as Hadoop and will expand out from there. The company also plans to have its OS 2200 and MCP mainframe platforms ported to the Forward machines by 2015, Jim Thompson, chief technology officer for the Technology Division at Unisys, tells EnterpriseTech. That would get Unisys to the Holy Grail: a single platform that runs both its OS 2200 and MCP mainframe workloads and its Windows and Linux workloads.

The first version of the Forward clusters will be built using PowerEdge servers from Dell based on Intel's new "Ivy Bridge-EP" Xeon E5-2600 v2 processors, which we told you about three weeks ago. The nodes in the cluster are connected using 56 Gb/sec InfiniBand interfaces and switches from Mellanox Technologies.

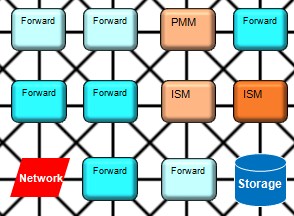

The heart of the Forward system is the Secure Partition virtualization hypervisor, which is called s-Par for short, and it is very clever in that it abstracts the NUMA interconnects inside of a single server node and the InfiniBand network links between processor nodes and presents them as the same abstraction to the partition. The s-Par partition doesn't know the difference, even if the underlying hypervisor does.

Unisys is not trying to do memory coherency across the server nodes in the Forward fabric, but it is using the Remote Direct Memory Access (RDMA) feature of InfiniBand to hook external storage directly into the Forward server nodes, allowing data to be placed directly from the disk arrays into the main memory of those nodes.

"It is not NUMA across the InfiniBand links, but we have the ability to so that," says Thompson. "There just aren't a lot of operating systems that can support it. The thing about the interconnect architecture is that we are not media dependent. In the initial release, the interconnect is memory and InfiniBand and we treat them uniformly. The upper layers of the software can't tell, they are just using a service. And the reason this is important is that InfiniBand is not necessarily forever. We may want to use 40 Gb/sec or 100 Gb/sec Ethernet, or tin cans and string, or a hyperdrive photon converter - whatever it might be - I need to be able to bring that in on the south side of the interconnect interface without disturbing it on the north side at all. The interconnect very much supports the kind of NUMA you are talking about, but we have to wait for the technology to catch up with optical backplanes and such."

Unisys thinks that the s-Par partitioning technology will appeal to customers for a number of reasons, even if they already use VMware's ESX Server or Microsoft's Hyper-V in their infrastructure today. The s-Par hypervisor is lightweight, with very little overhead, says Thompson, because it makes heavy use of the virtualization and security features of the Xeon processors. Moreover, the hypervisor allows peripherals attached to the system to be allocated directly to an s-Par partition and then just gets out of the way. Finally, and perhaps most importantly, s-Par provides "deterministic performance," which is a requirement on ClearPath mainframes. This means that the hypervisor allocates a performance level and memory and I/O capacity to a partition, and that is it: no other partition on the machine can touch those resources.
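To make "deterministic performance" concrete, here is a minimal sketch of static, non-overcommitted allocation, assuming a simplified model in which a partition's share is a count of dedicated cores, a fixed memory size, and a set of directly assigned I/O devices. `PartitionSpec` and `StaticPartitioner` are hypothetical names for illustration; the source does not describe s-Par's actual allocation logic. Once granted, resources leave the free pool, so a later request can fail but can never eat into an existing partition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionSpec:
    """Hypothetical request for a partition's dedicated resources."""
    name: str
    cores: int
    memory_gb: int
    io_devices: frozenset = frozenset()


class StaticPartitioner:
    """Grants dedicated cores, memory, and I/O devices with no overcommit."""

    def __init__(self, cores: int, memory_gb: int, io_devices: set) -> None:
        self.free_cores = list(range(cores))
        self.free_memory_gb = memory_gb
        self.free_io = set(io_devices)
        self.partitions: dict = {}

    def create(self, spec: PartitionSpec) -> dict:
        # Check everything first so a failed request changes nothing.
        if spec.name in self.partitions:
            raise ValueError(f"partition {spec.name!r} already exists")
        if spec.cores > len(self.free_cores):
            raise ValueError("not enough free cores")
        if spec.memory_gb > self.free_memory_gb:
            raise ValueError("not enough free memory")
        if not spec.io_devices <= self.free_io:
            raise ValueError("I/O device unknown or already assigned")

        # Remove the granted resources from the free pool entirely, so no
        # other partition can ever be handed the same cores or devices.
        cores = self.free_cores[:spec.cores]
        del self.free_cores[:spec.cores]
        self.free_memory_gb -= spec.memory_gb
        self.free_io -= spec.io_devices
        grant = {"cores": cores, "memory_gb": spec.memory_gb,
                 "io_devices": set(spec.io_devices)}
        self.partitions[spec.name] = grant
        return grant


if __name__ == "__main__":
    # Illustrative host: two eight-core sockets and 256 GB of memory.
    host = StaticPartitioner(cores=16, memory_gb=256,
                             io_devices={"hba0", "nic0"})
    print(host.create(PartitionSpec("sap", 8, 128, frozenset({"hba0"}))))
    print(host.create(PartitionSpec("unix", 8, 128, frozenset({"nic0"}))))
    try:
        host.create(PartitionSpec("extra", 1, 1))
    except ValueError as err:
        print("rejected:", err)  # nothing left to grant; nothing is shared
```

The design choice is the point: a shared-scheduler hypervisor would admit the third partition and time-slice it against the others, while this model refuses it outright.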

The Forward systems are starting out with two-socket Xeon E5-2600 v2 nodes, but Thompson says that Unisys will eventually deploy clusters using four-socket nodes based on Xeon E5-4600 v2 processors, which Intel has not yet announced. Unisys will also be able to add Xeon E7-4800 and E7-8800 v2 processors, expected early next year, for customers who need even beefier nodes. Because the Xeon E7 chips will support up to 12 TB of main memory in a single NUMA image, Thompson says there is not much of a need to try to do shared memory using InfiniBand as a memory backplane. (We would argue that you could make an economic case, if the performance was not too bad. An eight-socket Xeon E7 machine costs a lot more than four two-socket Xeon E5 machines.)

While Unisys has been using Dell PowerEdge servers as the foundation for its X86-based ClearPath mainframes, the company is not tied to the Dell iron. Thompson says that as long as a server is running a modern Xeon processor that has Intel's Virtualization Technology (VT) circuitry to help with server virtualization as well as a number of other security features such as Trusted Execution Technology (TXT), the s-Par hypervisor can run on the machine. That means customers who have preferences for IBM, Hewlett-Packard, or other server brands will be able to use them as the building blocks for a Forward cluster.
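On a Linux host, a quick first check for the two Intel features Thompson names is the `flags` line of `/proc/cpuinfo`, where VT-x shows up as `vmx` and TXT's Safer Mode Extensions as `smx`. This sketch assumes those two flags are the relevant test; the source does not spell out the full list of "other security features" s-Par needs, and a flag being present does not guarantee that firmware has enabled the feature. `cpu_flags` and `missing_features` are hypothetical helper names.

```python
# Flags Linux reports in /proc/cpuinfo for the two features named above.
# Assumption: these two flags are a first-pass proxy, not a full s-Par check.
REQUIRED_FLAGS = {
    "vmx": "Intel VT-x",
    "smx": "Intel TXT (Safer Mode Extensions)",
}


def cpu_flags(cpuinfo_text: str) -> set:
    """Return the feature flags from the first 'flags' line, or an empty set."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def missing_features(cpuinfo_text: str) -> list:
    """Names of required features whose flag is absent, sorted."""
    flags = cpu_flags(cpuinfo_text)
    return sorted(name for flag, name in REQUIRED_FLAGS.items()
                  if flag not in flags)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            missing = missing_features(f.read())
    except FileNotFoundError:
        print("/proc/cpuinfo not found; this check is Linux-only")
    else:
        print("missing:", ", ".join(missing) if missing else "none")
```

Matching the key exactly (rather than with `startswith`) keeps newer kernels' separate `vmx flags` line from being mistaken for the main flag list.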

The initial Forward cluster nodes are configured with two eight-core Xeon E5-2667 v2 processors running at 3.3 GHz plus either 128 GB or 256 GB of main memory. The nodes have sixteen drive bays for local storage, and use a Mellanox ConnectX-3 adapter to hook into the InfiniBand fabric that links the server nodes to each other and to external storage. On the Linux side, Red Hat Enterprise Linux 6.4 and SUSE Linux Enterprise Server 11 SP3 are supported, and for Windows, you can use Microsoft's Windows Server 2008 R2 SP1 or Windows Server 2012 in either the Datacenter or Standard Edition.

Pricing for the Forward clusters was not announced, but clearly if Unisys is going to be successful, it is going to have to price aggressively against Dell, IBM, and HP. It will no doubt be easier for Unisys to capture X86 workloads running on Windows and Linux in its existing ClearPath mainframe shops, but the company is hoping that the combination of InfiniBand and s-Par gives it a chance to get its foot in the door at other data centers where there are no ClearPath machines.