Windows Server Plus InfiniBand Speeds Storage, VM Migration

Microsoft will ship its R2 update to the Windows Server 2012 operating system at the end of this week, and one of the key improvements in the platform will be a significant performance boost for InfiniBand networking linking storage to servers.

The gains are so large, say executives at Mellanox Technologies, the dominant supplier of InfiniBand adapters and switches, that companies are starting to use InfiniBand rather than Fibre Channel to link storage to servers.

Microsoft worked with Mellanox to get support for the Remote Direct Memory Access (RDMA) protocol tuned up for the initial Windows Server 2012 release, which came out in September 2012. RDMA lets a network card in one system reach out over the InfiniBand network and directly access memory in another system without going through the operating system's network stack. Because this dramatically lowers latency, RDMA is one of the reasons InfiniBand is a preferred interconnect for parallel supercomputer clusters.

You don't have to use InfiniBand adapter cards and switches if you want the low latency that comes from RDMA, by the way. You can run the RDMA over Converged Ethernet, or RoCE, protocol over 10 Gb/sec or 40 Gb/sec Ethernet switches and adapters, which provides the same direct memory functionality. If you really want to, you can use the iWARP protocol to run RDMA over the TCP networking stack. (Hardly anyone does that any more.) Mellanox sells both InfiniBand and Ethernet products, so it is neutral in that regard. However, the latencies are lower and the bandwidths are higher for InfiniBand running native RDMA compared to Ethernet running RoCE.

Microsoft and Mellanox ran a generic Windows Server 2012 R2 benchmark test for an application stack with relatively heavy network usage. The CPU overhead associated with running the operating system and network interface card drivers was on the order of 47 percent of the total processing capacity of the machine, leaving only around 53 percent of the capacity for the actual applications. By offloading the network I/O to network cards supporting RDMA, the total overhead on the system dropped to a mere 12 percent of the CPU capacity, leaving 88 percent for the applications to play in.
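To put those percentages in perspective, the short Python sketch below works out how much more CPU capacity the applications get once the network I/O is offloaded. It uses only the two overhead figures quoted above; the function name is ours and is purely illustrative.

```python
def app_capacity_gain(overhead_before, overhead_after):
    """Ratio of CPU capacity left for applications after vs. before offload.

    Both arguments are fractions of total CPU capacity (0.47 means 47 percent).
    """
    app_before = 1.0 - overhead_before  # share left for applications before
    app_after = 1.0 - overhead_after    # share left for applications after
    return app_after / app_before


# Figures from the benchmark described above: 47 percent overhead without
# RDMA offload, 12 percent with it.
gain = app_capacity_gain(0.47, 0.12)
print(f"Applications get about {gain:.2f}x the CPU capacity")
```

Going from 53 percent to 88 percent of the machine is roughly a two-thirds increase in the capacity available to applications, on the same hardware.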

Both Microsoft and Mellanox are particularly interested in accelerating storage for Windows Server 2012 R2, which has a new release of the Server Message Block (SMB) network file system that was created for OS/2 more than two decades ago and eventually made its way to Windows (with some name changes along the way). The latest update is SMB 3.02, and it has been tweaked and tuned to run in conjunction with RDMA with less CPU overhead, explains Kevin Deierling, vice president of marketing at Mellanox. (When SMB is paired with RDMA, it is called SMB Direct.)

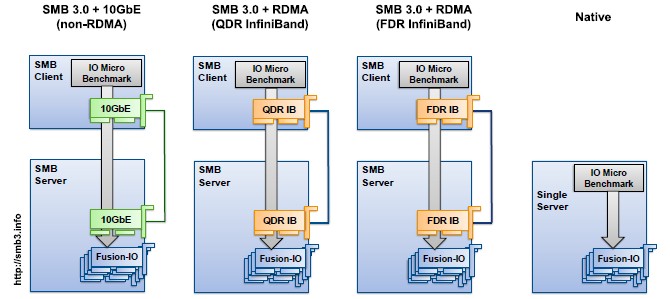

The comparison that Mellanox is excited about pits plain vanilla Ethernet running at 10 Gb/sec against InfiniBand sporting RDMA because these are the two technologies that companies deploying new systems and networks are choosing between. Mellanox tested four different scenarios with the new Windows Server 2012 R2 and RDMA driver software, as shown below:

In the first three cases, a server reaches out over the network to a storage server crammed with Fusion-io flash-based PCI cards. In the first scenario, Windows Server uses plain vanilla SMB 3.02 with a 10 Gb/sec Ethernet link and no RDMA between the server and the storage array. The two middle scenarios have InfiniBand links between the machines with RDMA turned on, with two different speeds of InfiniBand. And the final scenario has the I/O benchmark running on the storage server itself so there is no network delay at all. (All four tests used 512 KB block sizes for the data transfers between the machines.) And here is what the relative throughput and I/O operations per second came out to be:

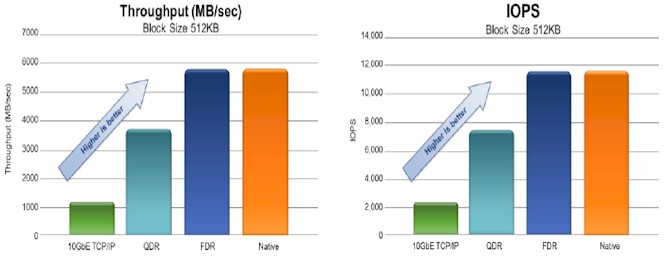

The Ethernet setup without RDMA can do around 1 GB/sec of throughput and a little more than 2,000 IOPS on the file serving test. The Quad Data Rate (QDR) InfiniBand setup, which has RDMA turned on over connections running at 40 Gb/sec, can push something on the order of 3.7 GB/sec of throughput and 7,500 IOPS.
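The throughput and IOPS figures are two views of the same measurement, tied together by the 512 KB block size used in the tests. The sketch below checks that relationship. It assumes binary units (1 KB = 1,024 bytes, 1 GB = 1,024³ bytes), which is our guess at how the numbers were reported, and the function name is illustrative.

```python
# Assumption: binary units (1 KB = 1024 bytes, 1 GB = 1024**3 bytes).
BLOCK_BYTES = 512 * 1024
GB_BYTES = 1024 ** 3


def iops_for_throughput(gb_per_sec, block_bytes=BLOCK_BYTES):
    """I/O operations per second needed to sustain a given throughput."""
    return gb_per_sec * GB_BYTES / block_bytes


# Approximate throughput figures quoted in the article.
scenarios = [
    ("10 Gb/sec Ethernet, no RDMA", 1.0),
    ("QDR InfiniBand with RDMA", 3.7),
]
for label, gb_per_sec in scenarios:
    print(f"{label}: about {iops_for_throughput(gb_per_sec):,.0f} IOPS")
```

Under that assumption, 1 GB/sec works out to 2,048 operations per second and 3.7 GB/sec to a bit under 7,600, which lines up with the "little more than 2,000" and 7,500 IOPS cited above.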

The last two columns are where it gets interesting. The combination of SMB Direct and Fourteen Data Rate (FDR) InfiniBand, which runs at 56 Gb/sec, delivers the same throughput and IOPS over the network that is possible inside of the storage server itself. Yes, this sounds like magic. But this is what can happen when the operating system is kept out of the networking loop between machines. Incidentally, the CPU overhead from the network stack fell by 50 percent between the 10 Gb/sec Ethernet and 56 Gb/sec FDR InfiniBand scenarios. That meant a big chunk of the processing capacity in the server was given back to applications, which drove the network connection harder.

The enhanced RDMA support with Windows Server 2012 R2 is not just about SMB Direct and storage. Other parts of the Windows stack are being optimized to take advantage of RDMA or RoCE and SMB Direct. For instance, live migration of Hyper-V virtual machines between two physical machines can also make use of it.

In one test, which allowed for live migrations to stream over multiple network links between two machines, it took a little more than 60 seconds to do a live migration of a Hyper-V partition. If you turned on compression and squished the files to be migrated, you could get it down to maybe 14 seconds. But using SMB Direct as a Hyper-V live migration transport (without compression) on top of InfiniBand with RDMA allowed this live migration to take place in around 8 seconds.
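For a rough sense of scale, the sketch below turns those three migration times into speedups relative to the uncompressed multi-link run. The times are the approximate figures quoted above, and the dictionary labels and function name are our own shorthand.

```python
# Approximate live migration times in seconds, as quoted in the article.
migration_seconds = {
    "multiple links, no compression": 60.0,
    "multiple links, with compression": 14.0,
    "SMB Direct over InfiniBand RDMA": 8.0,
}


def speedup(baseline_seconds, new_seconds):
    """How many times faster the new run is than the baseline run."""
    return baseline_seconds / new_seconds


baseline = migration_seconds["multiple links, no compression"]
for name, seconds in migration_seconds.items():
    print(f"{name}: {speedup(baseline, seconds):.1f}x")
```

On these rounded numbers, compression is a bit more than four times faster than the baseline, and SMB Direct over InfiniBand is about seven and a half times faster.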

The main thing, says Deierling, is that customers are getting to the point where they only want to see one kind of cable linking servers to each other and to outboard storage. And in a battle between InfiniBand and Fibre Channel, in many shops – particularly those building new infrastructure clouds – Fibre Channel switches are losing to InfiniBand.

It will be interesting to see if this trend gains traction in enterprise data centers, which generally have Ethernet to link servers to each other, to the outside world, and to network file systems. Enterprises bought into the idea of storage area networks and their Fibre Channel switches to link them back to servers many years ago, and they are still prevalent. But that doesn't mean it will always be this way.

One early adopter of the Windows Server-InfiniBand combo is Pensions First, a British company that sells risk management software for defined-benefit pensions. This software is not sold off-the-shelf, but rather it runs on Pensions First's own cloud. This cloud is built using the very latest releases of the Microsoft stack: Windows Server, Hyper-V, and SQL Server.

The cloud has Mellanox switching gluing it together, starting with ConnectX-3 adapters in the application servers and storage servers. Several 108-port SX6506 InfiniBand switches (which run at 56 Gb/sec) link the application servers to the storage servers using SMB Direct. Legacy Dell EqualLogic disk storage hooks into those Windows-based storage servers through SX1036 Ethernet switches (using iSCSI over Ethernet), and the outside world links into the whole shebang over 36-port SX6036 switches. The latter switch has a virtual protocol interface that lets it speak either Ethernet or InfiniBand to the outside world and translate it to InfiniBand on the uplinks to the SX6506 core switches.