Exar Compresses HDFS, Boosts Hadoop Performance

Here at EnterpriseTech, we are always on the hunt for any technology that can be used to push performance on large-scale systems.

At the Strata/Hadoop World conference in New York, we came across a compression card made by Exar for the Hadoop Distributed File System that not only cuts the amount of disk capacity needed for a Hadoop cluster, but also has the side benefit of boosting the performance of the cluster.

Exar, if you are not acquainted with it, is a semiconductor and subsystem component maker based in Fremont, California, that has a broad line of products that span networking, storage, industrial, and embedded systems and provide power management, data compression, connectivity, security, and mixed-signal processing. The AltraHD card announced at Strata/Hadoop World is a compression product that is focused on two key parts of Hadoop where crunching the data makes a big difference.

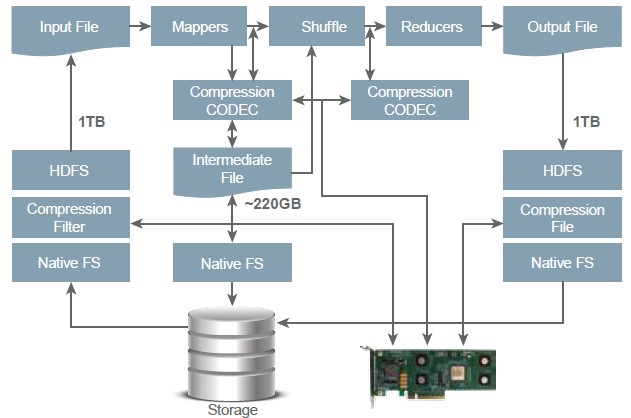

Hadoop generally keeps three copies of each data chunk spread around the cluster for both performance and resiliency reasons, and the terabytes can quickly add up on a production cluster with hundreds or thousands of server nodes. Hadoop supports a number of software-based compression algorithms, including zlib, gzip, bzip2, Snappy, LZO, and LZ4; the programs that implement them are called codecs, and it is common to use one of them. The zlib codec is the default in the open source Apache Hadoop distribution. No matter which codec is used, intermediate data generated during the map stage is compressed, and during the shuffle phase the mapped data is compressed before being sent over the network to the reducers, where it is decompressed for further processing. This packing and unpacking of data is normally done by the processors in the Hadoop cluster running one of the codecs above, and the idea behind AltraHD is to offload this compression and decompression work from those processors to the codec software and hardware on the card.
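To make the shuffle-path compression concrete, here is a minimal Python sketch of a word-count job in which map output is deflated with zlib (the algorithm behind Hadoop's default codec) before the shuffle and inflated again on the reduce side. The function names and the pickle serialization are illustrative assumptions, not Hadoop's actual interfaces; in a real cluster, the equivalent of these zlib calls is the CPU work that the AltraHD card is meant to take over.

```python
import pickle
import zlib


def map_task(lines):
    """Hypothetical mapper: emit (word, 1) pairs, as in classic word count."""
    return [(word, 1) for line in lines for word in line.split()]


def compress_for_shuffle(pairs, level=6):
    """Serialize and deflate map output before it crosses the network.
    In stock Hadoop this runs on the host CPUs; AltraHD offloads it."""
    return zlib.compress(pickle.dumps(pairs), level)


def reduce_task(payload):
    """Inflate the shuffled bytes, then sum the counts for each key."""
    pairs = pickle.loads(zlib.decompress(payload))
    counts = {}
    for key, value in pairs:
        counts[key] = counts.get(key, 0) + value
    return counts


# Usage: repetitive map output shrinks a lot before the shuffle.
lines = ["to be or not to be"] * 1000
pairs = map_task(lines)
payload = compress_for_shuffle(pairs)
counts = reduce_task(payload)
print(len(pickle.dumps(pairs)), "bytes raw ->", len(payload), "bytes shuffled")
print(counts["to"])  # 2000
```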

The AltraHD card also compresses data in HDFS itself. Exar has created an application-transparent file system filtering layer, called CeDeFS, that sits below HDFS and compresses all of the files stored there, invisibly to HDFS and to all of the applications that run atop it. Rob Reiner, director of marketing for data compression and security products at Exar, tells EnterpriseTech that a single AltraHD card delivers 3.2 GB/sec of compression and decompression throughput. If you need more compression speed on your Hadoop clusters, you can put multiple AltraHD cards into a system and gang them together for parallel compression and decompression.
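Ganging cards works because independent chunks of data can be compressed at the same time. The following is a rough Python sketch under stated assumptions: each worker thread stands in for one compression engine (hypothetically, one AltraHD card), the 1 MiB chunk size is arbitrary, and `aggregate_throughput_gbps` simply multiplies Exar's quoted 3.2 GB/sec by the card count, which is an idealized linear-scaling assumption.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per work unit (arbitrary choice for this sketch)


def split(data, size=CHUNK_SIZE):
    """Cut a buffer into independent chunks that can be compressed separately."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_compress(data, workers):
    """Compress chunks concurrently; each worker plays the role of one card.
    pool.map preserves chunk order, so output lines up with the input."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.compress, split(data)))


def parallel_decompress(chunks, workers):
    """Inflate chunks concurrently and reassemble them in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(zlib.decompress, chunks))


def aggregate_throughput_gbps(cards, per_card=3.2):
    """Idealized linear scaling from the quoted 3.2 GB/sec per card; real
    systems would also be limited by PCIe, memory, and disk bandwidth."""
    return cards * per_card


# Usage: a round trip through four "cards" returns the original bytes.
data = b"hadoop " * 1_000_000
chunks = parallel_compress(data, workers=4)
restored = parallel_decompress(chunks, workers=4)
print(len(chunks), "chunks, round trip ok:", restored == data)
print(aggregate_throughput_gbps(2), "GB/sec with two cards (idealized)")
```

Chunking trades a little compression ratio (each chunk starts with an empty dictionary) for the ability to spread work across engines.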

Based on early runs of the Terasort benchmark, Exar has found that the AltraHD card can boost the performance of MapReduce jobs by 63 percent. And for data at rest inside HDFS, the compression algorithms used on the Exar card can shrink the storage footprint by a factor of five. Exar's test setup had a NameNode with two four-core Xeon E5520 processors running at 2.3 GHz and 16 GB of main memory, plus eight data nodes, each with two six-core Xeon E5-2630 processors running at 2.3 GHz and 96 GB of main memory. These machines ran CentOS 6.2, and the data nodes had 12 SATA disk drives with a total of 3 TB of capacity. The cluster using native LZO compression ran the Terasort test in 20 minutes and 44 seconds. Putting one AltraHD card in each server node reduced the Terasort runtime to 12 minutes and 28 seconds and crammed the data down to 600 GB.
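The Terasort runtimes can be turned into a speedup with a few lines of arithmetic. This Python sketch uses only the two times reported above; on this simple basis the numbers imply roughly a 1.66X speedup, or about a 40 percent cut in runtime, so Exar's 63 percent figure presumably rests on a somewhat different metric or baseline.

```python
def to_seconds(minutes, seconds):
    """Convert a minutes:seconds runtime into seconds."""
    return minutes * 60 + seconds


baseline = to_seconds(20, 44)  # native LZO compression: 1,244 seconds
altra = to_seconds(12, 28)     # one AltraHD card per node: 748 seconds

speedup = baseline / altra                   # jobs finished per unit of time
runtime_cut = (baseline - altra) / baseline  # share of wall-clock time saved

print(f"speedup: {speedup:.2f}x")                # speedup: 1.66x
print(f"runtime reduction: {runtime_cut:.1%}")  # runtime reduction: 39.9%
```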

To hedge a bit, Exar is saying that customers should see a 3X to 5X reduction in the disk capacity needed for their existing Hadoop jobs thanks to the AltraHD card. That means you can fit your triple-replicated Hadoop data on a third of the disk drives you would normally need, and perhaps even fewer, depending on the nature of your data. Given that most Hadoop clusters are disk- and I/O-bound rather than CPU-bound, this could mean a radical reduction in the number of nodes required for a given Hadoop workload. And that can turn into big savings, depending on the configuration of the machines and the nature of the data.
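A back-of-the-envelope drive count shows where the "third of the disk drives" figure comes from. In this Python sketch the 100 TB raw dataset is a hypothetical example; the 3 TB drive size matches the SATA drives discussed in this article, and the calculation ignores scratch space, free-space headroom, and the fact that some data compresses worse than others.

```python
import math


def drives_needed(raw_tb, drive_tb, replication=3, compression_ratio=1):
    """Drives required to hold raw_tb of data under HDFS-style replication
    after compressing it by compression_ratio (no headroom or temp space)."""
    stored_tb = raw_tb * replication / compression_ratio
    return math.ceil(stored_tb / drive_tb)


# Hypothetical 100 TB dataset on 3 TB drives, at Exar's quoted ratios.
for ratio in (1, 3, 5):
    count = drives_needed(raw_tb=100, drive_tb=3, compression_ratio=ratio)
    print(f"{ratio}x compression: {count} drives")
# 1x compression: 100 drives
# 3x compression: 34 drives
# 5x compression: 20 drives
```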

The AltraHD card only compresses HDFS files and handles the internal codec operations in the MapReduce layer, but Reiner says to stay tuned for support for other file systems that are often used underneath Hadoop. The AltraHD card will work with any Hadoop distribution, but thus far only the distributions from Cloudera, Hortonworks, and Intel are certified for it.

Exar did not announce pricing for its card, and when pushed, Reiner said it was well below $10,000. How much less, he would not say. EnterpriseTech does not like playing the pricing guessing game, unless we have to. Let's take an HP ProLiant DL380e Gen8 server as a compute node. It uses the less expensive Xeon E5-2400 processors, and to be precise, let's put two six-core chips in it. This server has room for fourteen 3.5-inch SATA drives in its 2U chassis. A six-core E5-2440 processor running at 2.4 GHz costs $850, and a 3 TB SATA drive spinning at 7,200 RPM costs $635.

Based on the speedup and compression from the Terasort test, the AltraHD card basically saves you most of one of those processors and spares you from buying eleven of those disks. By our math, that works out to a value of somewhere around $7,700 just holding performance steady. And given the added complexity and cost, perhaps a price somewhere between $3,000 and $4,000 can be justified to customers. As you use more expensive processors or disks, the relative value of the AltraHD card goes up.
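The $7,700 estimate can be reproduced from the component prices above. In this Python sketch, the 85 percent share of a processor is our assumption standing in for "most of one of those processors"; the disk count and prices come from the text.

```python
CPU_PRICE = 850   # six-core Xeon E5-2440 at 2.4 GHz, per the pricing above
DISK_PRICE = 635  # 3 TB 7,200 RPM SATA drive, per the pricing above


def card_value(disks_saved, cpu_share_pct):
    """Hardware cost avoided per node: whole disks plus a share of one CPU.
    cpu_share_pct is an assumed stand-in for "most of one processor"."""
    return disks_saved * DISK_PRICE + CPU_PRICE * cpu_share_pct / 100


print(f"${card_value(disks_saved=11, cpu_share_pct=85):,.2f}")  # $7,707.50
```

Saving a whole processor instead would put the figure at $7,835, so the estimate is not very sensitive to the exact CPU share.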