Researchers Benchmark MPI, Hadoop On Autobursting Cloud

Cloud computing has emerged as a popular model for providing on-demand, network-based access to a shared pool of configurable computing resources, including networks, servers, storage, applications, and services.

While there are many commercial services providing computing resources as-a-service, among them Amazon Web Services, Microsoft Windows Azure, and Google Cloud Platform, these services typically do not have performance guarantees associated with them. Furthermore, shared production environments on cloud infrastructures have the potential to introduce unexpected performance overhead.

A team of researchers from the Department of Computer Science at the University of Texas at San Antonio, working in partnership with the cloud provider Joyent, explored how Joyent's private cloud management system, SmartDataCenter, addresses some of these issues. The Joyent cloud can burst CPU capacity automatically, which should appeal to performance-minded users.

To demonstrate that this cloud system offers scalable performance for both compute-intensive and data-intensive workloads, the researchers conducted a set of computational and communication experiments on FlexCloud, a SmartDataCenter installation at the University of Texas at San Antonio. Naturally, they chose MPI and MapReduce as their workloads. The entire process is described in their paper, Benchmarking Joyent SmartDataCenter for Hadoop MapReduce and MPI Operations.

Despite initial resistance from the HPC community, cloud computing is increasingly being used for computationally intensive jobs; the same holds true for large-scale analytical jobs. One of the main benefits of the utility model is that it provides extreme compute power without the extreme price tag of owning a parallel distributed system. In some cases, cloud solutions are used as an alternative to private clusters and grids, and in others they extend in-house computing power. While virtualization is not mandatory for a cloud, it is a key ingredient in creating a seemingly near-infinite pool of resources.

"For HPC applications, this means using clusters of virtual machines," the researchers write. "These virtual machines can share the same physical hardware with varying compute loads. Each cloud system uses their own resource contention algorithm for providing fairness to the shared resources: CPU, memory, disk, and NIC."

"In SmartDataCenter, Joyent uses what is called a fair-share scheduler which balances compute loads on a system based on contention and priority," they add.
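To make the idea of share-based scheduling with bursting concrete, here is a minimal toy model. It is not Joyent's scheduler: the function name `fair_share`, the notion of per-VM "shares" and "demand," and the redistribution loop are all assumptions made for illustration. The sketch splits CPU capacity in proportion to shares and hands capacity that idle VMs leave unused to the busy ones.

```python
def fair_share(capacity, shares, demand):
    """Toy proportional-share allocator (hypothetical, for illustration only).

    capacity: total CPU units available on the host
    shares:   dict of VM name -> relative weight under contention
    demand:   dict of VM name -> CPU units the VM currently wants
    Returns a dict of VM name -> CPU units granted.
    """
    alloc = {vm: 0.0 for vm in shares}
    active = {vm for vm in shares if demand[vm] > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total_shares = sum(shares[vm] for vm in active)
        satisfied = set()
        distributed = 0.0
        for vm in active:
            # Offer each busy VM a slice proportional to its shares,
            # but never grant more than it still needs.
            offer = remaining * shares[vm] / total_shares
            grant = min(offer, demand[vm] - alloc[vm])
            alloc[vm] += grant
            distributed += grant
            if demand[vm] - alloc[vm] <= 1e-9:
                satisfied.add(vm)
        remaining -= distributed
        if not satisfied:
            break  # every VM took its full offer; nothing left to hand out
        active -= satisfied  # leftover capacity goes to still-hungry VMs
    return alloc


if __name__ == "__main__":
    # With no contention, vm_b bursts to the whole host.
    print(fair_share(4, {"vm_a": 1, "vm_b": 1}, {"vm_a": 0, "vm_b": 4}))
    # Under contention, capacity is split by shares.
    print(fair_share(8, {"vm_a": 1, "vm_b": 1, "vm_c": 2},
                     {"vm_a": 1, "vm_b": 8, "vm_c": 8}))
```

In this model, a VM's allocation depends on what its neighbors are doing at the moment, which is one way to picture why a virtual cluster on shared hardware can behave unevenly over time.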

Due to cost concerns and limited resources, many cloud platforms employ heterogeneous compute environments, for example combining Intel Xeon and AMD Opteron parts. Others use different generations of CPUs. Even when the cloud is based on homogeneous hardware, the virtual cluster provisioned to a user can experience interference from other customers, causing it to act like a heterogeneous computing environment some of the time. The researchers recommend taking this heterogeneity into account by focusing on improving resource utilization and reducing load imbalance.

Mitigating this issue led the team to explore two techniques: MapReduce, a parallel programming framework for scheduling jobs, and the MPI concurrent programming model, which they hypothesize may yield higher performance than MapReduce. When analyzing the overhead caused by virtualization, previous research looked at message passing (MPI) parallel applications on different cloud systems, such as EC2, and reported that communication overhead is a substantial slowdown factor. The researchers wanted to explore the feasibility of scientific computing on the Joyent SmartDataCenter cloud. Having these benchmarks will help developers make more informed choices.

For all of the experiments, the researchers initiated 32 virtual machine instances, each with one virtual core instance, 1 GB memory, and 10 GB storage. Each instance was outfitted with an Ubuntu Linux 10.04 image.

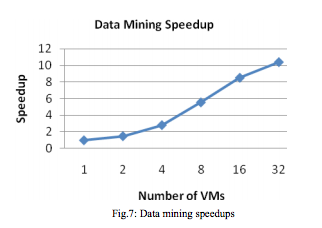

For the Hadoop MapReduce experiments, the team installed Apache Hadoop on FlexCloud and ran two benchmarks: a matrix-matrix multiplication benchmark and a data mining benchmark using files of crime data for Austin, Texas.
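For readers unfamiliar with how matrix multiplication maps onto the MapReduce model, the following is a minimal single-process sketch in plain Python. It is not the researchers' Hadoop job, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `matmul_mapreduce`) are hypothetical. The map step emits one partial product per output cell, the shuffle step groups them by cell, and the reduce step sums each group.

```python
from collections import defaultdict


def map_phase(A, B):
    """Emit ((i, j), A[i][k] * B[k][j]) for every partial product."""
    for i, row in enumerate(A):
        for k, a_ik in enumerate(row):
            for j, b_kj in enumerate(B[k]):
                yield (i, j), a_ik * b_kj


def shuffle(pairs):
    """Group emitted values by output cell, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups, n_rows, n_cols):
    """Sum the partial products for each output cell."""
    C = [[0] * n_cols for _ in range(n_rows)]
    for (i, j), values in groups.items():
        C[i][j] = sum(values)
    return C


def matmul_mapreduce(A, B):
    return reduce_phase(shuffle(map_phase(A, B)), len(A), len(B[0]))


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(matmul_mapreduce(A, B))  # [[19, 22], [43, 50]]
```

On a real cluster the map and reduce steps would run on different nodes, and the shuffle would move data across the network, which is where much of the communication cost tends to arise.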

The results show excellent scalability for both applications from 2 to 32 virtual machines. For matrix multiplication, the best result came with 32 VMs, a speedup of about 11X over two virtual machines. For the data mining benchmark, the best result also came with 32 VMs, a speedup of 10.39X.

In the MPI experiment series, the computer scientists ran basic OpenMPI communication operations as well as matrix-matrix and matrix-vector multiplication algorithms. The results demonstrated respectable scalability from 2 to 32 virtual machines, and in some cases the speedup was higher than with MapReduce. With Matrix*Matrix, for example, the speedup was 16.01X, and with Matrix*Vector it was 7.64X.
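One way to put the reported speedups in context is to compare them with the ideal case. Going from 2 to 32 virtual machines is a 16-fold increase in resources, so a perfectly scaling job would run 16 times faster. The short sketch below computes this relative parallel efficiency. It assumes all four speedups are measured against a two-VM baseline; the article states this for the MapReduce matrix result, and it is an assumption for the others. The helper name `relative_efficiency` is hypothetical.

```python
def relative_efficiency(speedup, base_vms, vms):
    """Fraction of ideal scaling achieved relative to a base_vms baseline."""
    ideal_speedup = vms / base_vms
    return speedup / ideal_speedup


# Speedups at 32 VMs as reported in the article (baseline assumed to be 2 VMs).
reported = {
    "MapReduce matrix*matrix": 11.0,   # "about 11X" in the article
    "MapReduce data mining": 10.39,
    "MPI matrix*matrix": 16.01,
    "MPI matrix*vector": 7.64,
}

if __name__ == "__main__":
    for name, speedup in reported.items():
        eff = relative_efficiency(speedup, base_vms=2, vms=32)
        print(f"{name}: {eff:.0%} of ideal")
```

Under that assumption, the MPI matrix-matrix run is essentially at the ideal 16X, the two MapReduce benchmarks land at roughly two-thirds of ideal, and the MPI matrix-vector run at a little under half.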

Based on these benchmarks, the Joyent cloud system may be a good choice for scientific applications that require MPI or MapReduce functionality. But it is difficult to say just how well the Joyent cloud stacks up against competing options. It would be helpful to run these same benchmarks on other private clouds, like ones built with OpenStack or CloudStack or vCloud, and for good measure to get some benchmarks on a bare metal cluster setup as well.

In the future, the research team plans to extend their investigation to the performance of storage services combined with computations for the FlexCloud system.

With over a decade’s experience covering the HPC space, Tiffany Trader is one of the preeminent voices reporting on advanced scale computing today.