SAP HANA Wrings Performance From New Intel Xeons

The in-memory HANA database designed by SAP to radically improve the performance of queries and transaction processing has been a boon to the company. It is the fastest growing product in the company's long history – and that is saying a lot considering how popular its R/3 enterprise resource planning software was in the 1990s.

Unlike many parallel workloads, getting extreme performance out of a HANA in-memory database does not always require a scale-out cluster of servers, and it doesn't often require a big shared memory system, either. Customers often end up with such configurations, but they generally do not start out there. In fact, the HANA sales cycle doesn't start with a discussion of hardware and databases at all, but rather with the problems that companies can't easily solve.

"We ask customers to tell us about all of the tech problems they have and can't solve," Chris Hallenbeck, global vice president of the Platform Solutions Group at SAP, explains to EnterpriseTech. "What we tend to find, much like you may see with Hadoop, that there are all of these problems and they talk around problems that they see because they don't want to sound like an idiot asking for the same thing over and over again. They know that batch windows are full. They know about aggregates and they don't want to get pounded on again. We try to go in prove to them that things have shifted, that high performance computing is now something that is available standard inside of a system and a database, and that this means the world has changed. That you can actually do real-time on massive quantities of data streaming in, with analytics. That batch windows do not have to exist."

The typical initial HANA engagement, says Hallenbeck, starts with what it calls an agile datamart sale, which is really a proof of concept for lots of different projects. In a typical large enterprise, there might be 20 real pesky problems, and maybe half of them can be addressed by HANA systems in one form or another. This initial machine starts out with a Xeon E7 server with 64 GB of main memory, which with compression can hold around 150 GB of user data. Because HANA doesn't need indexes or aggregates, this is roughly equivalent to a 300 GB relational database. This is not huge, mind you. But remember, HANA is an example of scale-in computing, where data is being stored in main memory rather than in disk to get a factor of 1,000 or more speedup in the access of data. The confluence of X86 processors that can address more memory and the relative cheapness of that memory allow for a lot to be done inside a single box of modest size. And for those who need more, servers can be scaled up with more processors and memory or HANA databases can have their schemas span multiple nodes to scale out.

The typical entry production HANA system, says Hallenbeck, is a single quad-socket Xeon E7 server with 512 GB of main memory. Customers tend to grow linearly from there, either adding more memory to the system, racking up more nodes, or both. Dell, Hewlett-Packard, IBM, and others have pre-configured appliance setups that allow for HANA clusters to be ordered. IBM has built a HANA cluster for SAP itself that has more 100 TB of main memory across more than a hundred server nodes. The largest commercial installation of HANA thus far, says Hallenbeck, is a 56-node system that has 1 TB on each node; he is not at liberty to divulge who the customer is.

Customers doing analytics tend to go for scale out clusters for their HANA workloads, but those who want to use the in-memory database for the back-end of their transaction processing systems tend to use scale-out platforms – generally fatter NUMA machines. HANA is not supported on Oracle's Sparc systems (no surprises there) and is similarly not supported on IBM's Power systems. There is no technical reason why HANA could not run on either box, according to Hallenbeck. But Oracle and SAP are rivals in databases and application software, and thus far SAP and IBM have not announced any intention of supporting HANA on Power-based systems. Now that IBM is selling off its X86 server business to Lenovo and focusing on its Power iron, perhaps this will change.

SAP has been particularly picky about the hardware that HANA can run on, mainly because with everything stored in main memory the system reliability and resiliency has to be high. To that end, HANA has only been available on Xeon E7 processors, the top-of-the-line chips that feature extra protection on main memory, allowing for recovery from chip failures and soft-bit errors that a normal Xeon E5 chip can't handle. SAP picks one processor that all vendors have to use, in this case the ten-core E7-8870 v1 running at 2.4 GHz. Vendors can pick the memory capacity and vendor they want to install in the HANA systems, but the memory modules have to be identical across all nodes in a HANA cluster. The nodes also have to be configured with Fusion-io flash memory PCI-Express cards for storing logging information, and according to Hallenbeck, IBM has done something clever and clustered these Fusion-io cards using its General Parallel File System (GPFS) to improve resiliency. All of the servers have to run SUSE Linux Enterprise Server, which is understandable given the close relationship between SAP and SUSE Linux, which are both German companies. (SUSE Linux has also been a popular option among supercomputer labs and that's another reason this is a good choice.)

Unlike most X86 systems out there in the datacenter, HANA machines typically run at 90 percent or higher utilization rates on both processors and memory. Once customers start running real-time queries and analytics, they get addicted to it.

Being tied tightly to the "Westmere-EX" generation of Xeon E7 processors has been somewhat problematic for customers who want to scale up their cores or memory inside of a single system. These Xeon E7 v1 processors debuted in April 2011, which is nearly three years ago. They were supposed to be refreshed in the fall of 2012 with a "Sandy Bridge-EX" kicker. But these Sandy Bridge-EX chips were slated for a 32 nanometer process, which meant only modest performance boosts, and a new socket type, which is a pain for server makers. Intel wanted to get the Xeon E7 chips back in synch with the other Xeons in terms of their use of chip manufacturing processors – 22 nanometers in this case – and also to get them into a common socket with the Xeon E5s. And so Intel skipped the Sandy Bridge-EX chips entirely and asked customers to wait for the "Ivy Bridge-EX" Xeon E7s instead.

Those processors started shipping to server makers as 2013 came to a close and were launched by Intel this week as the Xeon E7 v2 chips. The software engineers at SAP and Intel have worked together to make sure that the HANA in-memory database is tuned up to take advantage of all of the features of the Xeon E7 v2. Intel had over 100 engineers working with SAP on the initial HANA in-memory database, and still has ten software engineers at SAP facilities continuing this work. (If IBM wants SAP to run on Power iron, it will have to make a similar commitment.)

Aside from the factor of three increase in memory capacity and the 50 percent increase in cores, what SAP has worked hard on is making sure HANA can make full use of the AVX vector math units inside of the Xeon E7 v2 processors. The Xeon E7 v1 chips could do floating point math in their SSE units, but the Ivy Bridge cores have two AVX units, each 256-bits wide. That means a single core can process eight double-precision floating point operations per clock cycle. This is about 3.5 times the floating point oomph where the compiler hits the chip compared to the Xeon E7 v1 chips.

"We do use vector processing, and we are actually working on compressed data a majority of the time," says Hallenbeck. "So HANA looks at what version of the processor is running, and in this case, AVX gave us a lot of new things we could do in the system to make it faster. We use fixed-length encoding in our compression scheme. By going from 128-bit to 256-bit, we can double the instruction arte per cycle, but it gives us an additional set of optimizations on top of that. Plus, we have a lot more addressable memory that is local to individual cores, and we have more cores."

HANA has to make a lot of decisions about where to place data in the memory hierarchy in a single socket, then within a system, and possibly across a cluster of systems, and this gets easier if there is more memory to play with.

"Add it all up, this gives us tremendously higher throughput," says Hallenbeck with a laugh.

Initial tests run by Intel and SAP show a four-socket machine with 60 cores running at 2.8 GHz using the Xeon E7 v2 chips (the E7-4890 v2 to be precise) was able to process twice as many HANA queries per hour as a 40 core box using E7-4870 chips running at 2.4 GHz. With predictive analytics workloads running against HANA, the speedup on early tests in the SAP labs in Silicon Valley was on the order of 2.5 to 3 times on the new Xeon E7 machines versus the old ones.

What EnterpriseTech is dying to see is how the future "HANA Box" shared memory system being developed by SGI is going to perform with HANA running on it. SGI said last month it would deliver a machine with 64 TB of shared memory, using the new Xeon E7 v2 chips and its NUMAlink interconnect, tuned up for HANA.

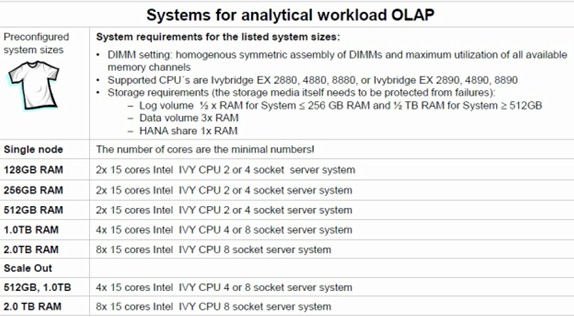

SAP is still working out what configurations of the new Xeon E7 v2 processors it will recommend, and the recommendations vary depending on if the machine will be used for analytical or transactional workloads. (You can run both on the same machine, and in fact, that is precisely the point of HANA: You query the live data in the system, not some snapshot sitting in a data warehouse that is hours or days old.) These are the preliminary recommendations for analytics systems:

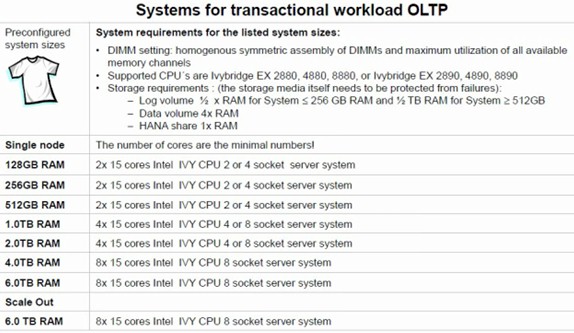

And here are the suggested configurations for online transaction processing workloads:

The HANA in-memory database was a key aspect of Intel's Xeon E7 launch this week, too. Intel showed a demo of an application used in the oil and gas industry that takes telemetry off of sensors that are attached to all of the gear that comprises an oil rig. Diane Bryant, who is general manager of the Datacenter and Connected Systems Group at Intel, explains that rigs have tens of thousands of sensors and that every minute of downtime costs on average of $16,000. So being able to predict a failure and fix it proactively rather than reactively makes a big difference.

So this unnamed oil company took analysis tools from SAS Institute, grabbed a stream of 4 TB of data coming off the rig, and ran predictive maintenance algorithms on two different configurations. In this demo, there was 4 TB of data that was streamed in from the rig and it was fed into a four-socket Xeon E7 v1 server using disk drives to store data. A new four-socket Xeon E7 v2 machine was equipped with the HANA database and 6 TB of memory to hold that data stream, and the analytics ran in around five seconds instead of 12 minutes with the disk-based system. That is a factor of 128.4 speed up.

This is the kind of performance improvement that initial HANA customers are looking for when they decide to give HANA a try. SAP has more than 3,000 customers using HANA to date, and 800 of them are running it underneath the Business Suite ERP applications. HANA posted revenues of €664 million (around $913 million) in 2013, up 69 percent.

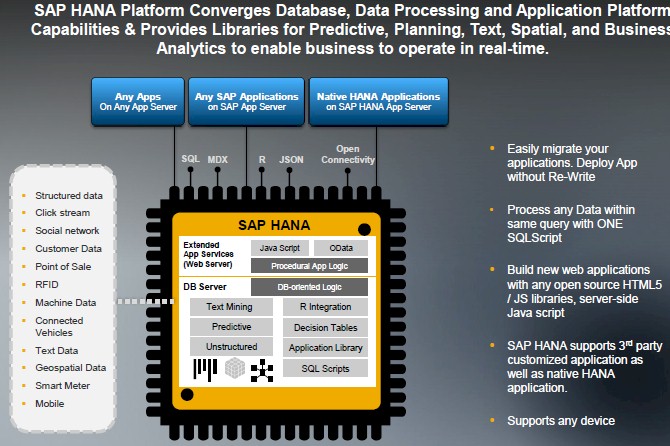

The continued uptake of HANA will be dependent on making it easier to use, and with HANA SP7, announced in December, SAP has added a lot of tools that were created as one-offs in the field for specific customers that are now available for all HANA shops to employ. The stack now includes a development workbench, which is a lightweight integrated development environment for creating and debugging objects and queries. The stack also includes the Advanced Function Library, which is a collection of 50 different functions (such as hierarchical clustering, affinity propagation clustering, or forecast smoothing) and 35 algorithms and data preparation steps that make staging information from production systems and other data sources easier. Spatial processing capabilities were also enhanced with SP7, and Hallenbeck hints that there is a lot more coming in the near term.