Google Makes Cassandra NoSQL Scream On Compute Engine

Search engine giant Google has an infrastructure and platform service called Cloud Platform, and it is up against some pretty stiff competition from Amazon Web Services, Microsoft Windows Azure, and a few others. Google is years behind AWS with infrastructure services, so it has to brag about the oomph it can bring to bear and the relatively low prices it is charging to get customers to make the jump, or better still, start out with Cloud Platform in the first place.

To that end, Google has cranked up some virtual machines on its Compute Engine infrastructure cloud and put the Cassandra NoSQL data store through its paces. Cassandra was created by social networker Facebook because it was frustrated by some of the limitations of the MySQL relational database and the Hadoop big data muncher. It is a hybrid data store that marries a columnar data format with a key-value store.

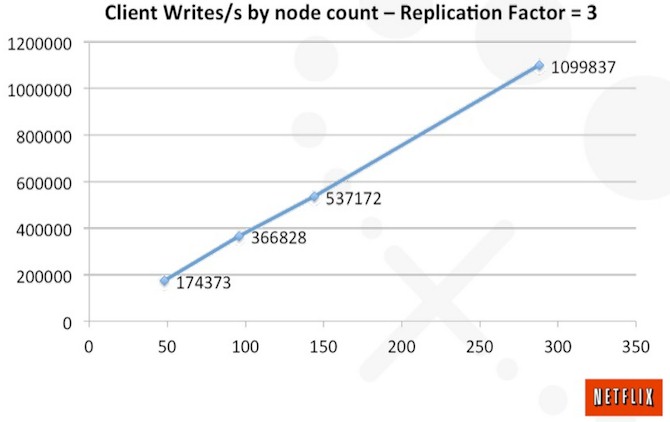

The largest Cassandra cluster known, according to the Apache Cassandra site, spans 400 server nodes. Back in 2011, Netflix, which uses Cassandra as a backend for its subscriber systems, demonstrated that a cluster with just shy of 150 server nodes (the precise number was not given) could push over 537,137 writes per second and a cluster with what looks like 280 nodes could do nearly 1.1 million writes per second. The tests at Netflix showed that Cassandra could scale linearly, as you can see below:

The precise configuration of the nodes in the Netflix test was not given. But in a blog post outlining the benchmarks Google ran, performance engineering lead Ivan Santa Maria Filho gave the feeds and speeds, and the virtual cluster for Google's Cassandra benchmark was on par with the top-end cluster in the Netflix test.

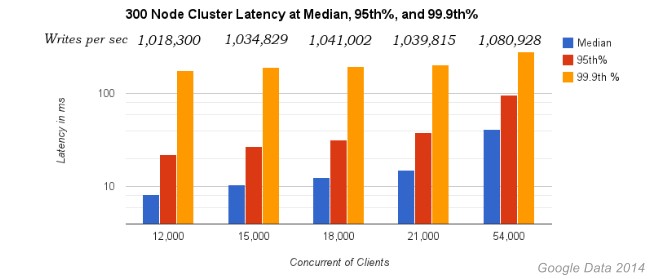

The cluster that Google set up on the Compute Engine cloud had 300 instances of its n1-standard-8 server slices, which have eight virtual cores and 30 GB of main memory each. These slices were running in its US-Central-1B datacenter facility rather than spread out across multiple datacenters. (Cassandra is cool in that it allows for NoSQL data stores to be spread across physically separated facilities.) Each one of these instances was equipped with the commercial-grade Cassandra 2.2 software from DataStax (running atop Debian Linux) and each one also had a 1 TB Persistent Disk volume. Another 30 nodes based on Compute Engine n1-highcpu-8 were set up with client software to simulate the write operations of an application. These 30 nodes used the cassandra-stress routine to write 3 billion small records (each 170 bytes) to the Cassandra data store. All of this data used the Cassandra Quorum commit protocol, which has triple replication of data and which stipulates that writes have to be complete on at least two of the three replica nodes; data is also encrypted once it is at rest.
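To make the "two of three" rule concrete, here is a minimal sketch of how a quorum is commonly computed from the replication factor: a simple majority of the replicas. The function name `quorum_size` is hypothetical and is not part of any Cassandra client API; only the replication factor of three comes from the test described above.

```python
def quorum_size(replication_factor: int) -> int:
    """Return the smallest majority of replicas: floor(RF / 2) + 1.

    Hypothetical helper for illustration; not a Cassandra API call.
    """
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return replication_factor // 2 + 1


# Triple replication, as in the Google test described above.
REPLICATION_FACTOR = 3
acks_needed = quorum_size(REPLICATION_FACTOR)

print(f"RF={REPLICATION_FACTOR}: a write must be acknowledged by "
      f"{acks_needed} replicas before it counts as complete")
```

With a replication factor of three, a write is acknowledged once two replicas have it, which is why one replica can be slow or missing without failing the write.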

In its Cassandra test, Google ramped up the number of Cassandra clients hitting those 300 nodes to show the effect on latency and writes per second with the data store. Take a look:

The median latency for the benchmark test was 10.3 milliseconds, and 95 percent of the writes completed in under 23 milliseconds. With a write commit to only one node, the cluster could push 1.4 million writes per second with a median latency of 7.6 milliseconds and a 95th percentile completion of 21 milliseconds.

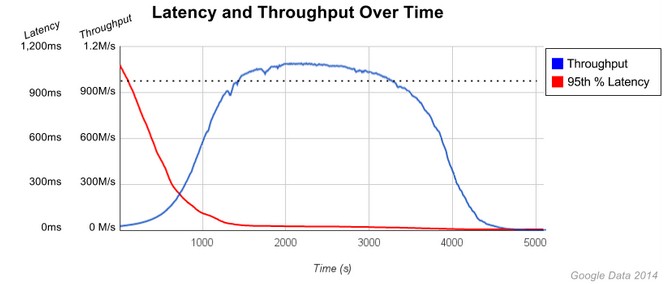

It takes a while for the Compute Engine instances to be loaded and for Cassandra to warm up, as is the case with all complex distributed software. The warm-up period is a bit longer for Cassandra because it is written in Java, which runs code in interpreted mode for a bit and then compiles it dynamically as it runs to get it closer to the iron; for most commercial Java applications, the compiled code runs something on the order of twice as fast. Google says a preconfigured environment could ramp up to the 1 million writes per second load in about 20 minutes. Google can provision, deploy, and warm up a 300-node cluster in about 70 minutes.

By the way, the latest Zing 5.9 Java virtual machine from Azul Systems, which EnterpriseTech already told you about last week, gets around this warm-up issue through some clever tricks and is taking off at financial trading firms for this very reason.

Anyway, here's what the warm-up period looks like:

Just to test how well this cluster responded to issues, Google knocked out 100 of the 300 Cassandra nodes and measured what the remaining nodes would do under test. The median latency while still maintaining 1 million writes per second was 13.5 milliseconds, and the 95th percentile writes took 61.8 milliseconds. The worst case writes were taking on the order of 1.3 seconds to complete (at the 99.9th percentile).

"We consider those numbers very good for a cluster in distress, proving Compute Engine and Cassandra can handle both spiky workloads and failures," Google wrote.

Now, here's the real kicker. The entire test consumed 70 minutes of capacity at a total cost of $330, with about $18 of that coming from the cost of the nodes to simulate the load. This sounds cheap, of course, and that is precisely Google's intent in making that statement. Those are the costs for the infrastructure, not including the licenses from DataStax for its commercial Cassandra version.

But hold on a minute. Cassandra is a back-end data store for applications, and while it may have performance needs that scale up and down, it is not like a traditional supercomputing simulation job, which gets submitted, run, and deleted.

There are 8,765.8 hours per year here on Earth, so the costs on that Cassandra data store with 300 nodes and 300 TB of persistent disk can mount up. If you do the math and sustain that load over a whole year, then you are talking about $2.34 million. Even if you only ran an average of half of that capacity over the course of a year, that is still well over $1 million to keep Cassandra humming. European customers will pay a slightly higher cost for the same job, based on current Google pricing for Compute Engine services.
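For readers who want to check the math, here is a rough sketch of the annualization. It assumes the cost of the 70-minute test scales linearly with time and that the roughly $18 for the load-generating nodes is left out, which is the reading that appears to reproduce the $2.34 million figure. The function and constant names are made up for illustration.

```python
HOURS_PER_YEAR = 8765.8   # figure used in the article
TEST_MINUTES = 70         # duration of Google's test
TOTAL_COST = 330.0        # dollars, whole test
LOAD_GEN_COST = 18.0      # dollars, approximate share for the 30 client nodes


def annual_cost(test_cost: float, test_minutes: float,
                hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Scale the cost of a short test up to a full year, assuming linear pricing."""
    cost_per_hour = test_cost / test_minutes * 60
    return cost_per_hour * hours_per_year


# Cassandra nodes and persistent disk only (assumption: load generators excluded).
cluster_only = annual_cost(TOTAL_COST - LOAD_GEN_COST, TEST_MINUTES)
# Same cluster at an average of half the capacity over the year.
half_capacity = cluster_only / 2

print(f"Full capacity, one year: ${cluster_only / 1e6:.2f} million")
print(f"Half capacity, one year: ${half_capacity / 1e6:.2f} million")
```

Including the load generators in the total would push the full-year figure closer to $2.48 million, so the exclusion matters a little but does not change the conclusion.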

Enterprise shops have to figure out if they can do it for less than that with all of their costs – datacenter, power, cooling, infrastructure, software licenses, and people – added up. And they have to be brutally honest about it. In many cases, given the skill sets and sunk costs in datacenters and infrastructure, large enterprises probably can do it for the same or less. Whether that holds true as Google gains skill in running compute and storage clouds and leverages volume economics remains to be seen.

The Cassandra benchmark test unveiled today follows a similar test that Google did last November to demonstrate the performance of Compute Engine when used as a front-end for applications. A decade ago, handling 10,000 simultaneous clients on a Web server was a big deal. But in the 21st century, Google was able to fire up 200 virtual machines behind the Compute Engine Load Balancing service and handle over 1 million requests per second, or about 5,000 requests per second for each of the 200 virtual machines. (They ran Debian Linux and the Apache Web server.) This setup cost $10 for a 300-second test.