Microsoft Adds Beefy Compute, File Service To Azure

It was a cloudy week for Microsoft at its annual TechEd shindig, which was held in Houston, Texas, this time around. The consistent theme of the announcements was that Microsoft has the scale and tools that make Azure the appropriate public cloud companion to the Windows and Linux workloads already inside enterprise datacenters. Microsoft made some nuts-and-bolts announcements for compute and storage to back up this claim.

The first important one for enterprises considering large-scale deployments on the Azure cloud is that there are two new hefty compute instances that are backed by InfiniBand interconnect. Microsoft has done a lot of work with Mellanox Technologies, one of the two suppliers (along with Intel) of InfiniBand switches and server adapters, to tune up Windows Server 2012 to make full use of this high bandwidth, low latency interconnect and its Remote Direct Memory Access (RDMA) feature. Microsoft had previously worked with Mellanox and Dell to make custom servers employing InfiniBand as the back end for the imagery applications behind the Bing Maps service affiliated with the company's Bing search engine. InfiniBand has already been used on Azure's HPC instances, and there are rumors, never confirmed by the company, that Microsoft uses InfiniBand internally in other parts of its network.

At the TechEd event this week, Microsoft rolled out Azure Compute instances called A8 and A9. These instances are backed by 40 Gb/sec InfiniBand networking, Madhan Ramakrishnan, principal lead program manager for Azure, told EnterpriseTech at a prebriefing ahead of the launch. It is interesting that Microsoft has not pushed up to 56 Gb/sec InfiniBand, which has been available for quite some time from Mellanox, but it could be that the company is recycling some internal machines in its fleet that were used for Bing and other services into the Azure Virtual Machine service. Or, alternatively, Microsoft could be skipping the 56 Gb/sec InfiniBand generation entirely and waiting for 100 Gb/sec InfiniBand to be available later this year or early next year.

The A8 instance is based on Intel's eight-core Xeon E5-2670 v1 processor (that's a "Sandy Bridge" flavor from early 2012) and it has a clock speed of 2.6 GHz and yields eight virtual CPUs. (The entire socket is dedicated to the virtual machine.) This instance has 56 GB of virtual memory capacity and the DDR3 chips in the box are running at 1.6 GHz. The A8 instance has 127 GB of disk space for the local operating system, which can be Windows or Linux, and 382 GB of temporary disk space on top of that. The machine can be allocated with up to sixteen 1 TB drives (presumably SATA) that can deliver up to 500 I/O operations per second per drive. The A9 instance doubles up the cores to sixteen and the virtual memory to 112 GB with the same allocations for local storage.
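The storage and memory figures above lend themselves to a quick back-of-the-envelope check. The sketch below uses only the numbers quoted in the article; the dictionary layout and the `summarize` helper are illustrative, and it assumes all sixteen data drives are attached and that the 500 IOPS per-drive ceiling adds up linearly across drives (for example, when striped), which real workloads may not achieve.

```python
# Per-instance figures quoted in the article; the layout is illustrative.
INSTANCES = {
    "A8": {"vcpus": 8, "memory_gb": 56},
    "A9": {"vcpus": 16, "memory_gb": 112},
}
MAX_DATA_DRIVES = 16   # up to sixteen attachable drives on either instance
DRIVE_SIZE_TB = 1      # 1 TB per drive
IOPS_PER_DRIVE = 500   # per-drive I/O operations per second ceiling


def summarize(name):
    """Derive ratios and best-case totals for one instance size."""
    spec = INSTANCES[name]
    return {
        "memory_per_vcpu_gb": spec["memory_gb"] / spec["vcpus"],
        # Assumes every drive is attached and IOPS scale linearly across drives.
        "max_data_tb": MAX_DATA_DRIVES * DRIVE_SIZE_TB,
        "max_data_iops": MAX_DATA_DRIVES * IOPS_PER_DRIVE,
    }


for name in INSTANCES:
    print(name, summarize(name))
```

Both instances work out to 7 GB per virtual CPU and, at best, 16 TB and 8,000 IOPS of attached disk, so stepping up to the A9 buys more cores and memory rather than more storage throughput.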

For the kind of simulation, modeling, parallel database, and video encoding workloads that the A8 and A9 instances are designed to support, these details matter because such applications are often tuned to specific iron. The A8 and A9 instances are only available as new instances, by the way: you cannot resize an existing A0 through A7 instance into one. And importantly, even though Linux virtual machines can run on the A8 and A9 instances, RDMA support is only available on Windows instances. RDMA support for Linux on A8 and A9 VMs is in the works.

As you might expect, these beefy Azure compute instances with InfiniBand interconnect are not cheap. The A8 instance costs $2.45 per hour running Windows, which works out to around $1,823 for a 31-day month of 744 hours. The Linux version of the A8 instance costs $1.97 per hour. The A9 instance has twice as much oomph and, on Windows, costs exactly twice as much at $4.90 per hour (around $3,646 per month); running Linux, it costs $4.47 per hour (around $3,326 per month). Not everyone is going to use such an instance for a full month, of course. The whole point of using the cloud for parallel applications is to fire up the job on a much larger virtual cluster than customers might be able to build in house and get the work done more quickly, turning shared scale into faster turnaround on simulations.
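The monthly figures above are simply the hourly rate multiplied by 744 hours (31 days of 24 hours), and the same arithmetic shows why a wide cluster need not cost more than a narrow one. This is a minimal sketch that assumes simple per-hour billing with no storage or data transfer charges; the `monthly_cost` and `job_cost` helpers and the 100 node-hour job are hypothetical.

```python
# Hourly list prices quoted in the article, in US dollars.
HOURLY = {
    ("A8", "Windows"): 2.45,
    ("A8", "Linux"): 1.97,
    ("A9", "Windows"): 4.90,
    ("A9", "Linux"): 4.47,
}
HOURS_IN_31_DAY_MONTH = 31 * 24  # 744 hours, the figure used in the article


def monthly_cost(size, os_name, hours=HOURS_IN_31_DAY_MONTH):
    """Cost of running one instance continuously for `hours` hours."""
    return round(HOURLY[(size, os_name)] * hours, 2)


def job_cost(size, os_name, nodes, hours_per_node):
    """Cost of a hypothetical parallel job: total node-hours times the rate."""
    return round(HOURLY[(size, os_name)] * nodes * hours_per_node, 2)


for size, os_name in HOURLY:
    print(size, os_name, monthly_cost(size, os_name))

# The same 100 node-hours of work: one node for 100 hours, or 50 nodes for 2.
print(job_cost("A8", "Linux", nodes=1, hours_per_node=100))
print(job_cost("A8", "Linux", nodes=50, hours_per_node=2))
```

Under these assumptions, spreading the job across fifty A8 Linux nodes costs the same $197 as running it on one node, but finishes in two hours instead of a hundred, provided the application scales perfectly, which real MPI codes rarely do.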

The A8 and A9 instances are available in the US East, US West, Europe West, and Japan East regions of the Azure cloud. There is no word on whether or when other regions will get them. The A8 and A9 compute instances support Microsoft's own MS-MPI implementation of the Message Passing Interface for clustering the nodes together to run parallel software, and you can use the HPC Pack add-on for Windows to set up a cluster on Azure.

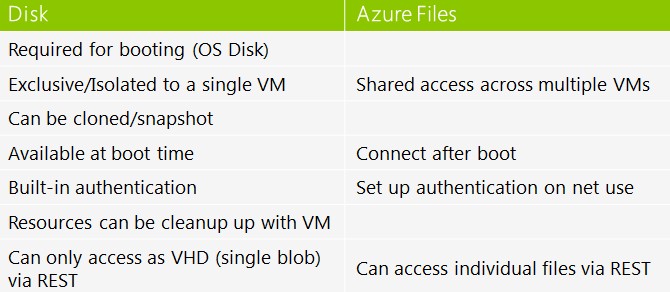

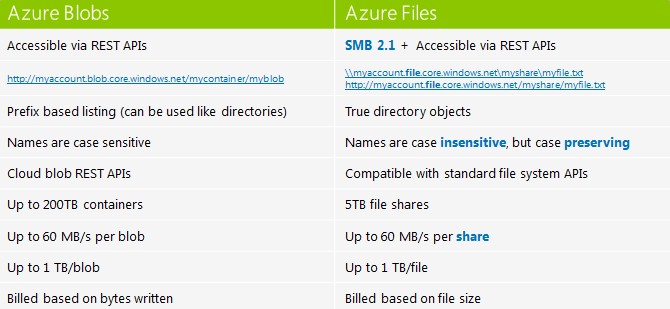

On the storage front, Microsoft has taken the Azure Storage back-end service, which currently allows for virtual machines to share disks, Binary Large Objects (BLOBs), tables, and queues, and outfitted it with an SMB layer that allows it to serve up shared files across virtual machines. Basically, it turns Azure into a file server, much as Windows has been a file server for decades now, only this time for VMs. The Azure Import/Export service is also now out of preview and available.

Azure Storage is a beast: it currently holds over 25 trillion objects across Microsoft's sixteen Azure datacenters and processes on the order of 2.5 million requests per second on average. The Azure Storage service has overlays for BLOBs that allow for object storage on a massive scale (obviously, just look at those numbers), including documents, pictures, video, music, and other media files, as well as raw unstructured data, log files, intermediary files used during MapReduce analytics, and data and device backups.
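To put those totals in more familiar units, the sketch below converts the request rate into a daily figure and divides the object count across datacenters. The per-datacenter number assumes objects are spread evenly, which the article does not claim; it is only an order-of-magnitude illustration.

```python
OBJECTS = 25e12            # "over 25 trillion objects"
DATACENTERS = 16           # "sixteen Azure datacenters"
REQUESTS_PER_SEC = 2.5e6   # "on the order of 2.5 million requests per second"
SECONDS_PER_DAY = 24 * 60 * 60

# Average request volume sustained over a full day.
requests_per_day = REQUESTS_PER_SEC * SECONDS_PER_DAY

# Assumption: objects are distributed evenly across datacenters.
objects_per_datacenter = OBJECTS / DATACENTERS

print(f"{requests_per_day:,.0f} requests per day")
print(f"{objects_per_datacenter:,.0f} objects per datacenter")
```

That comes to roughly 216 billion requests a day, and on the even-spread assumption, about 1.56 trillion objects per datacenter.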

The disks in the Azure Storage cloud are BLOBs in the VHD format used by hypervisors, and the Azure Tables service is a massive, auto-scaling NoSQL key/value store. The Queues service is a low latency, high throughput messaging system that, like the on-premises Microsoft Message Queuing middleware, allows the elements of a distributed application to be equipped with data queues so they can communicate asynchronously, which gives the application a certain amount of resiliency and fault tolerance between its elements.
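The decoupling that a message queue provides can be illustrated in miniature with Python's standard-library `queue.Queue` as an in-process stand-in. This is not the Azure Queues API; the job names and the `producer` and `consumer` functions are hypothetical. The point is that the producer finishes its work before any consumer exists, and nothing is lost because the messages simply wait in the queue.

```python
import queue
import threading

# In-process stand-in for a message queue; NOT the Azure Queues API.
work_queue = queue.Queue()
results = []


def producer(n):
    """Enqueue work and move on without waiting for any consumer."""
    for i in range(n):
        work_queue.put(f"job-{i}")
    work_queue.put(None)  # sentinel: no more work is coming


def consumer():
    """Drain messages at its own pace until the sentinel arrives."""
    while True:
        msg = work_queue.get()
        if msg is None:
            break
        results.append(msg.upper())


producer(5)  # runs to completion before the consumer even starts

worker = threading.Thread(target=consumer)
worker.start()
worker.join()
print(results)
```

A durable cloud queue extends the same idea across machines: if a consuming component crashes or falls behind, its messages remain queued until it, or a replacement, picks them up.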

The Azure Files service, which is in tech preview now, rounds out the cloudy storage picture for Microsoft's public cloud. Setting up a shared file service on Azure (or any other cloud) has not been trivial. Up until now, explained Andrew Edwards, principal software development engineer on the Azure Storage team, companies had to set up their own VM with an instance of Windows Server (or another operating system that spoke the SMB file sharing protocol) and configure that instance with some disk storage. The other VMs in the setup, running their own operating systems and applications, had to be pointed at this shared virtual file server, and companies usually had to write their own code to deal with upgrades on the shared storage host VM, node failures, and backups. With Azure Files, Microsoft handles all of that as a managed service.

Interestingly, Azure Files only supports the SMB 2.1 protocol, which has the benefit of being more widely supported by Windows and Linux applications. However, Edwards warns that because SMB 2.1 does not support encryption, Microsoft is not allowing shares to be accessed across datacenters within the Azure cloud. The SMB 3.0 protocol, which arrived with Windows Server 2012 and was updated in Windows Server 2012 R2 last fall, does support encryption and therefore could allow for cross-datacenter file sharing on Azure, but Edwards was not at liberty to say whether this was the long-term plan. Within Azure, the client operating systems on VMs that can access the shared Azure Files service include Windows Server 2008 R2, Windows Server 2012, and Windows Server 2012 R2. Microsoft is investigating support for Canonical's Ubuntu Server 13.10 and 14.04 LTS releases, and it should arguably be possible to make any Linux (or any other operating system) that speaks SMB 2.1 work with the service, whether or not it is officially supported.

During the preview, Microsoft is allowing up to 5 TB of capacity to be shared across VMs, and you can geo-replicate the share for disaster recovery purposes. The Azure Storage backend keeps three copies of the share in the background inside a region, as it already does for disks, tables, and queues. This costs 4 cents per GB for locally redundant storage (LRS in the Azure lingo) and 5 cents per GB for geographically redundant storage (GRS). Azure Files does not yet have de-duplication or compression, and the Azure Import/Export service cannot be used to bulk load data into Azure Files during the tech preview.
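As a rough sense of scale, the sketch below prices a share filled to the 5 TB preview cap at the two quoted rates. It assumes 1 TB = 1,024 GB (use 1,000 for decimal terabytes) and that the per-GB rate is the usual monthly storage charge, which the article does not state explicitly; the `share_cost` helper is hypothetical.

```python
# Quoted per-GB prices in US dollars (assumed to be monthly charges).
PRICE_PER_GB = {"LRS": 0.04, "GRS": 0.05}
PREVIEW_CAP_TB = 5
GB_PER_TB = 1024  # assumption: binary terabytes; use 1000 for decimal


def share_cost(size_gb, redundancy):
    """Storage charge for a share of `size_gb` GB at the quoted rate."""
    return round(size_gb * PRICE_PER_GB[redundancy], 2)


full_share_gb = PREVIEW_CAP_TB * GB_PER_TB
for tier in ("LRS", "GRS"):
    print(tier, share_cost(full_share_gb, tier))
```

Under those assumptions, a full 5,120 GB share comes to about $204.80 with locally redundant storage and $256.00 with geo-redundant storage, so geo-replication adds roughly 25 percent.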

Speaking of which, the Azure Import/Export service is now available worldwide, after being available only as a tech preview in the United States since last November. As the old saying goes, nothing beats the back of a truck for bandwidth, though the latency can be a little high. Customers who need to upload lots of data can ship a disk drive with their data to Microsoft, which will upload it into the Azure cloud. There is a $40 per drive handling fee until June 11, after which the price goes up to $80. (Those are US region prices.) Standard storage pricing applies to the data once it lands on the Azure Storage backend. Azure Import/Export is available in the US East, US West, US North Central, US South Central, Europe West, Europe North, Asia Pacific East, and Asia Pacific Southeast regions.
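The "back of a truck" quip can be made concrete by treating a shipment as a very high latency network link. This sketch assumes a hypothetical shipment of four 3 TB drives spending 48 hours in transit, uses decimal units, and ignores the time Microsoft needs to copy the data in; only the $40 and $80 handling fees come from the article.

```python
SECONDS_PER_HOUR = 3600


def effective_mbps(total_tb, transit_hours):
    """Effective throughput of a shipment in megabits per second.

    Uses decimal units: 1 TB = 10**12 bytes, 1 Mb = 10**6 bits.
    """
    bits = total_tb * 10**12 * 8
    return bits / (transit_hours * SECONDS_PER_HOUR) / 10**6


def handling_fees(drives, after_june_11):
    """US-region handling fee: $40 per drive until June 11, $80 afterward."""
    return drives * (80 if after_june_11 else 40)


# Hypothetical shipment: four 3 TB drives, 48 hours door to door.
print(round(effective_mbps(4 * 3, 48), 1))
print(handling_fees(4, after_june_11=False))
```

That works out to roughly 556 Mb/s of effective bandwidth for $160 in handling fees, with a "latency" of two days, which is exactly the trade-off the old saying describes.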