Neural Nets Improve Google Datacenter Efficiency

Key attributes of the smart grid, namely adding intelligence to the networks that distribute power, are being applied by Google to the design and operation of power-hungry datacenters.

The search giant said its quest for ever-greater energy efficiency in its sprawling complex of datacenters has led it to adopt a new tool: machine learning. Specifically, it is trying to leverage neural networks that can be trained to optimize datacenters and further reduce energy usage.

Google's approach also offers the potential of virtualizing datacenter operations so engineers can run simulations to squeeze more energy efficiency out of their operations without having to take a datacenter offline.

Loosely modeled on the central nervous system, artificial neural networks are a class of machine learning algorithms that learn by adjusting the strength of the connections between simple processing nodes as they are trained for a particular application. Typical use cases include pattern and voice recognition along with computer vision.

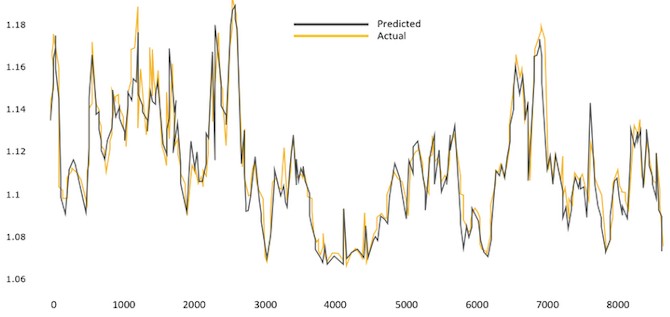

Google engineers said they have developed a neural network framework that "learns" from actual datacenter operations in order to model energy performance and predict the power usage effectiveness, or PUE, of the datacenter. PUE is a standard energy efficiency metric for datacenters, defined as the total power draw of the datacenter divided by the power consumed by the compute, storage, and networking elements inside it. The closer that number gets to one, the better. Since 2008, Google's trailing twelve-month average PUE across all of its datacenters has dropped from just under 1.22 to 1.12.
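
To make the ratio concrete, here is a minimal sketch of the PUE arithmetic described above. The function names and the kilowatt readings are illustrative assumptions, not figures from Google.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_fraction(pue_value: float) -> float:
    """Non-IT power (cooling, distribution losses, etc.) per unit of IT power."""
    return pue_value - 1.0


# Hypothetical readings: 1,000 kW of IT load in a facility drawing 1,120 kW.
example = pue(1120.0, 1000.0)
print(f"PUE = {example:.2f}, overhead = {overhead_fraction(example):.0%} of IT power")
```

Read this way, a PUE of 1.12 means roughly 12 percent of additional power is spent on everything other than the IT gear itself.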

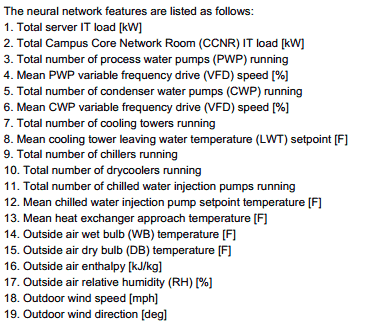

Google calculates PUE at its datacenters every 30 seconds. It also collects operational data about its server farms on a daily basis along with critical statistics like outside air temperature and cooling equipment settings.

Google datacenter engineers realized that they could mine all this sensor information to improve datacenter PUE. One of them, Jim Gao, came up with models based on machine learning that could be used to improve datacenter performance and save energy. (Gao wrote a blog post about the effort and has also published a white paper about what Google has done to mesh neural networks with datacenter operations.)

The Google datacenter neural network sifted through large amounts of operational data like IT loads and outside temperature looking for patterns. That information was run through Gao's model to discern more patterns in the complex interactions within the modern datacenter.
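
The general idea of fitting a network to operational data can be sketched in a few lines. What follows is a toy, not Gao's model: the two inputs, the made-up `synthetic_pue` formula, the `TinyMLP` class, and the network size are all assumptions chosen to keep the example small and dependency-free.

```python
import math
import random


def synthetic_pue(it_load: float, outside_temp: float) -> float:
    """Invented ground truth for illustration; both inputs are scaled to 0..1."""
    return 1.10 + 0.08 * outside_temp - 0.04 * it_load + 0.03 * it_load * outside_temp


class TinyMLP:
    """One hidden tanh layer and a linear output, trained by plain SGD."""

    def __init__(self, n_inputs: int, n_hidden: int, rng: random.Random):
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        return hidden, sum(w * h for w, h in zip(self.w2, hidden)) + self.b2

    def predict(self, x) -> float:
        return self.forward(x)[1]

    def sgd_step(self, x, y: float, lr: float) -> None:
        hidden, pred = self.forward(x)
        err = pred - y  # gradient of 0.5 * (pred - y)**2 with respect to pred
        for j, h in enumerate(hidden):
            # Backpropagate through tanh before w2[j] is updated.
            grad_pre = err * self.w2[j] * (1.0 - h * h)
            self.w2[j] -= lr * err * h
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_pre * xi
            self.b1[j] -= lr * grad_pre
        self.b2 -= lr * err


def mean_squared_error(model: TinyMLP, samples) -> float:
    return sum((model.predict(x) - y) ** 2 for x, y in samples) / len(samples)


rng = random.Random(0)
data = []
for _ in range(200):
    features = [rng.random(), rng.random()]  # [IT load, outside temperature]
    data.append((features, synthetic_pue(*features)))

model = TinyMLP(n_inputs=2, n_hidden=5, rng=rng)
loss_before = mean_squared_error(model, data)
for _ in range(100):  # epochs
    for x, y in data:
        model.sgd_step(x, y, lr=0.05)
loss_after = mean_squared_error(model, data)
print(f"MSE before training: {loss_before:.5f}, after: {loss_after:.5f}")
```

A production model would draw on far more inputs and a held-out test set; the point here is only that the network's weights are adjusted until its predictions track the recorded PUE.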

Once the neural network was trained, Google says, Gao's model predicted PUE with 99.6 percent accuracy. The results can then be applied to improve the energy efficiency of Google datacenters.

The measly 0.4 percent error rate for the prediction model demonstrates that "machine learning is an effective way of leveraging existing sensor data to model [datacenter] performance and improve energy efficiency," Gao concluded in the Google white paper.
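
The article does not spell out how the accuracy figure was computed. One common convention, shown here purely as an assumption, is to report accuracy as 100 percent minus the mean absolute percentage error between measured and predicted values. The PUE readings below are made up for illustration.

```python
def mean_absolute_percentage_error(actual, predicted) -> float:
    """Average of |actual - predicted| / |actual|, expressed in percent."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Hypothetical measured and predicted PUE values.
measured = [1.10, 1.12, 1.15, 1.09]
predicted = [1.104, 1.116, 1.155, 1.086]

error_pct = mean_absolute_percentage_error(measured, predicted)
print(f"error: {error_pct:.2f} percent, accuracy: {100.0 - error_pct:.2f} percent")
```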

Google, Facebook, and other operators of large datacenters have made big strides in improving energy efficiency. But Gao noted that the overall pace of PUE reduction has slowed due generally to diminishing returns and specifically to factors like limits on existing cooling technologies.

Hence, Google turned to machine learning as a way to squeeze the last bits of energy efficiency out of its existing datacenter infrastructure. The company's neural network model sifts through the enormous number of mechanical and electrical equipment combinations, along with control software and temperature set points, to find those that minimize datacenter PUE.

The need for simulation tools is growing as datacenters have become so complex that testing every combination of features to improve energy efficiency is unworkable. Given constraints like frequent fluctuations in IT load and weather and the need for stable datacenter operations, Gao reasoned that a mathematical framework could be used to train a datacenter energy efficiency model.

Concludes Gao: "A machine learning approach leverages the plethora of existing sensor data to develop a mathematical model that understands the relationships between operational parameters and the holistic energy efficiency." The resulting simulation "allows operators to virtualize the [datacenter] for the purpose of identifying optimal plant configurations while reducing the uncertainty surrounding plant changes."
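
The kind of "what if" simulation Gao describes can be sketched as a search over candidate plant settings, scoring each with a predictive model instead of trying it on live equipment. In this sketch `toy_pue_model` stands in for a trained network; its formula, the parameter names, and the candidate ranges are all invented for illustration.

```python
from itertools import product


def toy_pue_model(setpoint_c: float, towers_running: int,
                  it_load_kw: float, outside_temp_c: float) -> float:
    """Hypothetical stand-in for a trained model; not derived from real data."""
    ideal_setpoint = 5.0 + 0.2 * outside_temp_c
    ideal_towers = it_load_kw / 500.0
    return (1.10
            + 0.001 * (setpoint_c - ideal_setpoint) ** 2
            + 0.005 * abs(towers_running - ideal_towers))


def best_configuration(model, setpoints, tower_counts,
                       it_load_kw: float, outside_temp_c: float):
    """Return the (setpoint, towers) pair with the lowest predicted PUE."""
    return min(
        product(setpoints, tower_counts),
        key=lambda cfg: model(cfg[0], cfg[1], it_load_kw, outside_temp_c),
    )


# Conditions the operator cannot control are held fixed for the simulation.
best = best_configuration(
    toy_pue_model,
    setpoints=range(6, 13),    # candidate chilled-water set points, in Celsius
    tower_counts=range(1, 6),  # candidate numbers of cooling towers running
    it_load_kw=1500.0,
    outside_temp_c=20.0,
)
print("lowest predicted PUE at (setpoint, towers) =", best)
```

Exhaustive search is workable only for a handful of settings. With many interacting controls the number of combinations grows quickly, which is one reason a fast predictive model is attractive in the first place.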

There is one caveat to the Google energy initiative: Gao stressed that machine-learning applications are only as good as the quantity and quality of the data analyzed.

George Leopold has written about science and technology for more than 30 years, focusing on electronics and aerospace technology. He previously served as executive editor of Electronic Engineering Times. Leopold is the author of "Calculated Risk: The Supersonic Life and Times of Gus Grissom" (Purdue University Press, 2016).