Rackspace OnMetal Gives Bare Metal Oomph, Cloud Flexibility

When is a cloud not a cloud? When it is really dedicated hosting with a new name slapped on it. Rackspace Hosting, which made enough of a mark in hosting to go public and which was wise enough to start the OpenStack cloud controller project with NASA four years ago, has been peddling true cloud servers for the past several years, at first using a homegrown controller that wraps around the XenServer hypervisor. Now, Rackspace is moving into the next – and perhaps ultimate – phase of cloud computing by delivering bare metal servers that can be provisioned as if they were cloudy server slices.

The new service that Rackspace has cooked up is called OnMetal Cloud Servers, and it will be an option that customers can choose right beside the XenServer-powered regular Cloud Servers. Ev Kontsevoy, the product manager behind the OnMetal service, says that it came about because there are times when running in a virtualized environment atop a full-on hypervisor makes it difficult – if not impossible – to get predictable and consistent performance out of distributed applications. Server virtualization might be great for driving up utilization on servers, but it also drives up unpredictability in terms of latencies between virtual nodes in a virtual cluster as well as resource availability within each virtual machine. Rackspace hosts a number of its own services, including its ObjectRocket implementation of the MongoDB NoSQL data store, on bare metal machines, and Kontsevoy tells EnterpriseTech that Rackspace was looking for some way to differentiate itself from other cloud providers while at the same time enterprise customers with scale-out workloads were looking for bare instead of virtualized metal. And thus the OnMetal service was born.

The funny bit about this differentiated service is that it is based on open software and hardware. And in another interesting twist of words, the bare metal provisioning is actually based on the OpenStack Ironic project, which has been in the works for the past couple of years to allow the OpenStack cloud controller to provision compute, storage, and networking for bare metal machines as well as for VMs running atop hypervisors like KVM and Xen.

"The whole thing can be replicated because it is all open source," says Kontsevoy. "That is consistent with the Rackspace strategy of not differentiating with nuts and bolts, but with support."

Kontsevoy says that the Ironic project was in need of some work and that Rackspace has stepped up its contributions to the effort – along with other contributors, of course – to make it suitable for managing the deployment of bare metal machines. The SoftLayer division of IBM, which was an independent company until last year, sells a mix of cloud slices and bare metal servers, and lots of its customers prefer bare metal because they are running oil and gas simulations or online games where the virtualization tax and the unpredictability of performance are an issue. And thus, SoftLayer had long since created its own controller, called the Infrastructure Management System, to handle bare metal provisioning and to wrap around the OpenStack controller that in turn manages XenServer for virtual machine slices. OnMetal will bring Rackspace on par with its cross-state rival SoftLayer on the bare-metal front, and ahead of Amazon Web Services, Google Compute Engine, and Microsoft Azure, none of which have bare metal servers.

The OnMetal service starts with Rackspace's own variants of servers that are inspired by the Open Compute Project started by Facebook three years ago. Kontsevoy was not sure at press time which precise Open Compute servers were underneath the OnMetal service, but he said he believed they had three X86 nodes in an enclosure. (You can see a report on Rackspace's OCP designs from January at this link, and it looks like it might be a variant of the Facebook "Winterfell" server.)

The OnMetal service, like other bare metal clouds that will no doubt follow if this idea takes off, seeks to get rid of the "noisy neighbor" issues on shared infrastructure. You can be your own noisy neighbor, so this is not, strictly speaking, about multi-tenancy even though the problem is usually posed in that fashion. The issue is that operating systems are greedy and solipsistic bits of code – an operating system inside of a virtual machine still thinks it has an entire machine to itself – and balancing the needs of 10 to 40 virtual machines on a single server is a tough juggling act for any hypervisor. Many modern workloads scale across server nodes rather than inside of them, so the smart thing to do is to get rid of the hypervisor and figure out how to do the same provisioning and monitoring on bare metal that is done for hypervisors and their virtual machines.

Ironic, isn't it? We have come all this way with server virtualization, and in the end, what we might end up with is Docker containers and perhaps someday their Windows equivalent running on bare metal. (This is how Google does it, more or less, and that is usually a pretty good predictor of the future in hyperscale computing.) And for a lot of workloads, there may be no container at all.

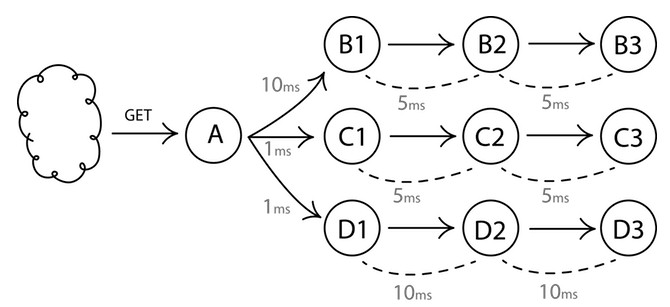

In a blog post, Kontsevoy explains how the noisy neighbor problem is compounded on a cluster of virtual nodes. In a distributed application, a Web page might be dependent on pulling data from nine different servers running in bare metal mode, thus:

But on a public cloud, that latency is not a maximum of 21 milliseconds, as you might calculate by adding up the latencies on the bottom request. Because of the noisy neighbor problem on virtual machines running on public clouds (as well as the uncertainty about what specific hardware is being used underneath those slices), the latencies are not constant, and adjacent virtual machines can affect the performance of your virtual machines. So response time is something you constantly have to measure because you can't do it once and assume it stays the same. Because response time is probabilistic instead of deterministic, companies then overprovision, either the size of their virtual machines or the number of them. In some cases, clever companies are firing up many virtual machines, running a quick benchmark to see which ones are the most stable, and then only deploying applications on those nodes. This is obviously very complex, and it is one reason why some companies move from colocation or dedicated hosting to the cloud and then back again. But when they do that, these companies abandon elasticity, the ability for capacity to rise and fall as needed.
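To make the compounding effect concrete, here is a minimal Python sketch of the two ideas in the paragraph above: how the odds of hitting a slow node grow with fan-out, and how a "benchmark and keep the stable ones" filter might look. Every number and name in it (the 1 percent slow-call rate, the `vm-a` style node labels, the sample latencies) is a hypothetical chosen for illustration, not a figure from Rackspace, and the probability formula assumes the backend calls are independent, which real noisy neighbors may not be.

```python
import statistics
from typing import Dict, List


def prob_page_hits_slow_node(p_slow: float, fanout: int) -> float:
    """Chance that at least one of `fanout` backend calls is slow.

    Assumes each call is independently slow with probability `p_slow`.
    """
    return 1.0 - (1.0 - p_slow) ** fanout


def pick_stable_nodes(samples_ms: Dict[str, List[float]], keep: int) -> List[str]:
    """Rank nodes by the standard deviation of their benchmark latencies
    (most stable first) and return the `keep` best."""
    ranked = sorted(samples_ms, key=lambda node: statistics.pstdev(samples_ms[node]))
    return ranked[:keep]


# Hypothetical benchmark latencies, in milliseconds, for three freshly booted VMs.
samples = {
    "vm-a": [2.0, 2.1, 2.0, 2.2],
    "vm-b": [2.0, 9.5, 2.1, 14.0],  # a noisy neighbor shows up as wide swings
    "vm-c": [2.3, 2.2, 2.4, 2.3],
}

# A page that fans out to nine servers, each slow 1 percent of the time.
page_risk = prob_page_hits_slow_node(0.01, 9)
stable = pick_stable_nodes(samples, keep=2)

print(f"chance a page load touches a slow node: {page_risk:.1%}")
print("nodes worth keeping:", stable)
```

Under these assumptions, a 1 percent hiccup rate per server turns into a bit under 9 percent of page loads once nine servers are involved, which is why a single measurement is not enough and why the filtering trick is tempting despite its complexity.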

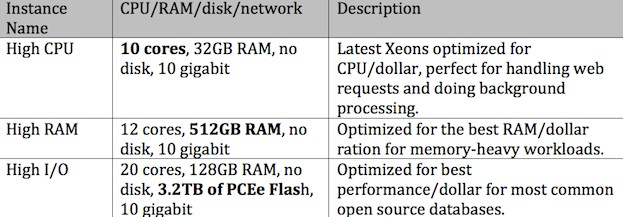

To start, the OnMetal service will come with three different server configurations:

The OnMetal servers do not have any spinning disks and they employ external cooling so that fans are not necessary, either. (Rackspace did not say what kind of cooling was employed.) But the idea is that removing all of the spinning devices from the machine eliminates heat and vibration, which in turn makes the server more reliable and long-lived. Presumably there are base flash units not shown in the configurations above that are used to store operating system and application code. All of the machines use 10 Gb/sec Ethernet ports and switches to link them together.

The OnMetal service is debuting in the Rackspace datacenter in Northern Virginia and is in a limited beta program for now, which you can sign up for here. Kontsevoy says that the service will be generally available next month, and that pricing information is not being released as yet. But he did tell EnterpriseTech that the price/performance of the OnMetal service would be "by far" better than that of virtual slices on the public cloud. You will have to buy a whole machine, but the amount of work you will be able to push through it will be so much higher that the bang for the buck will be better and you will have to buy fewer physical instances than you would virtual instances.

This all presupposes, of course, that you have a workload that can drive utilization very high on a bare metal machine. Many applications cannot do this, which is why we ended up going down the server virtualization road in the first place. Google doesn't use containers on its infrastructure because it likes adding layers of complexity, but rather because it needs to multithread workloads across clusters to drive up utilization across time and space, which drives down costs. And that is the best reason to believe that in the long run, Rackspace will offer some form of lightweight container on top of OnMetal.

It is also not hard to imagine OnMetal instances with Nvidia Tesla, Intel Xeon Phi, and other kinds of accelerators hanging off them, and perhaps even instances that employ faster InfiniBand networking.