Mellanox Cranks InfiniBand Bandwidth Up To 100 Gb/sec

InfiniBand network equipment supplier Mellanox Technologies had been hinting that it would get its first 100 Gb/sec products out the door in late 2014 or early 2015, and it turns out that this will happen sooner rather than later. The timing is not accidental. Mellanox wants its next jump in InfiniBand bandwidth, and the drop in latency that comes with it, to coincide with Intel's next big processor announcement, which is widely expected in the fall, just in time for end-of-year cluster buying.

Mellanox is unveiling the new Switch-IB chip for 100 Gb/sec InfiniBand at the International Supercomputing Conference (ISC'14) in Leipzig, Germany, this week, but don't think InfiniBand is only for parallel clusters running technical simulations. Microsoft has used InfiniBand as the underpinning of its Bing Maps service, and a number of public cloud vendors have deployed it to support simulation and analytics workloads. Database appliances and clustered storage arrays often use InfiniBand as an internal fabric linking multiple server or storage nodes, even if these devices talk to the outside world using Ethernet, Fibre Channel, or other protocols.

A case can always be made for sticking with Ethernet in the datacenter because it is the volume leader and it is what most companies know. But the counter case can always be made that InfiniBand usually offers the highest bandwidth and the lowest latency at any given time, no matter how much technology moves over from InfiniBand to Ethernet. Mellanox itself has been narrowing the gap between Ethernet and InfiniBand in its most recent generations with its SwitchX-2 ASICs, which are designed to run both InfiniBand and Ethernet protocols at 40 Gb/sec and 56 Gb/sec.

But with the Switch-IB ASICs being previewed at ISC'14 this week, Mellanox has created a chip that speaks only InfiniBand rather than trying to handle both protocols. The reason, explains Gilad Shainer, vice president of marketing at Mellanox, is that supporting both protocols on a SwitchX chip always means making sacrifices on the InfiniBand side. Ethernet brings its own complexity and overhead, because the chip has to support protocols such as BGP and MLAG, among others, and that overhead hurts the performance of the InfiniBand side of the chip. As it does from time to time, Mellanox wants to push latency down as it pushes bandwidth up to 100 Gb/sec for the InfiniBand protocol, and that is why it is announcing a new Switch-IB chip this week rather than a SwitchX-3.

"Because it is InfiniBand-only, we can pack more ports into it and reduce the latency by almost half," says Shainer. Looking ahead, Shainer confirmed that Mellanox will deliver other SwitchX chips, presumably to be called SwitchX-3, that will support both InfiniBand and Ethernet protocols.

The Switch-IB ASIC is the companion to the chips used in the Connect-IB host adapters that Mellanox has already put into the field. Those Connect-IB chips support two 56 Gb/sec Fourteen Data Rate (FDR) InfiniBand ports, and Shainer says they laid the groundwork for the move to 100 Gb/sec Enhanced Data Rate (EDR) InfiniBand on the server side. (It is not much of a stretch to convert one of these Connect-IB adapters into a single-port 100 Gb/sec device, but there is no point in doing so until there is a 100 Gb/sec switch to drive it.) Mellanox also demonstrated back in March that it can manufacture copper, optical, and silicon photonics cables capable of handling 100 Gb/sec traffic. With the Switch-IB ASIC, the set will be complete and the 100 Gb/sec rollout can begin in earnest.

The Switch-IB chip has 144 SerDes (network speak for serializer/deserializer) circuits, with lane speeds ranging from 1 Gb/sec to 25 Gb/sec. Ganging four lanes together makes a port, which is what gets you to the top speed of 100 Gb/sec per port, and 144 lanes divided into groups of four works out to 36 ports.

The SwitchX-2 chip, which was used to implement 40 Gb/sec and 56 Gb/sec InfiniBand as well as 10 Gb/sec and 40 Gb/sec Ethernet, also had 144 PHY transceivers, with speeds ranging from 1 Gb/sec up to 14 Gb/sec (which is where FDR gets its name). The SwitchX-2 ASIC offered an aggregate switching bandwidth of 4.03 Tb/sec, and a port-to-port hop running the InfiniBand protocol at 56 Gb/sec took 200 nanoseconds.
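The lane arithmetic in the two paragraphs above can be checked with a few lines of Python. This is a minimal sketch under our own assumptions: every port is a 4X port of exactly four lanes, the lane rates are the nominal 14 and 25 Gb/sec figures, and the aggregate switching figure counts traffic in both directions of each full-duplex port. Under those assumptions the quoted 4.03 Tb/sec and 7.2 Tb/sec aggregates fall out directly. The function name `port_layout` is ours, not part of any Mellanox SDK.

```python
# Lane-to-port arithmetic for the SwitchX-2 and Switch-IB ASICs.
# Assumptions (ours): 4X ports of exactly four lanes, nominal lane rates,
# and an aggregate figure that counts both directions of every port.

LANES_PER_PORT = 4  # a 4X InfiniBand port gangs four SerDes lanes


def port_layout(serdes: int, lane_gbps: float) -> tuple[int, float, float]:
    """Return (ports, Gb/sec per port, aggregate Tb/sec in both directions)."""
    ports = serdes // LANES_PER_PORT
    port_gbps = LANES_PER_PORT * lane_gbps
    # Full duplex: each port carries port_gbps in each direction.
    aggregate_tbps = ports * port_gbps * 2 / 1000
    return ports, port_gbps, aggregate_tbps


if __name__ == "__main__":
    chips = [("SwitchX-2 (FDR)", 144, 14), ("Switch-IB (EDR)", 144, 25)]
    for name, serdes, lane_gbps in chips:
        ports, port_gbps, agg = port_layout(serdes, lane_gbps)
        print(f"{name}: {ports} ports x {port_gbps:g} Gb/sec, {agg:.3f} Tb/sec aggregate")
```

Both chips come out at 36 ports; only the per-lane rate changes, which is why the port count stays put while the aggregate climbs from about 4.03 to 7.2 Tb/sec.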

With the Switch-IB chip, bandwidth is jacked up to 7.2 Tb/sec and a port-to-port hop takes a mere 130 nanoseconds. That is a 78.7 percent increase in bandwidth and a 35 percent reduction in latency. The Switch-IB chip can process up to 5.4 billion packets per second. In its first instantiation, the Switch-IB chip will drive up to 36 EDR ports in a 1U switch, and a modular chassis design will be able to push 648 of those EDR ports. The 100 Gb/sec ports burn a little hotter, but the power per gigabit is lower. A 36-port switch running at 56 Gb/sec burned about 55 watts, or about 36.7 gigabits per second per watt. The new Switch-IB ASIC, by contrast, burns 83 watts across its 36 ports running at EDR speeds, which works out to 43.4 gigabits per second per watt.
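The generation-over-generation percentages and the power efficiency figures are simple ratios, and it is easy to get the direction of a percentage wrong. The sketch below recomputes them from the numbers quoted in the article. The helper names `pct_change` and `gbps_per_watt` are hypothetical, and efficiency follows the article's convention of summing one direction of port bandwidth (36 ports times the port speed) against the stated power draw.

```python
# Recompute the generation-over-generation figures quoted in the article.
# Helper names are hypothetical; the inputs are the article's numbers.


def pct_change(old: float, new: float) -> float:
    """Percent change from old to new; negative means a reduction."""
    return (new - old) / old * 100


def gbps_per_watt(ports: int, port_gbps: float, watts: float) -> float:
    """Port bandwidth (one direction, summed over ports) per watt."""
    return ports * port_gbps / watts


bandwidth_change = pct_change(4.03, 7.2)     # aggregate Tb/sec, SwitchX-2 -> Switch-IB
latency_change = pct_change(200, 130)        # port-to-port ns, SwitchX-2 -> Switch-IB
fdr_efficiency = gbps_per_watt(36, 56, 55)   # 36 FDR ports at about 55 W
edr_efficiency = gbps_per_watt(36, 100, 83)  # 36 EDR ports at 83 W

if __name__ == "__main__":
    print(f"bandwidth: {bandwidth_change:+.1f}%")        # +78.7%
    print(f"latency:   {latency_change:+.1f}%")          # -35.0%
    print(f"FDR: {fdr_efficiency:.1f} Gb/sec per watt")  # 36.7
    print(f"EDR: {edr_efficiency:.1f} Gb/sec per watt")  # 43.4
```

The latency line is the one to watch: dropping from 200 to 130 nanoseconds is a 35 percent reduction. Saying the old hop took about 1.54 times as long as the new one is a different statement, and the two should not be conflated.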

Incidentally, the software development kit for the Switch-IB ASIC also works with the SwitchX-2 predecessor. (This is important for Mellanox itself as well as its OEM customers who buy chips and make their own switches or embed them in other devices such as storage or database clusters.)

Although Mellanox never made a big deal about it, the SwitchX-2 ASIC could run either the InfiniBand or the Ethernet protocol, included a Fibre Channel gateway, and could, in theory, have been used to build a switch that spoke both InfiniBand and Ethernet at the same time, adjusting the protocol on each port as traffic came in. To our knowledge, Mellanox did not productize this, but it did let customers run Ethernet at 56 Gb/sec with a software upgrade, a useful side effect of the converged chip.

With the Switch-IB chip, Mellanox is including InfiniBand routing capability for the first time. The router functionality has several uses. First, it allows multiple InfiniBand subnets to be hooked together, so networks can scale from tens of thousands of nodes to hundreds of thousands of nodes across a single InfiniBand fabric. Second, the router allows security zones to be set up in the fabric using those subnets, which is functionality that both supercomputing and cloud customers want. And finally, the routing functions allow InfiniBand networks with different topologies to be linked together. InfiniBand supports fat tree, mesh, torus, and Dragonfly topologies. Perhaps the compute part of a cluster is on a fat tree and the storage is on a mesh or torus network, and the most efficient way to link different topologies, says Shainer, is through a router.
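One well-known reason a single subnet tops out in the tens of thousands of nodes is addressing: the InfiniBand specification reserves the 16-bit local identifiers (LIDs) 0x0001 through 0xBFFF for unicast, so a subnet has at most 49,151 unicast LIDs to hand out. The sketch below assumes non-blocking fat trees built from 36-port chips, single-port hosts, and one LID per host port and per switch (no LMC multipathing); under those assumptions a three-tier tree fits comfortably in one subnet while a four-tier tree does not. The 648-port chassis figure quoted earlier also matches the host count of a two-tier tree of 36-port chips, though we have not confirmed that this is how the chassis is wired internally. All function names are ours.

```python
# Fat-tree size versus the unicast LID space of one InfiniBand subnet.
# Assumptions (ours): non-blocking folded-Clos fat trees of 36-port chips,
# single-port hosts, one LID per host port and one per switch (LMC = 0).

RADIX = 36                 # ports per switch chip
MAX_UNICAST_LIDS = 0xBFFF  # unicast LIDs run 0x0001..0xBFFF, i.e. 49,151


def fat_tree_hosts(radix: int, tiers: int) -> int:
    """Hosts in a fat tree: lower tiers split ports half down, half up;
    the top tier points all of its ports down."""
    return 2 * (radix // 2) ** tiers


def fat_tree_switches(radix: int, tiers: int) -> int:
    """Switch chips in the same tree: (2 * tiers - 1) * (radix / 2) ** (tiers - 1)."""
    return (2 * tiers - 1) * (radix // 2) ** (tiers - 1)


def fits_in_one_subnet(radix: int, tiers: int) -> bool:
    """True if every host port and switch can get its own unicast LID."""
    lids = fat_tree_hosts(radix, tiers) + fat_tree_switches(radix, tiers)
    return lids <= MAX_UNICAST_LIDS


if __name__ == "__main__":
    for tiers in (2, 3, 4):
        hosts = fat_tree_hosts(RADIX, tiers)
        print(f"{tiers} tiers: {hosts:,} hosts, fits in one subnet: "
              f"{fits_in_one_subnet(RADIX, tiers)}")
```

Two tiers give 648 hosts and three give 11,664, both within one subnet; a four-tier tree would need room for 209,952 hosts, which only works by splitting the fabric into subnets joined by routers.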

The torus and mesh topologies, says Shainer, allow you to add nodes to a cluster easily, without changing the cabling on the existing nodes, but you pay for this with more hops and higher latencies between nodes. As such, torus interconnects suit applications where nodes near each other talk a lot but only infrequently shout across to the other side of the cluster. Fat tree and Dragonfly have lower latencies across the cluster, and fat tree is a little simpler to deploy in that it does not require adaptive routing. The Switch-IB ASIC supports a new topology called Dragonfly+, which has enhancements that Mellanox is in the process of filing patents to cover and which are related to speeding up the adaptive routing function that makes Dragonfly topologies possible. The Dragonfly+ implementation that Mellanox has come up with has half as many hops between nodes as the previous implementation.
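The hop-count trade-off Shainer describes can be made concrete by counting switch-to-switch links on minimal paths. The sketch below brute-forces the diameter and mean hop count of a k-ary n-dimensional torus (with wraparound links) and mesh (without), and compares them with the worst case for a fat tree, which is a trip up to the top tier and back down. The 8x8x8 size and the three-tier fat tree are illustrative picks, not matched for host count; the sketch ignores adaptive routing and congestion, and it does not model Dragonfly or Dragonfly+ at all. Function names are ours.

```python
# Hop counts on torus and mesh grids versus a fat tree.
# Counts switch-to-switch links on minimal paths only; sizes are
# illustrative, and adaptive routing and congestion are ignored.
from itertools import product


def axis_hops(a: int, b: int, k: int, wrap: bool) -> int:
    """Links between positions a and b along one dimension of size k."""
    d = abs(a - b)
    return min(d, k - d) if wrap else d


def grid_hop_stats(k: int, dims: int, wrap: bool) -> tuple[int, float]:
    """(diameter, mean hops over ordered pairs of distinct switches)."""
    nodes = list(product(range(k), repeat=dims))
    worst = total = 0
    for src in nodes:
        for dst in nodes:
            hops = sum(axis_hops(s, t, k, wrap) for s, t in zip(src, dst))
            worst = max(worst, hops)
            total += hops  # src == dst adds 0, so only distinct pairs count
    n = len(nodes)
    return worst, total / (n * (n - 1))


def fat_tree_worst_hops(tiers: int) -> int:
    """Worst case in a fat tree: up to the top tier and back down."""
    return 2 * (tiers - 1)


if __name__ == "__main__":
    torus = grid_hop_stats(8, 3, wrap=True)
    mesh = grid_hop_stats(8, 3, wrap=False)
    print(f"8x8x8 torus: diameter {torus[0]}, mean {torus[1]:.2f}")
    print(f"8x8x8 mesh:  diameter {mesh[0]}, mean {mesh[1]:.2f}")
    print(f"3-tier fat tree: worst case {fat_tree_worst_hops(3)}")
```

For the 8x8x8 torus the worst case is 12 links and the mean is about 6; the same grid without wraparound links rises to 21 and about 7.9, while a three-tier fat tree never needs more than 4. That gap is the latency price paid for the torus's easy expansion.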

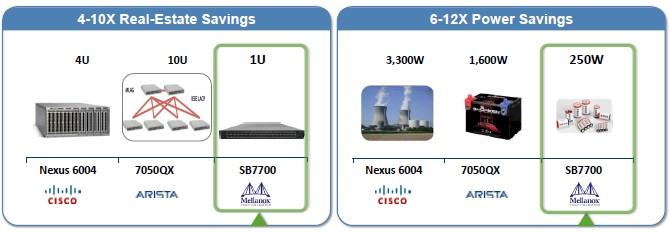

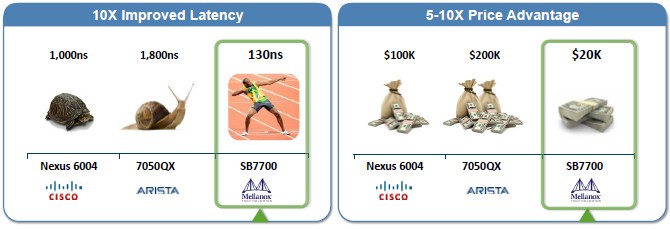

So how will the first Switch-IB switch from Mellanox, the SB7700, stack up against the current Ethernet incumbents in delivering an aggregate bandwidth of 7 Tb/sec? Mellanox presents the following comparisons with the Cisco Systems Nexus 6004 and Arista Networks 7050QX Ethernet switches, starting with rack space and power:

And here is how the latencies and the money stack up:

Obviously Cisco, Arista, and others will be fielding more compact and capacious Ethernet switches in the coming months to better compete with the likes of the Mellanox SB7700 InfiniBand switch, but it will be interesting to see how they price against a 36-port switch that costs $20,000. That price, by the way, is a little less than twice what Mellanox charges for a 36-port 56 Gb/sec InfiniBand switch. (Those prices do not include cables.)
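The pricing comparison reduces to cost per port and per gigabit. The article gives only the $20,000 price for the EDR switch and says it is a little less than twice the FDR price, so the sketch below treats half of $20,000 as a floor for the FDR switch rather than guessing its actual price. Variable names are ours, and the figures exclude cables, as in the article.

```python
# Cost per port and per gigabit for the $20,000 36-port EDR switch.
# The FDR price is not given; "a little less than twice" only tells us
# the FDR switch costs a bit more than half the EDR price.

EDR_PRICE = 20_000  # US dollars, 36-port EDR switch, cables excluded
PORTS = 36
EDR_GBPS, FDR_GBPS = 100, 56

edr_per_port = EDR_PRICE / PORTS               # dollars per port
edr_per_gbps = EDR_PRICE / (PORTS * EDR_GBPS)  # dollars per Gb/sec of port speed

# A floor on the FDR price, not the real price.
fdr_price_floor = EDR_PRICE / 2
fdr_per_gbps_floor = fdr_price_floor / (PORTS * FDR_GBPS)

# Upper bound on how much more EDR costs per gigabit than FDR.
max_premium = edr_per_gbps / fdr_per_gbps_floor - 1

if __name__ == "__main__":
    print(f"EDR: ${edr_per_port:,.0f} per port, ${edr_per_gbps:.2f} per Gb/sec")
    print(f"FDR: at least ${fdr_per_gbps_floor:.2f} per Gb/sec")
    print(f"EDR premium per Gb/sec: under {max_premium:.0%}")
```

Because the EDR switch costs less than twice as much while each port carries 100/56, or about 1.79 times, the bandwidth, EDR costs less than 12 percent more per gigabit than FDR, and the premium shrinks the further the real FDR price sits above the $10,000 floor.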

The Switch-IB ASIC has just gone into production, and Shainer says that switches will ship late in the third quarter. There's no word yet on when the Connect-IB adapters will be pushed up to 100 Gb/sec, but the odds are that this will be timed to Intel's "Haswell" Xeon E5 processor launch in the fall.