VXLAN Is The Future Of Networking In The Cloud

Cloud deployments depend on many network services such as monitoring, VPN services, advanced firewalling and intrusion prevention, load balancing, and traditional security and optimization policies. In a modern cloud data center that potentially supports hundreds of thousands of hosts, scaling and automating these network services is the cloud operator's most challenging task.

Fortunately, server virtualization has enabled automation of routine tasks, reducing the cost and time required to deploy a new application from weeks to minutes; however, reconfiguring the network for a new or migrated virtual workload can take days and cost thousands of dollars.

Scaling existing networking technology for multi-tenant infrastructures requires solutions that let virtual machines (VMs) communicate and migrate across Layer 3 (L3) boundaries without losing connectivity, while isolating hundreds of thousands of logical network segments and preserving each VM's existing IP and Media Access Control (MAC) addresses. This is complex enough to be impractical, so “pods” of servers are often dedicated to one set of workloads, which wastes capacity and causes unnecessary spending on supporting systems such as security and networking gear.
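The scale requirement is easy to quantify. A traditional 802.1Q VLAN tag carries a 12-bit segment ID, while VXLAN (discussed below) carries a 24-bit VXLAN Network Identifier. The short Python sketch below simply compares the two ID spaces; it assumes the usual reservation of VLAN IDs 0 and 4095 and treats every 24-bit value as usable.

```python
# Compare the segment-ID space of 802.1Q VLANs with that of VXLAN.
VLAN_ID_BITS = 12  # width of the 802.1Q VLAN ID field
VNI_BITS = 24      # width of the VXLAN Network Identifier (RFC 7348)

# VLAN IDs 0 and 4095 are reserved, leaving 4094 usable segments.
usable_vlans = 2**VLAN_ID_BITS - 2
# Assumption: every 24-bit VXLAN Network Identifier value is usable.
vxlan_segments = 2**VNI_BITS

print(f"usable VLAN segments: {usable_vlans:,}")
print(f"VXLAN segments:       {vxlan_segments:,}")
print(f"ratio:                {vxlan_segments // usable_vlans:,}x")
```

A ceiling of 4,094 segments falls far short of hundreds of thousands, whereas 2^24 (about 16.7 million) leaves ample headroom.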

These barriers get in the way of cloud consolidation projects. Either virtual data center designers accept that physical pods are now built for each class of workload, or they attempt to maintain impractical, complex network and security configurations, which are time consuming to build, prone to errors, and costly to maintain. Due to security policy requirements, Layer 2 (L2) limitations and L3 complexity, we are basically stuck with modern compute systems and a network architecture from the 1990s.

The Answer: Overlay Networks

Overlay network standards, such as Virtual Extensible LAN (VXLAN) and Network Virtualization using Generic Routing Encapsulation (NVGRE), make the network as agile and dynamic as the rest of the cloud infrastructure by enabling dynamic provisioning of network segments for cloud workloads. This can dramatically increase cloud resource utilization. Overlay networks provide much greater network flexibility and scalability, and make it possible to:

• Combine workloads within pods

• Move workloads across L2 domains and L3 boundaries with no extra work

• Seamlessly integrate advanced firewall appliances and application delivery controllers (ADCs)

Consider, for example, a service provider rolling out a public cloud offering in its large-scale data center. Compute and storage are virtualized and can be elastically provisioned throughout the data center. The cloud operator requires a Virtual Network Infrastructure (VNI) that satisfies the following requirements:

• Enable the tenants of the cloud offering to securely provision and configure their virtual networks without requiring additional infrastructure or involvement of the operator's IT/networking teams

• Enable creation of virtual network templates that can be cloned by different tenants

• Scale out and scale up (in terms of traffic processing rates) as the number of tenants, workloads, and/or servers grows over time

• Guarantee high availability under node, OS, and virtual network failures

• Provide the ability to develop (in-house or through a third party) and non-disruptively roll out new network functions or improvements of existing network functions.
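The template requirement, combined with per-tenant isolation, can be pictured as a small data model: a tenant-neutral template lists segments and their subnets, and cloning it for a tenant assigns each segment a fresh VXLAN Network Identifier, so tenants can even reuse identical address plans. The Python sketch below is hypothetical throughout (`NetworkTemplate`, `TenantNetwork`, `VniAllocator`, and `clone` are illustrative names, not part of any product); a real controller would also persist allocations and release them when networks are deleted.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class NetworkTemplate:
    """Hypothetical tenant-neutral blueprint: segment name -> CIDR."""
    name: str
    segments: dict[str, str]


@dataclass
class TenantNetwork:
    """One tenant's instance of a template: segment name -> (VNI, CIDR)."""
    tenant: str
    template: str
    segments: dict[str, tuple[int, str]] = field(default_factory=dict)


class VniAllocator:
    """Hands out sequential 24-bit VXLAN Network Identifiers (in memory only)."""

    def __init__(self, first: int = 1) -> None:
        self._next = count(first)

    def allocate(self) -> int:
        vni = next(self._next)
        if vni >= 2**24:
            raise RuntimeError("VNI space exhausted")
        return vni


def clone(template: NetworkTemplate, tenant: str, vnis: VniAllocator) -> TenantNetwork:
    """Instantiate a template for one tenant, giving each segment its own VNI.

    Tenants may reuse identical CIDRs because each segment is isolated in its own VNI.
    """
    net = TenantNetwork(tenant=tenant, template=template.name)
    for seg_name, cidr in template.segments.items():
        net.segments[seg_name] = (vnis.allocate(), cidr)
    return net


three_tier = NetworkTemplate(
    "three-tier",
    {"web": "10.0.1.0/24", "app": "10.0.2.0/24", "db": "10.0.3.0/24"},
)
vnis = VniAllocator(first=5000)
acme = clone(three_tier, "acme", vnis)
globex = clone(three_tier, "globex", vnis)
print(acme)
print(globex)
```

Both tenants end up with the same subnets but disjoint segment identifiers, which is what lets the overlay keep their traffic apart.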

VXLAN's Hidden Challenge

While VXLAN offers significant benefits in the scalability and security of virtualized networks, it has an unfortunate side effect: significant additional packet processing, which consumes CPU cycles and degrades network performance. Because VXLAN wraps each Ethernet frame in an additional encapsulation layer (an outer UDP/IP packet), traditional NIC offloads, which operate only on the outer headers, cannot be applied to the inner packet. The result is heavy consumption of expensive CPU resources and a significant reduction in network throughput.
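To see why the extra layer defeats conventional offloads, it helps to look at what a VXLAN tunnel endpoint (VTEP) adds to every frame. Per RFC 7348, the original Ethernet frame becomes the payload of an outer UDP datagram (destination port 4789), preceded by an 8-byte VXLAN header carrying a 24-bit VXLAN Network Identifier. A NIC that parses only the outer headers sees the inner TCP segment as opaque UDP payload. The Python sketch below builds and parses just the VXLAN header; the helper names are illustrative, and the 50-byte overhead figure assumes an IPv4 underlay with no outer VLAN tag.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid

# Outer headers added per packet (assumption: IPv4 underlay, no outer 802.1Q tag).
OUTER_ETH, OUTER_IPV4, OUTER_UDP, VXLAN_HDR = 14, 20, 8, 8


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags(8) reserved(24) | VNI(24) reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags byte followed by 24 reserved bits.
    # Word 2: VNI in the top 24 bits, low 8 bits reserved.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend a VXLAN header to an inner Ethernet frame.

    A real VTEP then carries the result inside an outer UDP/IPv4/Ethernet
    packet addressed to the remote VTEP; that step is not modeled here.
    """
    return vxlan_header(vni) + inner_frame


overhead = OUTER_ETH + OUTER_IPV4 + OUTER_UDP + VXLAN_HDR
print(f"encapsulation overhead per packet: {overhead} bytes")
```

Everything the NIC used to recognize (the TCP header, its checksum, the segment boundaries) now sits behind these extra 50 bytes, which is why offloads must be made tunnel-aware.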

For VXLAN to deliver real value, the resulting CPU overhead and throughput degradation must be eliminated. This can be achieved by offloading overlay network processing to hardware embedded in the network controller. This includes:

• Calculating checksums for both the outer and inner packets

• Performing large segment offload (LSO)

• Handling NetQueue steering to ensure that virtual machine traffic is distributed between different CPU cores in the most efficient manner
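To make the cost concrete, the Python sketch below performs two of the jobs listed above in software: the RFC 1071 Internet checksum (used for the inner and outer IPv4 header checksums and the inner TCP checksum) and splitting one large send into MSS-sized segments, which is what LSO moves to the NIC. The 1410-byte inner MSS is an assumption (1500-byte underlay MTU, IPv4 inner and outer headers, no TCP options), and the function names are illustrative.

```python
def internet_checksum(data: bytes) -> int:
    """RFC 1071 one's-complement checksum, as used by IPv4, TCP, and UDP."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add 16-bit big-endian words
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def segment(payload: bytes, mss: int) -> list[bytes]:
    """Split one large send into MSS-sized chunks (the work LSO offloads)."""
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


# Assumption: 1500-byte underlay MTU.
# Inner IP MTU = 1500 - 20 (outer IPv4) - 8 (UDP) - 8 (VXLAN) - 14 (inner Ethernet) = 1450.
INNER_MSS = 1450 - 20 - 20  # minus inner IPv4 and TCP headers -> 1410

large_send = bytes(64 * 1024)  # one 64 KiB write from a VM
segments = segment(large_send, INNER_MSS)

# Without offloads, the host CPU checksums every segment and builds an inner
# and an outer header for each one. Only the payload is checksummed here; the
# TCP header and pseudo-header are omitted for brevity.
checksums = [internet_checksum(seg) for seg in segments]
print(f"{len(segments)} segments from one {len(large_send)}-byte send")
```

Every one of those segments needs its own checksums and headers, and this per-packet work is exactly what an overlay-aware adapter takes off the CPU.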

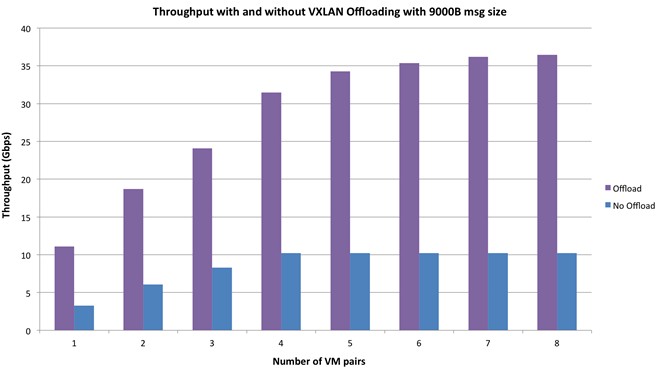

The impact of these hardware offloads on throughput is dramatic, as the graph shows (source: Plumgrid, running KVM on Ubuntu 12.04 with kernel version 3.14-rc8, a fixed message size of 9000B, and mlx4 VXLAN offload support).

Throughput comparison with and without VXLAN hardware offloads

As the figure above shows, a 40GbE adapter with overlay network hardware offloads achieves nearly line-rate performance (36 Gb/s). By contrast, a 40GbE adapter without hardware offload of overlay packet processing is limited to around 10 Gb/s.
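Expressed against the 40 Gb/s line rate, using only the figures quoted above (the 10 Gb/s value is approximate):

```latex
\[
\frac{36\ \text{Gb/s}}{40\ \text{Gb/s}} = 90\%,\qquad
\frac{10\ \text{Gb/s}}{40\ \text{Gb/s}} \approx 25\%,\qquad
\frac{36\ \text{Gb/s}}{10\ \text{Gb/s}} \approx 3.6\times
\]
```

In other words, the offloads raise throughput from roughly a quarter of line rate to nine tenths of it.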

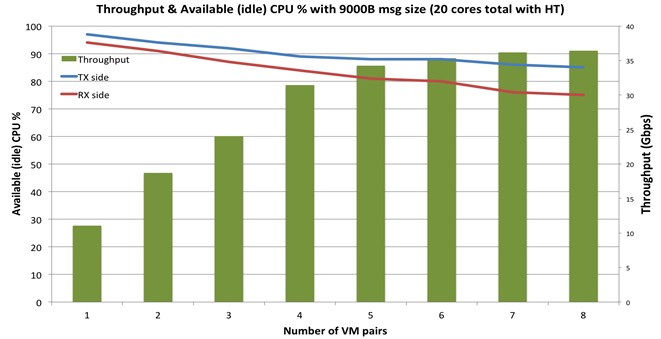

Furthermore, receive side scaling (RSS) can be enabled to steer and distribute traffic based on the inner packet, allowing multiple cores to handle the traffic; traditional NICs can steer based only on the outer packet. This lets the interconnect run at nearly full line rate, rather than at the reduced bandwidth seen when VXLAN is used without RSS. The result is more efficient CPU use: although CPU utilization rises as the number of VMs increases, CPU utilization relative to the achieved throughput (with a fixed 9000B message size) remains nearly constant as throughput grows to 36 Gb/s, as shown in the figure above.
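The difference between outer-only and inner-header steering can be sketched with the Toeplitz hash that RSS implementations commonly use. In the Python example below, 32 hypothetical TCP flows run between VMs behind the same pair of VTEPs. Hashing only the outer headers gives every flow the same result, so all traffic lands on one queue (and one core); hashing each flow's inner 4-tuple spreads the flows across queues. Assumptions: the key is the 40-byte key from Microsoft's RSS documentation (used by many drivers as a default), the queue is picked by a simple modulo rather than a NIC indirection table, and the sender uses a fixed outer UDP source port. RFC 7348 recommends deriving that port from a hash of the inner headers, which also adds entropy for outer-only hashing.

```python
import ipaddress
import struct

# Assumption: the widely published default RSS key; real drivers may use another.
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0d0ca2bcb"
    "ae7b30b477cb2da38030f20c6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """Toeplitz hash: for each set input bit i, XOR in key bits i..i+31."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    result = 0
    for i in range(len(data) * 8):
        if data[i // 8] & (0x80 >> (i % 8)):
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def tuple_bytes(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """RSS input for IPv4 TCP/UDP: src addr, dst addr, src port, dst port."""
    return (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + struct.pack("!HH", src_port, dst_port)
    )


def queue_for(data: bytes, num_queues: int) -> int:
    # Simplification: real NICs index an indirection table with low hash bits.
    return toeplitz_hash(RSS_KEY, data) % num_queues


NUM_QUEUES = 8
# Hypothetical inner flows: 32 TCP connections from one VM to another.
flows = [("10.0.0.5", "10.0.1.9", 40000 + n, 80) for n in range(32)]

# Outer-only steering: every flow carries the same VTEP-to-VTEP outer tuple
# (assumption: fixed outer UDP source port 49152).
outer = tuple_bytes("192.0.2.1", "192.0.2.2", 49152, 4789)
outer_queues = {queue_for(outer, NUM_QUEUES) for _ in flows}

# Inner-header steering: hash each flow's own 4-tuple.
inner_queues = {queue_for(tuple_bytes(*f), NUM_QUEUES) for f in flows}

print(f"outer-only steering uses {len(outer_queues)} queue(s)")
print(f"inner-header steering uses {len(inner_queues)} queue(s)")
```

Because the Toeplitz hash is deterministic per flow, inner-header steering also keeps each flow's packets in order on a single core while still spreading different flows across cores.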

Integration with Cloud Management and Orchestration

OpenStack integration is essential to make overlay networks simple to use; platforms that aim for true flexibility and scalability need overlay networks that can be deployed and managed efficiently. OpenStack's Neutron networking service, with its plug-in architecture, is a networking abstraction layer that offers such integration, allowing a number of open source tools and commercial products to act as networking back-ends.

Modular Layer 2 (ML2), a newer Neutron plug-in, is a framework that allows OpenStack networking to simultaneously use the variety of L2 networking technologies found in complex real-world data centers, and it simplifies integrating overlays into physical and virtual networks.

New plug-in functionality in the driver can enable customers to set up VXLAN, enhance security for para-virtualized vNICs, and achieve the best performance using Single Root I/O Virtualization (SR-IOV) with the same setup (and even the same VM).
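As a concrete illustration of the ML2 setup described above, the Python sketch below uses the standard `configparser` module to write a minimal `ml2_conf.ini` fragment that makes VXLAN the tenant network type and loads both the Open vSwitch and SR-IOV mechanism drivers. The option names (`type_drivers`, `tenant_network_types`, `mechanism_drivers`, `vni_ranges`) are ML2 settings, but the values are illustrative assumptions; a working deployment also needs agent-side settings (such as local tunnel endpoint addresses and SR-IOV device mappings) that are not shown.

```python
import configparser
import io

# Illustrative ml2_conf.ini fragment; values are assumptions, not recommendations.
config = configparser.ConfigParser()
config["ml2"] = {
    "type_drivers": "flat,vlan,vxlan",           # segment types Neutron may create
    "tenant_network_types": "vxlan",             # default type for tenant networks
    "mechanism_drivers": "openvswitch,sriovnicswitch",  # para-virtual and SR-IOV ports
}
config["ml2_type_vxlan"] = {
    # Range of VXLAN Network Identifiers available for tenant networks.
    "vni_ranges": "1:1000",
}

buf = io.StringIO()
config.write(buf)
print(buf.getvalue())
```

Listing both mechanism drivers is what allows a para-virtualized vNIC and an SR-IOV virtual function to coexist under the same Neutron configuration.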

Overlay networks aim to eliminate the last constraint on moving workloads seamlessly across racks, host clusters, and data centers. This is an important step toward lowering the cost of data center infrastructure. Given the cost and complexity of deploying and managing virtual servers on traditional networks, overlay network protocols can reduce the time and cost of deploying and managing a virtual workload throughout its lifecycle.

VXLAN achieves these goals, but at the cost of significant performance penalties introduced by the VXLAN encapsulation/decapsulation process and by the loss of existing hardware offloads. Exploiting the full potential of VXLAN requires hardware that properly addresses these performance challenges: a network controller that not only supports VXLAN but also provides the critical hardware offloads.

An adapter with overlay network hardware offloads addresses the unintended degradation in both CPU usage and throughput seen when using VXLAN. With such an adapter, the scalability and security benefits of VXLAN can be realized without a significant loss of throughput and without a dramatic increase in CPU overhead.

Eli Karpilovski manages cloud market development at Mellanox Technologies, building the partner ecosystem for the SDN and OpenStack architectures. In addition, he serves as the Cloud Advisory Council chairman. Previously, he served as product marketing manager for the HCA Software division at Mellanox from 2010 to 2011. Karpilovski holds a bachelor of science in engineering from the Holon Institute of Technology in Holon, Israel.