Ceph Clustered Storage Dons A Red Hat

Red Hat is the world's largest supplier of support services for open source software, and it has delivered its first update to the Ceph storage software it acquired back in April to give it a better footing in the OpenStack cloud market. The company is adding data tiering to the storage software and also a new method of replicating data that is less expensive, allowing Ceph to be used for cold storage.

Red Hat acquired Inktank for $175 million in cash. Inktank was commercializing the open source Ceph block and object storage software, much as Red Hat has famously commercialized the open source Linux operating system and the JBoss middleware stack it subsequently acquired. Ceph was started at the University of California Santa Cruz in 2004 and was opened up two years later. Support for the Ceph client (the thing that can ask for objects from the distributed storage cluster) was adopted into the mainline Linux kernel in 2010, integration with OpenStack and KVM followed in 2011, and Inktank was formed to offer commercial support for Ceph in 2012. Since then, support for the Xen hypervisor and the CloudStack cloud controller, both largely supported by Citrix Systems, was added, and Ceph Enterprise, the first commercial-grade edition of the distributed object and block storage, was launched in October 2013.

This is not Red Hat's first foray into clustered storage. Back in October 2011, seeking to get a piece of the storage action, Red Hat paid $136 million to acquire Gluster, which had created an open source clustered file system that competed in some respects with the open source Lustre and IBM General Parallel File System (GPFS) clustered file systems that have their origins in the supercomputing market. Red Hat has thousands of Gluster users, some using the open source GlusterFS and others using the commercial-grade Red Hat Storage Server, but clearly the company needed an object and block storage product that fit in better with its OpenStack aspirations and Ceph was already popular with the OpenStack crowd.

The one thing that Ceph does not yet have is a file system layer that runs atop the distributed storage. Ross Turk, director of marketing and community for the Ceph product at Red Hat, tells EnterpriseTech that the CephFS file system, which has been pretty much done in terms of development for the past three to four years, is not yet ready for production. This is not because the feature set is incomplete or the code isn't ready, but rather because Inktank, which was focusing on the object and block storage commonly used by cloudy applications, did not have time for the "bug squash" needed to get it ready for enterprise deployments. There is no scheduled release for production-grade CephFS.

The Ceph community has put out six releases since July 2012, with the "Firefly" release coming out in May this year. As with other open source projects, the open source version has more frequent updates, but the enterprise edition that has commercial-grade support is updated less frequently because most enterprise customers can't handle the disruptions of updating their software several times a year. This is particularly true with storage and database software, where making any changes really makes companies jumpy. Ceph Enterprise V1.1 came out last fall based on the "Dumpling" release of the open source Ceph stack, and Ceph Enterprise V1.2 is based on the Firefly release that came out two months ago. In general, the Ceph community does a release every three months and Red Hat will do an enterprise release every 18 months, says Turk.

At its heart, Ceph is a distributed object storage system, and it includes the RADOS Block Device (RBD) driver that allows an operating system to mount Ceph as if it were a block device like a disk array. Either way, data is automatically replicated (usually in three copies) and striped across the storage cluster. Up until now, a Ceph storage cluster replicated and striped three sets of data as it came into the cluster, which is great inasmuch as it provides high durability for the data as well as quicker recovery if some portion of the data goes missing because a disk or flash drive, or even a whole server node, crashes. But this also means that customers are storing three copies of data, which is necessary for hot, mission-critical data but which is perhaps economically undesirable for data that is cold or even warm.

Ceph now has erasure coding, a technique popular in many storage applications that adds chunks of parity data to chunks of actual data so that lost data can be recreated in the event of a crash. Ceph Enterprise V1.2 has a pluggable architecture to allow for different erasure coding techniques, but generally speaking, Turk says that this approach will allow Ceph customers to store twice as much data in Ceph as the triple-replicated pooling method does. The trade-off is that recovery of lost data takes time and compute resources.
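To make the capacity claim concrete, here is a minimal Python sketch, not Ceph's actual implementation. It compares usable capacity under three-way replication with a hypothetical erasure coding profile of four data chunks plus two parity chunks, chosen only because it yields the doubling Turk describes. It then shows the parity idea in its simplest form, a single XOR parity chunk that can rebuild one lost chunk. The function names and the 300 TB figure are illustrative assumptions; production erasure codes such as Reed-Solomon tolerate more than one lost chunk.

```python
from functools import reduce


def usable_replicated(raw_tb: float, copies: int) -> float:
    """Usable capacity when every object is stored `copies` times."""
    return raw_tb / copies


def usable_erasure(raw_tb: float, k: int, m: int) -> float:
    """Usable capacity with k data chunks and m parity chunks per object."""
    return raw_tb * k / (k + m)


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode_parity(data_chunks: list) -> bytes:
    """Toy code with a single parity chunk: the XOR of all data chunks."""
    return reduce(xor_bytes, data_chunks)


def recover_chunk(surviving_chunks: list, parity: bytes) -> bytes:
    """Rebuild the one missing data chunk from the survivors plus parity."""
    return reduce(xor_bytes, surviving_chunks, parity)


# Hypothetical cluster with 300 TB of raw disk.
raw_tb = 300
replicated_tb = usable_replicated(raw_tb, copies=3)  # three full copies
erasure_tb = usable_erasure(raw_tb, k=4, m=2)        # assumed 4+2 profile

# Lose the middle chunk, then rebuild it from the other two plus parity.
chunks = [b"ceph", b"data", b"cold"]
parity = encode_parity(chunks)
rebuilt = recover_chunk([chunks[0], chunks[2]], parity)
```

Under these assumptions the same raw disk holds twice as much user data with erasure coding, but rebuilding a chunk means reading every surviving chunk and recomputing, which is the time and compute cost noted above.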

The latest Ceph Enterprise also has cache tiering capabilities to go along with the erasure coding, allowing for the hottest data to automatically be moved to the highest-performing disk or flash media in a Ceph cluster and the coldest data to be moved to the slowest disks.

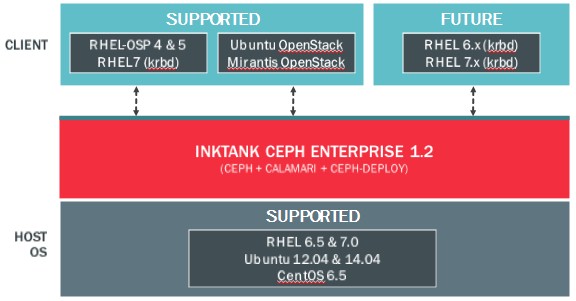

Along with the updated Ceph, Red Hat is also putting out an updated Calamari V1.2 management console; the code for the Calamari console was just opened up by Red Hat in May after the acquisition and was previously a for-fee product from Inktank that drove its revenues. Ceph Enterprise gets support for Red Hat Enterprise Linux 7 with this V1.2 update; the software had already run on RHEL 6.5 and its CentOS 6.5 clone as well as Ubuntu 12.04 and 14.04, which are the Long Term Support (LTS) releases from Canonical. On the client side, Red Hat's implementation of OpenStack (using the Cinder block driver) as well as the krbd virtual block device in RHEL 7 can directly access block data stored in Ceph, and so can the OpenStack distributions from Mirantis and Canonical (also through Cinder). In the future, RHEL 6 clients will be supported through the krbd kernel module and so will future releases of RHEL 7. On the object side, Ceph supports the S3 APIs for object storage used by Amazon Web Services as well as the Swift APIs used in OpenStack. Because the CephFS file system is not yet ready for primetime, Ceph does not have endpoints for CIFS or NFS, but someday it will.

In addition to rolling out the Ceph Enterprise V1.2, Red Hat is providing pricing for two different packages of tech support for Ceph. The jumpstart edition of Ceph Enterprise is aimed at customers who want to set up a test cluster. It costs $10,000 for a test cluster, which includes six months of online support plus three days of design and deployment consulting from Red Hat and support for one Ceph admin. The production support costs 1 cent per GB per month and has hotfixes for critical issues, interaction for an unlimited number of Ceph admins, 24x7 online and telephone support, and scalability to petabytes and above.
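As a rough illustration of what the production rate works out to, the following Python sketch applies the quoted 1 cent per GB per month to a cluster size. The one-petabyte example, the decimal definition of a petabyte (1,000,000 GB), and the function name are assumptions made for the arithmetic; the article does not say whether the rate applies to raw or usable capacity.

```python
CENTS_PER_GB_PER_MONTH = 1   # production support rate quoted above
GB_PER_PB = 1_000_000        # assumption: decimal petabyte


def monthly_support_cents(capacity_gb: int) -> int:
    """Monthly production support cost in cents for a given capacity."""
    return capacity_gb * CENTS_PER_GB_PER_MONTH


# Hypothetical one-petabyte cluster.
monthly_dollars = monthly_support_cents(1 * GB_PER_PB) // 100
yearly_dollars = monthly_dollars * 12
```

Working in whole cents keeps the arithmetic exact; at this rate a petabyte comes to $10,000 per month, the same figure as the one-time jumpstart package.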

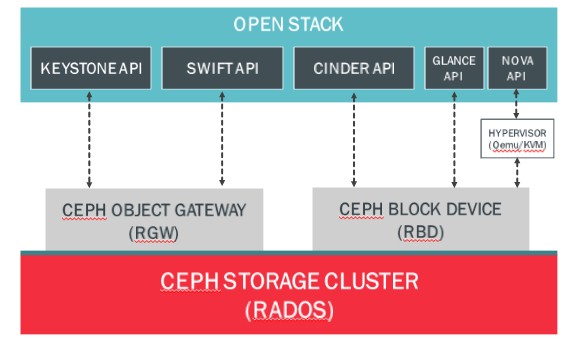

The most popular use of Ceph today, says Turk, is as storage for an OpenStack-based cloud, which looks like this:

In this use case, there are virtual machines running atop KVM. But another use case for Ceph, albeit a less popular one, is to just run the cluster as cloud object storage, without any KVM or virtual machines, and link Web applications to the cluster through the S3 or Swift APIs. The Ceph Object Gateway allows clusters that are geographically distributed to replicate data across multiple regions for better data resiliency. In still other cases where performance is of the utmost importance, such as social networking or photo sharing, customers are using the librados native interface to allow their Web application servers to access objects on the Ceph cluster.

Looking ahead, Turk says to expect support for iSCSI protocols in Ceph concurrent with the future "H" release of Ceph, which has not been named yet, as well as RBD mirroring, support for LDAP and Kerberos authentication, and performance tweaks. Further out, Red Hat will be delivering a production-grade version of CephFS (and it is not clear what this means for GlusterFS) as well as support for Microsoft's Hyper-V hypervisor and VMware's ESXi hypervisor on the client side.