Big Switch Brings Hyperscale SDN To Enterprises

Big Switch Networks, a networking startup that was spun out of the Stanford University labs that created the OpenFlow protocol that underpins a lot of software-defined networking stacks, is rolling out its Big Cloud Fabric Controller. The software is the culmination of four years of work and fulfils its founders' goals of creating a controller that can span both physical and virtual networks.

One thing that Big Switch is not doing is cloning VMware's NSX virtual networking stack. The company was moving along a similar track when it was founded a little more than four years ago and was as keen on network overlay and underlay technologies as the formerly independent Nicira, which was acquired by VMware two summers ago for $1.26 billion to become the foundation of NSX.

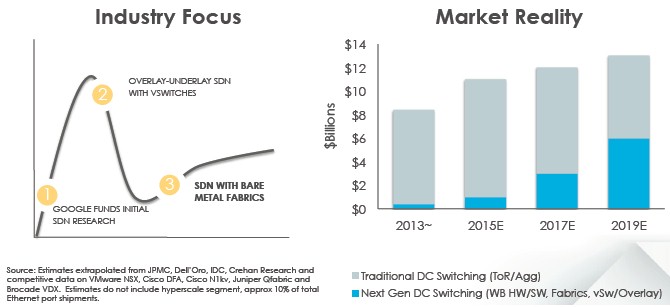

Among other things, the Big Cloud Fabric Controller is taking inspiration from the hyperscale datacenter operators like Google, Microsoft, and Facebook, which have all created their own network operating systems and fabrics as part of their own SDN efforts. The companies could not wait for SDN technologies to mature because they are operating at scales that most enterprises will never see and they must add automation for the configuration of switches and routers and for the shaping of traffic around their massive, global networks as workloads and conditions change.

"The hyperscale datacenter operators have really lapped their incumbent vendors in pushing forward the state of the art," Kyle Forster, co-founder and vice president of marketing at Big Switch, tells EnterpriseTech. "A couple of years ago, we took a deep breath and asked who was being successful with SDN, and why, and are there patterns out there that we can look at. Frankly, it was the big hyperscale companies that were making SDN, bare metal switching, and core platform and datacenter designs to enable this stuff really successful. And in many ways, these ideas became the fabric of our company."

The hyperscale datacenter operators are not, Forster explains, just working in a vacuum. He says that a lot of the networking engineers at Google, Facebook, Microsoft, Amazon, and similar hyperscale companies came from traditional supercomputing backgrounds.

"And these folks are moving back and forth," says Forster. That is because the hyperscale datacenter operators, the national supercomputing labs, and large Federal agencies in the intelligence arena (all of whom are working with Big Switch at the moment) are trying to solve the same networking issues at scale, mainly that networking is too brittle and takes too much human intervention. "I think the pendulum has swung just a little towards the hyperscale companies because they are funded a little more and they can work a little further into the future," says Forster. All of the hyperscale companies buy their bare metal switches directly from original design manufacturers in Asia and install their own network operating systems on them; in some cases they have a mix of physical and virtual switches, but all of them are deploying onto bare metal. Facebook is unique in that it is trying to foster open switch designs to be shared with the industry through its Open Compute Project.

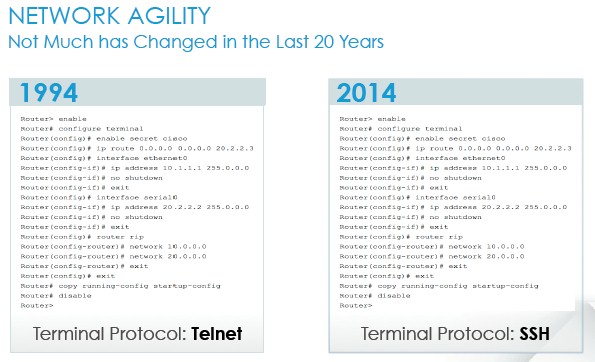

The rigidity of traditional networking is a real problem. Here is a funny graphic that illustrates the point by showing two decades of progress in cutting-edge network management technology:

That is a silly chart, but it makes a valid point. In addition to rolling out the new Big Cloud Fabric Controller, Big Switch will be updating its existing Big Tap entry-level network monitoring this quarter to a V4.0 release.

Big Switch has closed its first $1 million deal with a customer and now has production deployments in six key verticals – financial services, hyperscale, technology, telecom carriers, Federal government, and higher education. The largest customer has Big Tap deployed in 16 different datacenters worldwide. "Big Tap is a fairly easy way to get into SDN and bare metal," says Forster. The Big Tap update has features for deeper packet matching, which has affinity for VXLAN matching and tunneling matching. The tunneling allows taps to sit in remote datacenters while the monitoring tools are located in a central datacenter. "We think that the traditional tap-every-rack topology was just way too expensive, management didn't scale for it, and we have competitive wins to show for it. We think that distributed tapping is the next new topology."

The Big Tap V4.0 update also includes support for 40 GE networks, allowing the monitoring fabric to aggregate 10 GE links and to tunnel over 40 GE links. The update also includes support for Dell's Force 10 S4810-ON switch, which is important because of the partnership agreement between Dell and Big Switch that was announced in April and that is kicking in now.

While Big Tap is interesting and useful, Big Cloud Fabric Controller is the flagship product at Big Switch. The software is based on a number of design principles borrowed from the hyperscale datacenter operators. First, it assumes that enterprises will want to use bare metal switches to cut costs and to separate the underlying hardware from the network operating system. Just as enterprises have a choice of operating systems for X86 servers, Big Switch, along with the hyperscale companies and many large enterprises, wants to have a choice of network operating system atop generic iron. At the moment, Broadcom's Trident II network ASIC is the closest thing that the networking industry has to an X86 processor, and it is the one that the Big Cloud Fabric Controller supports. Other network ASICs will no doubt be added as network operating systems (largely based on Linux) become available for these bare metal switches.

Separating the network control plane from the data plane is the second hyperscale design principle informing the new Big Switch fabric. In the traditional approach, the management features, the control plane, and the data plane are all bundled together in the network operating system running on the switch, with additional orchestration and network management tools feeding into the switches. The Big Cloud Fabric Controller instead puts a thin operating system with SDN features on switches that use merchant silicon, and relies on an SDN controller to provide centralized management and policies for those switches.

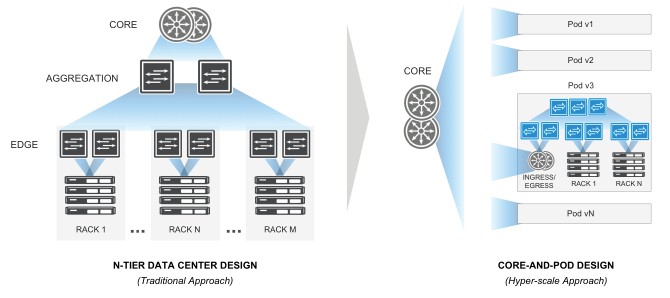

The final hyperscale principle is a core-and-pod network design, which contrasts with the traditional three-tier network:

In this example, the network assumes there will be a lot of traffic between nodes in a cluster of servers (so-called east-west traffic) as well as communication with other pods of servers and the outside world (so-called north-south traffic). Back in the early days of the Internet, the traffic flowed up the tree and down, but these days with virtualization and distributed parallel workloads, up to 70 percent of the network traffic is east-west in a typical enterprise datacenter.
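To make the east-west versus north-south distinction concrete, here is a minimal Python sketch that labels flows using the definitions above: traffic between two servers in the same pod counts as east-west, and everything else (other pods, the outside world) counts as north-south. The server names, the `POD_OF` mapping, and both function names are hypothetical and are not part of any Big Switch software; the share is computed by flow count, not by bytes.

```python
from collections import Counter

# Hypothetical server-to-pod mapping, invented for illustration only.
POD_OF = {
    "web-1": "pod-a",
    "web-2": "pod-a",
    "db-1": "pod-a",
    "cache-1": "pod-b",
}


def classify_flow(src, dst):
    """Return 'east-west' if both endpoints are known and in the same pod."""
    src_pod = POD_OF.get(src)
    dst_pod = POD_OF.get(dst)
    if src_pod is not None and src_pod == dst_pod:
        return "east-west"
    # Traffic to another pod or to an unknown (external) endpoint.
    return "north-south"


def east_west_share(flows):
    """Fraction of flows (by count) that stay inside a single pod."""
    counts = Counter(classify_flow(src, dst) for src, dst in flows)
    total = sum(counts.values())
    return counts["east-west"] / total if total else 0.0


flows = [
    ("web-1", "db-1"),      # same pod
    ("web-2", "db-1"),      # same pod
    ("web-1", "cache-1"),   # different pod
    ("web-1", "internet"),  # outside world
]
print(east_west_share(flows))  # 0.5
```

A real measurement would weight flows by bytes and pull pod membership from an inventory system rather than a hard-coded table.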

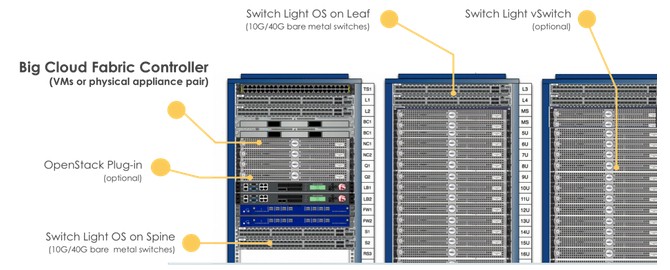

Here are the elements of the Big Cloud Fabric Controller:

The Big Cloud Fabric Controller is implemented either on a server hardware appliance, like other traditional network gear, or as a cluster of virtual machines running on an X86 server. The controller has a minimum hardware requirement of a two-socket machine with cores running at 2 GHz with 4 GB of memory and 20 GB of disk capacity, and it installs on a Linux operating system atop the hypervisor of your choice. Some of the functions of the controller stack are pushed down to the physical or virtual switches, and the network can operate in a "headless mode," meaning if the controllers are taken down for some reason, the network keeps operating at its then-current configuration.
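The headless mode idea can be illustrated with a toy model. The Python sketch below is not Big Switch's implementation and does not use any real API; the `Switch` and `Controller` classes and all of their methods are invented for illustration. It only shows the general pattern: the controller pushes state down to the switches, each switch keeps a local copy, and forwarding continues from that copy when the controller goes away.

```python
class Switch:
    """Toy switch that caches the last table pushed by a controller."""

    def __init__(self, name):
        self.name = name
        self.forwarding_table = {}  # destination -> output port
        self.controller_connected = False

    def apply_config(self, table):
        # Store a local copy so forwarding does not depend on the controller.
        self.forwarding_table = dict(table)
        self.controller_connected = True

    def controller_lost(self):
        # Headless mode: keep the cached table, but receive no new updates.
        self.controller_connected = False

    def forward(self, dst):
        # Look up the output port in the locally cached table.
        return self.forwarding_table.get(dst)


class Controller:
    """Toy controller that pushes one table to every attached switch."""

    def __init__(self):
        self.switches = []

    def attach(self, switch):
        self.switches.append(switch)

    def push(self, table):
        for switch in self.switches:
            switch.apply_config(table)

    def shutdown(self):
        for switch in self.switches:
            switch.controller_lost()


controller = Controller()
leaf = Switch("leaf-1")
controller.attach(leaf)
controller.push({"10.0.0.5": 3})

controller.shutdown()
# The switch still forwards using its then-current configuration.
print(leaf.forward("10.0.0.5"))  # 3
```

The design choice being illustrated is that the data plane holds its own state, so losing the controller stops configuration changes but not packet forwarding.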

The stack includes support for a number of bare metal 10GE and 40GE spine and leaf switches, which can be configured with the Switch Light operating system, a Linux-derived, SDN-friendly network operating system designed by Big Switch. The controller can also hook into the Switch Light vSwitch, an implementation of the Open vSwitch virtual switch that was originally developed and open sourced by Nicira before it was eaten by VMware. The Big Cloud Fabric Controller also has a Neutron plug-in for the OpenStack cloud controller, and can also hook into OpenStack through the new Modular Layer 2 (ML2) driver mechanism of that cloud controller. (ML2 is a superset of the Neutron plug-ins for Open vSwitch, Hyper-V, and linuxbridge that allows for drivers to be more easily created.)

Big Cloud Fabric Controller is coming out in two versions. The P Clos Edition is aimed at Clos fabrics running atop bare metal leaf and spine switches, while the Unified P+V Clos Edition runs on the physical network as well as on vSwitch virtual switches embedded in hypervisors on the servers. The former is for workloads that only deploy on bare metal servers, while the latter is for workloads that deploy on a mix of bare metal and virtualized servers.

The initial target design for the Big Cloud Fabric Controller is to create and manage a sixteen-rack pod. At around 40 servers per rack and 640 servers in a pod, that works out to somewhere around 16,000 virtual machines of capacity in a cloud. "That's a pretty big blast area," says Forster. "We don't see a lot of folks wanting more than 16,000 VMs in a pod."
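The pod arithmetic is easy to check. The short Python sketch below uses only the rack, server, and VM figures quoted above; the VMs-per-server density is not a number Big Switch stated and is simply what those figures imply. The function names are made up for this example.

```python
def servers_per_pod(racks, servers_per_rack):
    """Total servers in a pod of identical racks."""
    return racks * servers_per_rack


def implied_vms_per_server(vms_per_pod, servers):
    """VM density implied by a pod-level VM target (an inference, not a spec)."""
    return vms_per_pod / servers


servers = servers_per_pod(racks=16, servers_per_rack=40)
density = implied_vms_per_server(vms_per_pod=16_000, servers=servers)

print(servers)  # 640
print(density)  # 25.0
```

In other words, the 16,000 VM figure assumes roughly 25 virtual machines on each of the 640 servers in the pod.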

The controller stack is licensed on a per-switch basis, and Big Switch is being a bit cagey about the pricing but says the goal is to be 75 percent lower than the NSX stack with overlays and underlays from VMware and 60 percent lower than the ACI approach from Cisco Systems, which has application control functions embedded in the selected switches to provide SDN functionality. Big Cloud Fabric Controller has been in beta testing at ten companies since last November and will be available this quarter. As you might expect, Big Switch will be focusing on greenfield cloud and virtual desktop infrastructure installations to start, since this is where the low-hanging fruit is. No one expects companies to rip out and replace their switching infrastructure overnight.