Heftier Gluster Storage Helps Red Hat Tackle Big Data

Just because Red Hat bought Inktank for its Ceph clustered object storage software does not mean it has forgotten about the Gluster clustered file system, which it sells commercially as Red Hat Storage Server. Each has its own purpose in the datacenter, and each needs to be expanded to provide more capacity and throughput as datasets continue to swell.

To that end, Red Hat is wrapping up the latest tweaks to the open source GlusterFS 3.6 file system as Red Hat Storage Server 3, which launches today with significant capacity enhancements. Those enhancements make it better able to take on other parallel file systems such as Lustre, championed by Intel, and GPFS, which was created by IBM. Both Lustre and GPFS come out of the supercomputing community and have been tweaked to be more enterprise friendly in recent years. Like GlusterFS, they are being positioned as a better option than the Hadoop Distributed File System (HDFS) for underpinning MapReduce and other workloads running atop Hadoop, as well as for more generic parallel file system workloads where the datasets are large and need to be fed into clusters at a high rate. Red Hat bought Gluster back in October 2011 for $136 million and has thousands of customers using the open source GlusterFS variant and an unknown number using Red Hat Storage Server.

With the release 3 update, Red Hat Storage Server has about three times the raw capacity of the prior version. The commercial-grade GlusterFS can now have up to 60 disk drives per cluster node, up from 36 drives, and the maximum number of nodes in the cluster has been boosted by a factor of two to 128 nodes. With fatter 6 TB SATA disk drives offering 50 percent more capacity than the 4 TB drives that were typically used until recently, a fully extended RHSS cluster can hold about 19 PB of usable capacity, Irshad Raihan, senior principal product manager for storage and big data at Red Hat, tells EnterpriseTech.

We took these numbers at face value when talking to Raihan, but on consideration it was a bit perplexing that the usable capacity on a big cluster running RHSS 3 was not larger. When we do the math on a fully extended RHSS 3 cluster, it should be 60 drives times 128 nodes times 6 TB per drive for a total of 46 PB of raw capacity. Raw capacity is not usable capacity, of course. The server nodes each have RAID 6 data protection on them in the scenario above, which eats some capacity, and then there is two-way data replication across nodes for both performance and availability reasons, which halves what is left. That is how the capacity gets knocked down to 19 PB from 46 PB.
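The arithmetic above can be sketched in a few lines of Python. The drive count, node count, drive size, RAID 6, and two-way replication come from the article; the RAID group size of 12 drives (10 data plus 2 parity) is purely an assumption on our part, chosen because it is a common layout and happens to land on Raihan's 19 PB figure. Red Hat did not tell us the actual group size, and the `ClusterSpec` class is just an illustrative helper, not anything from RHSS.

```python
from dataclasses import dataclass

TB_PER_PB = 1000  # decimal units, the way drive capacities are quoted


@dataclass(frozen=True)
class ClusterSpec:
    """Hypothetical helper for back-of-the-envelope capacity math."""
    nodes: int
    drives_per_node: int
    drive_tb: float
    raid_group_size: int = 12    # ASSUMPTION: 10 data + 2 parity per RAID 6 group
    raid_parity_drives: int = 2  # RAID 6 always gives up two drives per group
    replicas: int = 2            # two-way replication, per the article

    def raw_tb(self) -> float:
        # Every drive in every node, before any protection overhead.
        return self.nodes * self.drives_per_node * self.drive_tb

    def usable_tb(self) -> float:
        # RAID 6 keeps only the data fraction of each group,
        # then replication divides what is left by the copy count.
        data_fraction = (
            (self.raid_group_size - self.raid_parity_drives)
            / self.raid_group_size
        )
        return self.raw_tb() * data_fraction / self.replicas


rhss3_max = ClusterSpec(nodes=128, drives_per_node=60, drive_tb=6)
three_nodes = ClusterSpec(nodes=3, drives_per_node=60, drive_tb=6)

print(f"full cluster raw:    {rhss3_max.raw_tb() / TB_PER_PB:.1f} PB")
print(f"full cluster usable: {rhss3_max.usable_tb() / TB_PER_PB:.1f} PB")
print(f"three nodes raw:     {three_nodes.raw_tb() / TB_PER_PB:.2f} PB")
```

Under that assumed layout, the full cluster works out to roughly 46 PB raw and 19 PB usable, and three fully loaded nodes come to a little over 1 PB raw. A different RAID group size would shift the usable figure somewhat, so treat the 19 PB match as plausible rather than confirmed.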

Perhaps the more important thing for a lot of customers is that they can get a raw 1 PB storage pool with three hefty server nodes. As Raihan correctly pointed out, only five years ago, customers measured data in tens to hundreds of terabytes and now with machine data, logs, sentiment analysis, and other data being gathered and kept forever, petabytes are becoming the new norm.

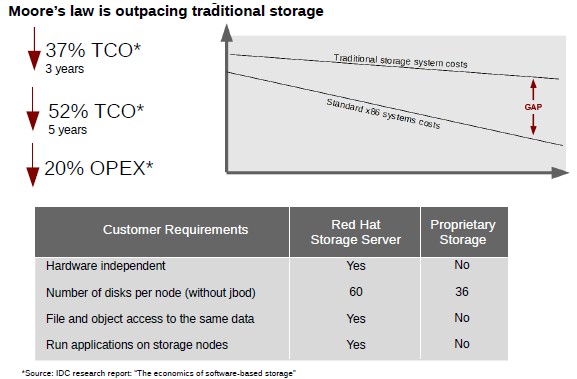

The thing that makes GlusterFS and its commercial-grade RHSS interesting for enterprises is that it runs atop the XFS file system on the local nodes and provides a single, global namespace that runs across the cluster. This gets around some of the metadata issues that other clustered file systems have. GlusterFS is also POSIX compliant, which means all kinds of operating systems can link with it, and it supports NFS, SMB, HDFS, and various object storage protocols in addition to its own native Gluster protocol for data access. The file system also has synchronous replication for local data protection and asynchronous replication to move data to a separate geographic location for high availability and disaster recovery. The other interesting bit about GlusterFS is that the same nodes that are used for storing data can be used to run applications. Here is how Red Hat stacks GlusterFS/RHSS up against traditional disk storage arrays:

In addition to the expandability of the file system across more drives, nodes, and capacity, Red Hat has also added some data protection features. RHSS 3 has thin provisioned volume snapshots for backups of data, which consume less capacity than regular snapshots would. The updated software can do non-disruptive snapshots, meaning the data can be copied without pausing the file system or the applications that use it, and RHSS 3 can do up to 256 snapshots per file system volume. Red Hat also has user-serviceable snapshots (basically, a portal that allows end users to recover files without having to call storage administrators) in tech preview. RHSS 3 now allows for the Nagios system monitoring tool to plug into the RHSS console and act as the babysitter for the servers and networks underpinning the storage cluster. Nagios is, of course, one of the most popular system monitoring tools in the world and is often a part of the cluster management stack.

Red Hat says that it has significantly expanded the hardware compatibility list for RHSS with the version 3 update and that it is also adding support for solid state storage in addition to disk drives to allow RHSS to be used for low latency workloads. Red Hat is also packaging up RHSS in an RPM format so Enterprise Linux customers can deploy RHSS atop existing clusters running Shadowman's Linux. RHSS 3 itself is certified to run atop RHEL 6, and it is not clear when support for RHEL 7 will be added.

The final new bit with RHSS 3 is the integration of GlusterFS with Hadoop. Red Hat has been working with Hortonworks to make GlusterFS an alternative to HDFS underneath the Hortonworks Data Platform implementation of Apache Hadoop. RHSS 3 is certified to be the storage file system for Hortonworks Data Platform 2.0.6, and Raihan says that Red Hat is exploring offering similar support for other Hadoop distributions. These partnerships may be hard to come by. MapR has its own file system alternative to HDFS. Cloudera is the other possibility, but with its strong partnership with Intel, it looks like Cloudera will lean towards Lustre. IBM has its own BigInsights distribution and is keen on pairing it with the gussied-up version of GPFS, which is called Elastic Storage to give it a little marketing pizzazz. RHSS 3 has not been certified against the raw Apache Hadoop distribution, but Raihan says there is a good chance it will work given the closeness between the Hortonworks Hadoop distribution and the open source Apache Hadoop on which it is based.

At the moment, RHSS can mimic HDFS, and in the future, says Raihan, Red Hat will be adding support for the HBase non-relational database layer that runs atop HDFS as well as the Tez application framework developed by Hortonworks for the new YARN cluster resource manager for the Hadoop 2 stack.

Red Hat did not provide pricing for RHSS 3. It is available starting today.