Taking The Omni-Path To Cluster Scaling

Intel has some impressive networking assets that it has acquired in the past several years, and the company has made no secret that it wants its chips to be used in switches and network adapters and the silicon photonics networks that will eventually be used to lash machines to each other. The first fruition of Intel’s networking efforts, now called the Omni-Path architecture, will come to market next year, offering 100 Gb/sec links on switches that are denser and zippier than InfiniBand gear.

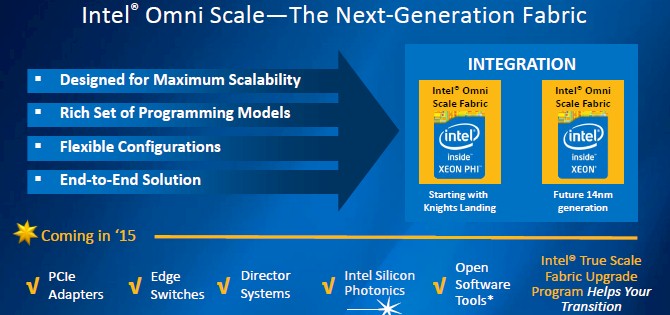

The plan has been to add Omni-Path ports to the “Knights Landing” Xeon Phi multicore chips, which will be created in versions that allow them to be put onto PCI-Express co-processor cards or as standalone processors with their own main memory, network interfaces, and PCI-Express peripherals just like a regular Xeon processor from Intel. In the summer, Intel talked about the network interfaces it would be adding to Xeon Phi and Xeon processors supporting Omni-Path, and now it is talking a bit about the switch side of the Omni-Path fabric.

You might be doing a bit of a double take on the name Omni-Path. Back in June, when Intel divulged some details on its follow-on to the InfiniBand networking it got through its acquisition of the QLogic True Scale business, the future product was called Omni Scale, not Omni-Path.

According to Charlie Wuischpard, vice president and general manager of workstation and high performance computing at Intel’s Data Center Group, the idea behind the Omni Scale name was that the networking scheme would provide benefits at any scale of cluster, from small ones to mega-scale ones. But Intel's marketing people are using the "path" name as they have before, specifically in the QuickPath Interconnect that is the point-to-point interconnect that links Xeon processors to each other in shared memory systems. So the InfiniBand follow-on is now formally known as Omni-Path.

Last summer, Intel said that it would deliver Omni-Path switching products including PCI-Express network interface cards and edge switches and director switches, and would also be employing its silicon photonics fiber optic interconnect technologies to extend the range of network links and get rid of copper cabling. Intel also said at the time that it would be integrating its first generation Omni-Path interfaces on the “Knights Landing” many-cored X86 coprocessor and on a future Xeon processor implemented in 14 nanometer processes. That could mean the “Broadwell” Xeons, which are a tweaked version of the current “Haswell” Xeon processors that are shrunk down to 14 nanometers from the 22 nanometers used to manufacture the Haswells. But it could also mean the “Skylake” Xeon follow-ons to the Broadwell chips, which will have a brand new architecture as well as using the 14 nanometer manufacturing. Broadwell Xeons should come in late 2015 or early 2016, but Intel has not provided any schedule for the as yet, and the Skylake Xeons could come out anywhere from 12 to 18 months after that if history is any guide.

At the SC14 supercomputing conference this week in New Orleans, Intel is divulging some details on the switches that will be able to talk to these ports on its Xeon and Xeon Phi processors as well as to adapter cards that plug into normal PCI-Express or mezzanine slots on servers and storage arrays.

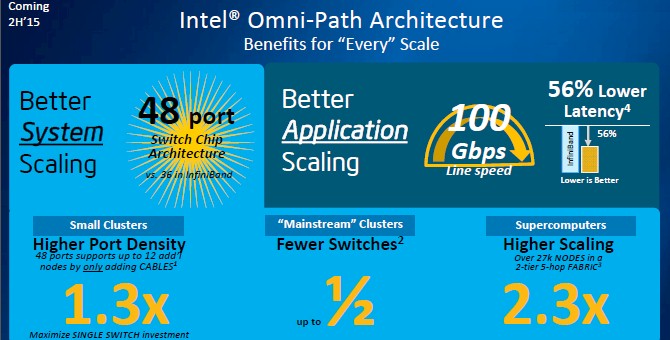

The Omni-Path switch chip will support up to 48 ports, compared to the 36 ports that Intel’s True Scale and Mellanox Technology’s Switch-X and Switch-IB switch ASICs provide. This might sounds trivial, but 48 ports means you can support a dozen more server nodes in a rack without having to buy a second switch. Wuischpard adds that on a mainstream cluster with 1,024 node, the Omni-Path edge switches could drop the switch count by as much as half compared to current InfiniBand gear. To be precise, this compares fat tree configurations for hooking those 1,024 nodes together using either Mellanox SwitchX or Intel True Scale edge switches with full bisection bandwidth. And at the high-end, the 36-port InfiniBand switches from Intel and Mellanox hit the top-end of their scale at 11,664 nodes, according to Wuischpard, but Omni-Path networks using the 48-port switches will be able to scale out to 27,648 nodes, or a factor of 2.3X higher than current InfiniBand networks.

Intel that the Omni-Path switches and adapters will run at 100 Gb/sec and it will offer 56 percent lower latency. That comparison pits two 1,024-node clusters in fat tree configurations against each other. (These clusters have two tiers of switches and a maximum of five hops between nodes.) One cluster is using the Omni-Path 48-port switches and server adapters and the other stacks up the CS7500 director switch and the SB7700/SB7790 edge switches and their companion adapters from Mellanox. All of that Mellanox gear runs at Enhanced Data Rate (EDR) 100 Gb/sec speeds for InfiniBand. Back in June, when Mellanox debuted the Switch-IB ASICs, it said that the SB7700 provided 7 Tb/sec of aggregate bandwidth across its 36 ports and had a port-to-port latency of 130 nanoseconds. Dropping the latency in the Omni-Path gear by 56 percent would put the port-to-port latency down around an astounding 57 nanoseconds.

Small wonder, then, distributed databases and storage clusters are adopting InfiniBand as what amounts to a backplane to link nodes together almost as tightly as NUMA chipsets do on servers.

While Intel is talking a bit about Omni-Path switching, it has not, as Wuischpard admits, divulged much about the Omni-Path protocol itself and how it differs from InfiniBand and Ethernet and how it is similar.

"It is not Ethernet and it is not InfiniBand, but the important thing is that as a user and as an application, you really won't care,” explains Wuischpard. “One of the ways that we have doubled share with our InfiniBand business is that we have been selling installations of True Scale InfiniBand with an upgrade path, and one of the main benefits there is that the whole software layer remains consistent even as we are switching out the whole underlying infrastructure. That sounds like a pretty big project, but there are a number of customers that if they are going to own a cluster for four years, they would like to get three years with a 100 Gb/sec solution. And if they are comfortable with our stack and the way it works, then they are comfortable with the decision."

The fact that Intel has been able to boost sales by more than 50 percent in the first three quarters of 2014 for its True Scale business, which is selling five-year-old Quad Data Rate (QDR, or 40 Gb/sec) InfiniBand switches and adapters, is a testament to the Omni-Path technology, even if we don’t know much about it yet. Clearly, some very large customers do.

The important thing, again, is that the application layer sees Omni-Path the same way it sees True Scale, and that Intel is working with the OpenFabrics Alliance to ensure that the OpenFabrics Enterprise Distribution (OEFD) high-performance network drivers work well on top of Omni-Path hardware. Intel is also setting up an organization called the Intel Fabric Builders to get their system and application software ready to run on day one with Omni-Path gear. Founding members include Altair, ANSYS, E4 Computer Engineering, Flow Science, Icon, MSC Software, and SUSE Linux.

All of this work is important to Intel. For all intents and purposes, Intel owns the processing on servers in the datacenter, an effort that didn’t start with the launch of Pentium Pro chips for servers back in 1993, but rather years earlier when the planning for the datacenter assault began. The company took a long-term view on specialized processors for storage devices and also on flash drives, and has built up a considerable storage-related business. And it acquired Fulcrum Microsystems, the interconnect business from Cray, and the InfiniBand business from QLogic to build out a formidable networking chip business. If it can marry network ports on processors to switch chips, it will be able to provide an end-to-end stack of chips for its server and switch customers and give the network incumbents a run for the money.