Using Automated Data Analytics and IoT to Enhance Shop Floor Productivity

Sponsored Content by Numascale

Data from networked devices, the Internet of Things (IoT), is beginning to flow from the manufacturing floor to the data center. This IoT data provides an easier and more automated way to monitor the performance of the entire manufacturing process. Many companies are deploying IoT-enabled devices, but putting the data to use can be a challenge: most large organizations don’t have a data scientist on staff to analyze it, and small to mid-sized factories and manufacturing shops certainly don’t have that luxury. To address this need and create a cost-saving IoT infrastructure, many smaller companies are now turning to automated IoT solutions.

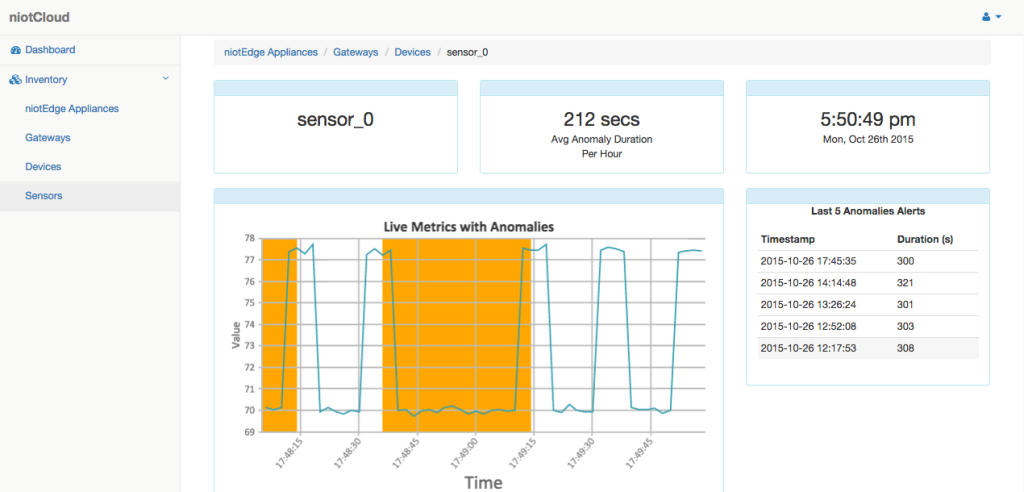

Traditionally, “shop floor” data is analyzed using Statistical Process Control (SPC), which applies statistical methods to gain insight into manufacturing processes. SPC is deployed to both monitor and control those processes in order to keep operations optimal. It does this through thresholding, where alarms and alerts can be set on IoT (and other) devices. A deeper analysis is possible, however, by using the constantly flowing IoT data to detect anomalies associated with work stoppages, events that could go undetected using SPC and thresholding alone.

For example, a rapid temperature fluctuation may occur beneath a set threshold, indicating that a failure is imminent. Such a failure mode would go undetected without a deeper analysis of the available data. Modern data analytics systems now make it possible to detect anomalies in data patterns based on normal past behavior, rather than on arbitrary thresholds set by engineers.
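To make the contrast concrete, here is a minimal sketch of the temperature example, not Numascale's actual method. It compares a fixed alarm limit with a check on how much the reading-to-reading change deviates from a baseline of normal behavior. The function names, the sample readings, the limit of 80, and the `z_limit` of 4 standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def threshold_alarms(readings, limit):
    """Indices of readings above a fixed limit (threshold-style alarm)."""
    return [i for i, r in enumerate(readings) if r > limit]


def fluctuation_anomalies(readings, baseline_len, z_limit=4.0):
    """Indices of readings whose change from the previous reading is
    far outside the changes seen during the baseline period.

    The first `baseline_len` readings are assumed to be normal operation.
    """
    deltas = [b - a for a, b in zip(readings, readings[1:])]
    baseline = deltas[:baseline_len - 1]
    mu, sigma = mean(baseline), stdev(baseline)
    return [
        i + 1  # delta i belongs to reading i + 1
        for i, d in enumerate(deltas)
        if i >= baseline_len - 1 and abs(d - mu) > z_limit * sigma
    ]


# Hypothetical temperature readings: 10 normal values, then rapid swings
# that never reach the alarm limit of 80.
normal = [70.0, 70.2, 69.9, 70.1, 70.0, 70.2, 69.8, 70.1, 70.0, 69.9]
readings = normal + [70.1, 74.5, 66.0, 73.8, 70.0]

alarms = threshold_alarms(readings, limit=80.0)
anomalies = fluctuation_anomalies(readings, baseline_len=len(normal))
print("threshold alarms:", alarms)
print("fluctuation anomalies:", anomalies)
```

In this sample the fixed limit never fires, while the baseline comparison flags the swings. A production system would likely use richer models than a single standard-deviation test.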

Numascale’s data science team has developed a fully automated end-to-end solution that addresses this need and saves cost by preventing expensive downtime. The process works in six steps:

- Edge Analytics Appliances are deployed to read and collect data (these appliances also perform the real-time analytics once models are trained).

- A model is automatically trained with a real incoming stream of IoT data, based on a time window set up by the engineer (who would have an idea of what is considered normal behavior).

- The model is built on back-end Numascale servers or in the cloud.

- The model is pushed back down to the Edge Analytics Appliance.

- Once in operation, the model offers much more intelligent monitoring than the previous threshold approach.

- Model performance is tracked and the model is updated when required.
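The six steps above can be sketched as a small collect, train, deploy, monitor loop. This is an illustrative outline only: the class and function names (`Model`, `EdgeAppliance`, `train_on_backend`), the simple mean and standard deviation model, and the use of alert rate as the tracked performance measure are assumptions, not Numascale's implementation.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Model:
    """Hypothetical model: flags values far from the trained mean."""
    mu: float
    sigma: float
    z_limit: float = 4.0

    def is_anomaly(self, value: float) -> bool:
        return abs(value - self.mu) > self.z_limit * self.sigma


def train_on_backend(window):
    """Steps 2-3: fit a model to the engineer-approved window of normal data."""
    return Model(mu=mean(window), sigma=stdev(window))


class EdgeAppliance:
    def __init__(self):
        self.buffer = []   # step 1: readings collected at the edge
        self.model = None  # step 4: set when a model is pushed down
        self.alerts = 0
        self.scored = 0

    def deploy(self, model):
        """Step 4: receive a model and reset the tracking counters."""
        self.model = model
        self.alerts = self.scored = 0

    def collect(self, value):
        """Steps 1 and 5: store the reading and score it if a model is loaded."""
        self.buffer.append(value)
        if self.model is None:
            return False
        flagged = self.model.is_anomaly(value)
        self.scored += 1
        self.alerts += int(flagged)
        return flagged

    def alert_rate(self):
        """Step 6: an assumed proxy for tracking model performance."""
        return self.alerts / self.scored if self.scored else 0.0


edge = EdgeAppliance()
for v in [20.0, 20.1, 19.9, 20.2, 19.8, 20.0, 20.1, 19.9]:
    edge.collect(v)                         # gather the "normal" window
edge.deploy(train_on_backend(edge.buffer))  # train, then push to the edge
flags = [edge.collect(v) for v in [20.0, 20.1, 23.5, 19.9]]
needs_update = edge.alert_rate() > 0.5      # assumed retraining trigger
print(flags, edge.alert_rate(), needs_update)
```

Here training happens in a plain function to stand in for the back-end servers or cloud. In a real deployment the model would be serialized and transferred to the appliance, and the retraining trigger would depend on how performance is actually measured.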

Numascale’s approach solves a number of deployment issues that have been common in the past. First, a typical six-month analytics deployment timeframe shrinks to just a few weeks because a robust suite of analytical models for machine anomalies is already built. Second, no data scientist is required at the customer site, only an experienced floor engineer who can help determine the time window of good data (the length of time that provides an adequate amount of normal operational data) and similar parameters.

Lastly, customers can choose to deploy a proof of concept in the cloud and thus bypass long procurement processes and approvals. Because the costs are pay-per-use, it is easy to demonstrate ROI and then move to a larger on-premises deployment based on that ROI data.

Numascale provides scalable turnkey systems for Big Data Analytics by combining optimized and maintained open source software with a cache-coherent shared-memory architecture. Its IoT solution was developed in conjunction with partners and customers such as Intel, Adlink, Dell, and Singapore Technologies.