Cisco Refreshes Nexus Switches, Integrates Whiptail Flash With UCS

If you are on the hunt for new switches for your datacenter, Cisco Systems has just revamped portions of its Nexus line of top-of-rack and end-of-row devices. Cisco has also built new flash arrays on its own Unified Computing System rack servers, using technology it got through its acquisition of flash array startup Whiptail back in September last year.

We will walk you through the switch announcements from the bottom of the line to the top. All of the devices are based on the NX-OS operating system, which has more than 55,000 customers running many hundreds of thousands of devices worldwide, according to Shashi Kiran, senior director of datacenter, cloud, and open networking at Cisco. The shift from Catalyst switches and their IOS operating system to Nexus switches and their NX-OS operating system caused Cisco a certain amount of grief several years back, since the newer devices were more expensive and yet had lower margins. Cisco got the product line back in balance, customers caught the 10 Gb/sec Ethernet wave despite the Great Recession, and now Cisco is humming along again.

At the very low end, the Nexus 1000V virtual switch, which provides a virtual link between hypervisors and the virtual machines running on physical servers, can now plug into the KVM hypervisor, which is championed by Red Hat and often associated with the Linux operating system and the OpenStack cloud controller.

From day one, the UCS machines, which were announced back in March 2009 and marked Cisco's entry into the server racket, were tightly integrated with VMware's ESXi hypervisor and its integrated virtual switch, which was called vSwitch at the time. Even though Cisco and VMware are tight partners, there are network admins who want the familiar NX-OS operating system – well, it is familiar now, even if it wasn't so much five years ago, when customers were using switches running Cisco's IOS operating system. So Cisco took the Layer 2 switching functions of NX-OS and the Layer 3 routing functions from IOS and wrapped them up in an ESXi virtual machine to create the Nexus 1000V alternative to vSwitch. After nearly two years of work, mostly spent waiting for Hyper-V to mature and for customers to start using it in datacenters, Cisco ported the Nexus 1000V virtual switch to Microsoft's hypervisor in April 2013. Now that the Nexus 1000V can plug into the KVM and OpenStack combination, most of the bases are covered.

Cisco has been promising support for the Xen hypervisor, the former heavyweight on Linux, but has not delivered it, and depending on customer demand, it may never do so. If it does, then a XenServer/CloudStack combo would make sense. "It will be a function of the business requirements," says Kiran. "Xen support is definitely in the pipeline."

The Nexus 1000V comes in two editions. The Essential Edition is the freebie version that provides basic switch functionality. The Advanced Edition brings the TrustSec security policies from Cisco's physical switches to the virtual one, plus rolling upgrades that keep the virtual switches live, VXLAN virtual overlays, high availability clustering for failover, and hooks into the management consoles for ESXi, Hyper-V, and KVM. The Advanced Edition costs $695 per node. The Nexus 1000V does not, by the way, require Cisco servers; in fact, many of the 8,500 customers who use the virtual switch are running it on servers made by someone other than Cisco.

Let's Get Physical

On the physical switching front, the new entry switch from Cisco is the Nexus 3172TQ, a 1U top-of-rack system that packs 48 10GBASE-T ports plus six QSFP+ uplinks running at 40 Gb/sec into the chassis. The 10 Gb/sec ports work with the existing copper LAN cabling used for older Gigabit Ethernet, which saves on cabling costs. The 40 Gb/sec ports can take four-way splitter cables, turning the six uplinks into 24 more 10 Gb/sec ports. As the network backbone gets faster, the splitter cables can be removed and the 40 Gb/sec ports can be used as faster uplinks. The Nexus 3172s support Layer 2 and 3 switching and employ the "Trident-II" ASIC from Broadcom rather than something cooked up by Cisco's engineers. The switch has an aggregate switching capacity of 1.4 Tb/sec and can forward 1 billion packets per second. The Nexus 3172TQ is available now and costs $21,000.
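The port counts and the 1.4 Tb/sec figure line up once each 40 Gb/sec uplink is counted as four 10 Gb/sec lanes. The sketch below works through that arithmetic in Python; the constant and function names are ours, and it assumes, as switch vendors usually do, that aggregate capacity counts traffic in both directions (full duplex).

```python
# Port and capacity arithmetic for the Nexus 3172TQ, using the figures quoted above.
# Assumption: aggregate capacity is counted full duplex (both directions).

BASE_T_PORTS = 48      # 10GBASE-T ports at 10 Gb/sec
QSFP_PORTS = 6         # QSFP+ uplinks at 40 Gb/sec
LANES_PER_QSFP = 4     # a four-way splitter cable turns one 40G port into four 10G ports


def ten_gig_ports(breakout: bool) -> int:
    """Count 10 Gb/sec ports, with or without splitter cables on the uplinks."""
    extra = QSFP_PORTS * LANES_PER_QSFP if breakout else 0
    return BASE_T_PORTS + extra


def aggregate_tbps() -> float:
    """Full-duplex switching capacity in Tb/sec; splitters do not change it."""
    one_way_gbps = BASE_T_PORTS * 10 + QSFP_PORTS * 40
    return one_way_gbps * 2 / 1000


print(ten_gig_ports(breakout=False))  # 48
print(ten_gig_ports(breakout=True))   # 72
print(aggregate_tbps())               # 1.44
```

Splitter cables change how many devices you can plug in, not the total bandwidth: the six uplinks carry 240 Gb/sec each way whether they run as six 40 Gb/sec ports or 24 10 Gb/sec ports.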

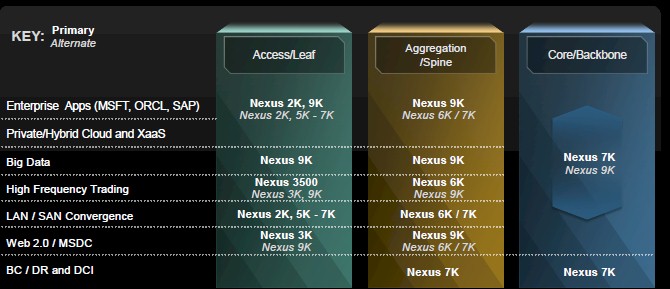

It is hard to keep straight which markets Cisco is aiming its various switches at, so here is a handy little chart showing the primary and alternative uses of each switch family by market.

As you can see, the Nexus 3000 series are aimed at Web 2.0 and other hyperscale datacenters. The Nexus 5000s and 6000s, which are bigger boxes with more ports and more bandwidth and which are based on Cisco's own ASICs, are aimed at clouds, enterprise datacenters and their beefy back-end applications, and environments where LAN and SAN traffic are converged.

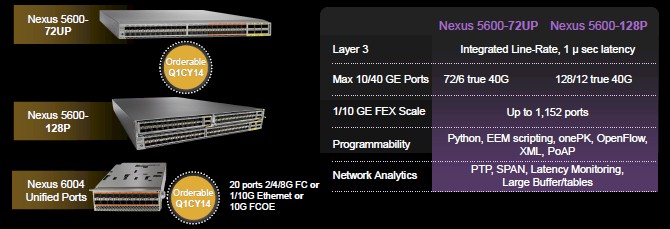

There are two new Nexus 5600 switches: a 1U rack machine with 72 ports and a 2U box with 128 ports. Their Cisco ASIC adds support for VXLAN virtual overlays, which allow a Layer 2 network to span Layer 3 routed links between datacenters and still look like one giant Layer 2 network. (You need this capability to be able to live migrate virtual machines across those routers, among other things.)
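To make the overlay idea concrete: VXLAN, as standardized in RFC 7348, prepends an 8-byte header carrying a 24-bit VXLAN Network Identifier (VNI) to each Layer 2 Ethernet frame, then ships the result inside an ordinary UDP/IP packet, so the routers in between only ever see IP traffic. The following is a minimal Python sketch of that header format, not a model of how Cisco's ASIC does it in hardware; the function names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header from RFC 7348.

    Layout: 8 flag bits (only the I bit, 0x08, set), 24 reserved bits,
    a 24-bit VXLAN Network Identifier (VNI), then 8 more reserved bits.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    first_word = 0x08 << 24   # I flag set, reserved bits zero
    second_word = vni << 8    # VNI in the top 24 bits, low byte reserved
    return struct.pack("!II", first_word, second_word)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix a Layer 2 Ethernet frame with a VXLAN header.

    In a real network this becomes the payload of a UDP datagram sent to
    VXLAN_UDP_PORT inside an ordinary IP packet, which routers forward
    like any other IP traffic.
    """
    return vxlan_header(vni) + inner_frame


print(vxlan_header(5000).hex())  # 0800000000138800
```

The 24-bit VNI allows roughly 16 million isolated segments, against the 4,094 usable IDs of a 12-bit VLAN tag, which is one reason overlays appeal to large multitenant clouds.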

The big news with the new Nexus 5600s is that they forward traffic at line rate with around 1 microsecond of port-to-port latency, about twice as fast as the Nexus 5500 switches they replace. The Nexus 5672UP sports 32 SFP+ Ethernet ports running at 10 Gb/sec plus 16 universal ports that can run 10 Gb/sec Ethernet, Fibre Channel over Ethernet (FCoE), or native Fibre Channel at 2, 4, or 8 Gb/sec for hooking into SANs. The chips in the box deliver 1.44 Tb/sec of bandwidth. This switch costs $32,000 and will be available in the first quarter.

The Nexus 5600-128P switch takes the base 5672UP design and adds two double-decker expansion slots on top for generic expansion modules, or GEMs. Each of these modules has 24 universal ports running at 10 Gb/sec Ethernet or the slower native Fibre Channel speeds, plus two Ethernet uplinks running at 40 Gb/sec. The Nexus 5600-128P nearly doubles the bandwidth, to 2.56 Tb/sec. The base switch costs $36,000 and each GEM costs $12,000. This switch will also ship before the end of the first quarter.
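The quoted bandwidth figures for both Nexus 5600 models follow from their port counts if every port is treated as a 10 Gb/sec port running full duplex. This Python sketch checks that and works out list price per port from the prices above; it assumes that reaching 128 ports on the 5600-128P means buying both GEMs, and it ignores optics, licenses, and discounts.

```python
# List prices and port counts as quoted above.
# Assumption: the 128-port figure requires both $12,000 GEMs to be installed.
configs = {
    "Nexus 5672UP": {"ports": 72, "price_usd": 32_000},
    "Nexus 5600-128P": {"ports": 128, "price_usd": 36_000 + 2 * 12_000},
}


def full_duplex_tbps(ports: int, gbps_per_port: int = 10) -> float:
    """Aggregate capacity in Tb/sec, counting both directions of every port."""
    return ports * gbps_per_port * 2 / 1000


for model, cfg in configs.items():
    per_port = cfg["price_usd"] / cfg["ports"]
    print(f"{model}: {full_duplex_tbps(cfg['ports']):.2f} Tb/sec, "
          f"${per_port:,.2f} per port")
# Nexus 5672UP: 1.44 Tb/sec, $444.44 per port
# Nexus 5600-128P: 2.56 Tb/sec, $468.75 per port
```

By this rough measure, the bigger box costs slightly more per port, in exchange for the flexibility of the GEM slots.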

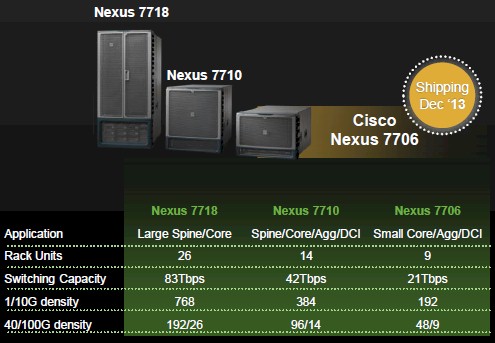

Those were the new top-of-rackers. The end-of-row machine, which aggregates those top-of-rack switches, is the Nexus 7000, and this is a big, bad modular switch. In fact, with the announcement of the low-end Nexus 7706, there are now three variants of this machine, which differ in the number of line cards they support. Cisco actually started shipping the new six-slot Nexus 7706 at the end of December but waited until now to make a formal announcement. The existing Nexus 7718 and 7710 have eighteen and ten slots, respectively. The six-slotter supports up to 192 ports running at 10 Gb/sec, 96 ports running at 40 Gb/sec, or 48 ports running at 100 Gb/sec, and it has 21 Tb/sec of aggregate switching capacity with all of its modules plugged in. It can be programmed through Cisco's own ONE software-defined network controller (via the onePK development kit), XML, or Python, and it also supports the OpenFlow protocol. The Nexus 7706 modular switch costs $65,000.

In conjunction with the new switch, Cisco is launching the F3 Series 10 Gb/sec module, which has 48 SFP+ Ethernet ports running at 10 Gb/sec and consumes 35 percent less juice than the F2 Series cards. These new cards, which are based on Cisco's own F3 ASIC, are shipping now and cost $44,000.

There is not much news on the high-end Nexus 9000 front other than customer momentum. Cisco launched the Nexus 9000 high-end switches and their Application Policy Infrastructure Controller, or APIC, back in November. Like NX-OS and the Nexus switches, the Nexus 9000s and their APIC add-ons were developed by a Cisco spinoff that was subsequently spun back in. More than 300 customers have been playing with the Nexus 9000 switches in the first 30 days that they have been shipping, and they are using APIC simulators to gain experience with the controller. For the Nexus 9000s, Cisco created a cut-down version of NX-OS and put many of the functions required to virtualize networks into chips. The idea is to use hardware to compete against software-based network virtualization tools such as VMware's NSX. Cisco has also announced that security vendors A10 Networks, Catbird, and Palo Alto Networks and Hadoop distributors Cloudera and MapR Technologies have joined the APIC partnership program to hook their wares into Cisco's application controller. A lot of the big names in operating systems, storage, hypervisors, and security software – 28 in total – joined up for the November Nexus 9000 launch.

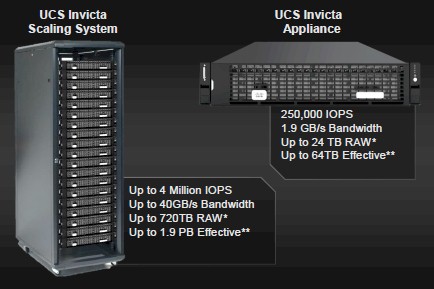

On the server front, Cisco has created a version of the Whiptail appliances based on its own iron to help accelerate UCS servers with flash. The Whiptail appliances are now branded UCS Invicta, and as EnterpriseTech told you last fall when Cisco did the $415 million deal, this is more about using flash to accelerate compute than it is about Cisco entering the storage market.

Todd Brannon, director of product marketing for Cisco’s Unified Computing System line, says that the new UCS Invicta Appliance is based on the company's C-Series rack server and uses 2.5-inch SSDs from multiple sources. The idea is that the appliance will be hooked into the switching infrastructure of the UCS chassis and provide fast access to data for a collection of blade servers. As Brannon has said before, he still expects primary data to be stored on SANs, with hot data staged to the flash drives for performance reasons.
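Staging hot data onto flash in front of a SAN is, at heart, a caching problem. As an illustration of the general technique, and not of how the Invicta software actually decides what to stage, here is a minimal least-recently-used (LRU) read cache in Python; `HotDataCache` and the `read_from_san` callback are hypothetical names standing in for the flash tier and the slower primary storage.

```python
from collections import OrderedDict
from typing import Callable


class HotDataCache:
    """Generic LRU read cache: recently read blocks stay on the fast (flash) tier.

    `read_from_san` is a hypothetical callable standing in for slower primary
    storage; this is an illustration, not a Cisco or Whiptail API.
    """

    def __init__(self, capacity_blocks: int, read_from_san: Callable[[int], bytes]):
        self.capacity = capacity_blocks
        self.read_from_san = read_from_san
        self.flash = OrderedDict()  # block_id -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block_id: int) -> bytes:
        if block_id in self.flash:
            self.flash.move_to_end(block_id)  # mark as most recently used
            self.hits += 1
            return self.flash[block_id]
        self.misses += 1
        data = self.read_from_san(block_id)   # slow path to primary storage
        self.flash[block_id] = data           # stage the hot block onto flash
        if len(self.flash) > self.capacity:
            self.flash.popitem(last=False)    # evict the least recently used block
        return data


cache = HotDataCache(capacity_blocks=2, read_from_san=lambda b: f"block-{b}".encode())
for block in [1, 2, 1, 3, 1, 2]:
    cache.read(block)
print(cache.hits, cache.misses)  # 2 4
```

Block 1 keeps getting re-read, so it stays on the flash tier, while blocks 2 and 3 get pushed back out to the slower tier when space runs short.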

For selected workloads, such as virtual desktop infrastructure and email, the idea is to use the deduplication features of the Whiptail OS on the flash array to radically reduce the capacity needed to support users while at the same time boosting performance. The important thing about the Whiptail OS, Brannon adds, is that it was tweaked to get near parity between read and write speeds on the flash. Writes are generally slower than reads on flash arrays, and this is annoying.
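Deduplication pays off for virtual desktops because hundreds of desktops are cloned from the same operating system image, so most of their blocks are identical. Below is a minimal Python sketch of the generic technique, fixed-size blocks fingerprinted with SHA-256 and stored once; it is not the Whiptail OS algorithm, the 4 KiB block size is an assumption, and the synthetic desktops are made up for illustration.

```python
import hashlib
from typing import Dict, List

BLOCK_SIZE = 4096  # assumption: fixed 4 KiB blocks


def dedupe(data: bytes, store: Dict[str, bytes]) -> List[str]:
    """Store each unique block once; return the fingerprints needed to rebuild data."""
    recipe = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)  # write the block only if it is new
        recipe.append(fingerprint)
    return recipe


def rebuild(recipe: List[str], store: Dict[str, bytes]) -> bytes:
    """Reassemble the original data from its block fingerprints."""
    return b"".join(store[fp] for fp in recipe)


# Synthetic VDI example: 100 desktops cloned from one 8-block "golden image",
# each with one unique block of user data.
store: Dict[str, bytes] = {}
golden_image = b"".join(bytes([i]) * BLOCK_SIZE for i in range(8))
logical_bytes = 0
for user in range(100):
    profile = f"user-{user}".encode().ljust(BLOCK_SIZE, b"\0")
    desktop = golden_image + profile
    recipe = dedupe(desktop, store)
    assert rebuild(recipe, store) == desktop  # dedup must be lossless
    logical_bytes += len(desktop)

physical_bytes = sum(len(block) for block in store.values())
print(f"{logical_bytes / physical_bytes:.1f}:1")  # 8.3:1
```

The 8.3:1 ratio here is an artifact of the synthetic data; real ratios depend entirely on how much the desktops or mailboxes actually have in common.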

The base UCS Invicta Appliance has 3 TB of flash capacity, expandable to 24 TB, and provides a maximum of 64 TB of usable space with data compression. This base machine has a suggested list price of $63,300 and will be available on January 29.

The UCS Invicta Scaling System scales up to 30 of these appliance nodes, which are interconnected and offer up to 1.9 PB of effective capacity after data compression and 4 million I/O operations per second of aggregate performance.
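The Invicta capacity figures are consistent with one another: 30 nodes at 64 TB of effective capacity each works out to the quoted 1.9 PB. This short Python calculation, which assumes decimal units (1 PB = 1,000 TB) and that the per-node compression ratio holds across the whole cluster, also backs out the implied compression ratio and the average I/O rate per node.

```python
RAW_TB_PER_NODE = 24             # maximum raw flash per Invicta appliance
EFFECTIVE_TB_PER_NODE = 64       # quoted maximum with data compression
MAX_NODES = 30                   # Invicta Scaling System limit
CLUSTER_IOPS = 4_000_000         # quoted aggregate I/O operations per second

implied_ratio = EFFECTIVE_TB_PER_NODE / RAW_TB_PER_NODE
cluster_raw_tb = MAX_NODES * RAW_TB_PER_NODE
cluster_effective_pb = MAX_NODES * EFFECTIVE_TB_PER_NODE / 1000  # decimal units
iops_per_node = CLUSTER_IOPS / MAX_NODES

print(f"implied compression ratio: {implied_ratio:.2f}:1")   # -> 2.67:1
print(f"raw flash, {MAX_NODES} nodes: {cluster_raw_tb} TB")  # -> 720 TB
print(f"effective capacity: {cluster_effective_pb:.2f} PB")  # -> 1.92 PB
print(f"I/O per node: {iops_per_node:,.0f} IOPS")            # -> 133,333 IOPS
```

The 64 TB figure is a ceiling that depends on how compressible the data is; the calculation simply takes Cisco's numbers at face value.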

Cisco is still using PCI-Express flash cards from Fusion-io to accelerate the performance of single server nodes, and Brannon says that there will still be uses for these. He did not want to tip Cisco's hand about the potential of using UltraDIMM flash-based memory sticks inside Cisco's blade and rack servers, as IBM has just done with its System X6 series of Xeon E7 servers. But it stands to reason that if IBM – soon to be Lenovo – is using the UltraDIMMs, Cisco and the other server players will as well.

One last thing. Cisco has updated the UCS Director software that it got by virtue of its $125 million acquisition of Cloupia back in November 2012. Cloupia, which was founded by some former Cisco executives in 2009, had created a cloud management tool called Unified Infrastructure Controller, and as the name suggests, it is a framework for managing public and private cloud infrastructure. The software was rebranded UCS Director after the acquisition, and with the current release, it can now babysit up to 50,000 virtual machines compared to 10,000 with the prior release. UCS Director is not to be confused with UCS Manager, which is the control program inside the UCS chassis for managing server nodes and virtual fabrics for those nodes, or UCS Central, which aggregates multiple UCS Manager domains into a single entity that can span up to 10,000 servers.