Intel’s Super Elastic Datacenter Bubble

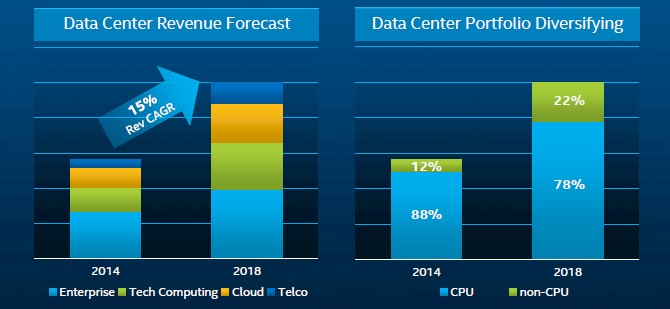

IT spending is growing at a few measly points a year, at best, and guess what? Diane Bryant, general manager of Intel's Data Center Group, is not even breaking a sweat. The reason is simple: revenues from the chippery that Intel sells into servers, storage, and switches have been growing much faster than the market at large and are projected to keep doing so out to 2018, which is the edge of Intel's planning horizon.

At the company's annual Investor Meeting late last week, the top brass of the chip giant gave Wall Street, and the rest of us who care about Intel's fortunes because so much of the datacenter is underpinned or driven by Intel, some insight into how they think the future will play out. Intel certainly faces its share of competition, both in the networking market, where it is just beginning to get traction, and from upstarts who want to craft ARM servers, as well as from Nvidia GPU accelerators and IBM Power systems. Even so, the company is projecting revenues for the Data Center Group to grow around 16 percent this year. That is twice the growth rate that Intel had in 2013, thanks to an uptick in spending by enterprise customers. (This was an uptick that Intel had expected in the second half of 2013.)

The Data Center Group had 11 percent growth in 2012 and was expected to accelerate in 2013 and beyond, but there was a slight pause among enterprise shops, which historically have pegged their spending very tightly to gross domestic product (GDP) growth. The economy picked up in 2014, and that is the main reason why Bryant is expecting such high growth this year. The forecast of at least 15 percent growth for the Data Center Group between 2016 and 2018 inclusive is an indication of two things. First, Intel is not predicting an economic downturn for the next four years and, second, it is not anticipating that the competitive threats from its many rivals will amount to much.

There is no such thing as perfectly elastic demand for any product, but compute capacity is probably the closest thing to it that the world has ever seen. It is difficult to sate the appetite for compute, and as Intel has delivered more, demand has spiraled upward. Bryant and Stacey Smith, Intel's CFO, cited the Jevons Paradox, an economic theory developed by William Stanley Jevons in 1865, which says that as a technology progresses and gets more efficient, use of that technology expands instead of contracting. Jevons was observing the use of coal as a fuel for the Industrial Age, and Bryant was talking about commodity servers, virtualization, and cloud computing in the Information Age some century and a half later.
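To make the Jevons argument concrete, here is a minimal Python sketch built on a textbook constant-elasticity demand model. The model, the `resource_use` helper, and the elasticity values are illustrative assumptions, not anything Intel presented. When efficiency doubles, the effective price of a unit of service halves, and total resource use scales by `2 ** (elasticity - 1)`, so consumption rises only when demand is elastic (elasticity above 1).

```python
def resource_use(efficiency: float, elasticity: float,
                 resource_price: float = 1.0, scale: float = 1.0) -> float:
    """Total resource consumed under an illustrative constant-elasticity model.

    efficiency: units of useful service delivered per unit of resource
    elasticity: price elasticity of demand for the service (assumed constant)
    """
    # Higher efficiency lowers the effective price of one unit of service.
    service_price = resource_price / efficiency
    # Constant-elasticity demand (an assumption): quantity ~ price ** -elasticity.
    service_demand = scale * service_price ** (-elasticity)
    # Resource needed to deliver that much service at this efficiency.
    return service_demand / efficiency


# Hypothetical elasticities: inelastic, unit-elastic, and elastic demand.
for eps in (0.5, 1.0, 1.5):
    ratio = resource_use(2.0, eps) / resource_use(1.0, eps)
    print(f"elasticity {eps}: doubling efficiency scales resource use by {ratio:.2f}")
```

This prints ratios of 0.71, 1.00, and 1.41. Only the elastic case reproduces the Jevons effect, which is the bet Intel is making about compute.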

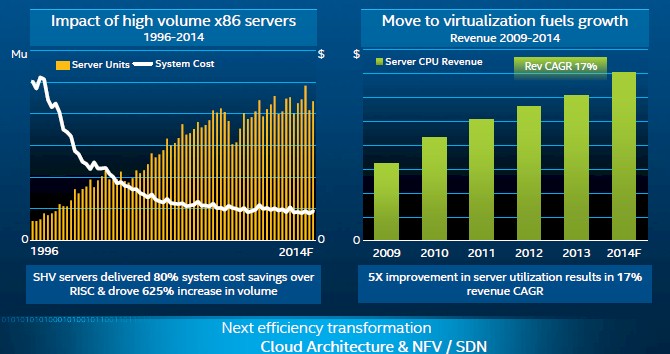

The move to X86-based commodity servers just gave people cheaper iron, and this was important because it compelled companies to create distributed applications that spanned systems instead of running on big, fat SMP or NUMA machines. But with server utilization hovering around 5 to 10 percent on most X86 systems, it is arguable that companies merely traded ease of programming for a more distributed and resilient architecture that was better able to deal with spikes in demand. It took server virtualization, which runs multiple workloads concurrently on one machine, to drive utilization up to 50 percent or so. And it will take cloud computing, which means running multiple workloads from different business divisions on a private cloud, or from different companies entirely on the public cloud, to drive utilization up to 80 percent or higher.

"Cloud architecture is the next big transition in the industry and we believe it will continue to drive efficiency and we believe it will continue to unleash new demand," explained Bryant. It is not just efficiency that drives demand for more compute capacity than expected, of course, but also the massive reduction in friction in getting a virtual server set up and running a specific software stack, compared to how it was done on physical machines at most companies only a few years ago. The time it takes has come down from months to hours, and sometimes minutes.

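The utilization figures cited above come down to simple division: the number of machines needed for a fixed amount of work scales inversely with average utilization. Here is a minimal sketch, assuming a hypothetical workload that would keep exactly 10 servers fully busy and ignoring the headroom real shops keep for peaks. The `servers_needed` helper is a hypothetical name for illustration.

```python
def servers_needed(busy_servers: int, utilization_pct: int) -> int:
    """Machines needed to host work that would fully occupy `busy_servers` machines,
    when each machine runs at `utilization_pct` percent average utilization."""
    if not 0 < utilization_pct <= 100:
        raise ValueError("utilization_pct must be in (0, 100]")
    # Ceiling division in integer arithmetic (avoids float rounding surprises).
    return -(-busy_servers * 100 // utilization_pct)


# Hypothetical workload worth 10 fully busy servers; utilization levels from the text.
for label, pct in [("bare-metal X86", 10), ("virtualized", 50), ("cloud", 80)]:
    print(f"{label}: {servers_needed(10, pct)} servers at {pct}% utilization")
```

Under those assumptions, the same work needs 100 machines at 10 percent utilization, 20 at 50 percent, and 13 at 80 percent.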
You will no doubt notice that when Intel first entered the server market back in 1996, average selling prices were quite high. Then, as Intel used its prowess in PC chips to make server chips at high volume, those peddling proprietary or RISC/Unix servers had to try to compete. They have largely been pushed into their niches (important ones, to be sure), and Intel accounts for the lion's share of server CPU shipments in the world. You know that those peddling ARM server chips want to do to Intel what Intel has done to other chip makers, and they are already used to living on razor-thin margins from client devices. That is why Intel is trying hard to get its own Atom and Core M chips into smartphones, tablets, and other non-PC client devices even as 64-bit ARM chip makers are trying to break into the datacenter. History would seem to suggest that the incumbent with a market and margins to protect always loses out to the usurper from below. But history has not seen a force like Intel since the IBM of the 1960s and 1970s, and unlike the Big Blue of days gone by, Intel never stops being paranoid (in a healthy way) about its competition.

If you look at the two charts above, you will notice that server unit shipments have been on a barely upward trend since 2007 or so, if you take out the dip for the Great Recession. Server prices have been trending downward thanks to cut-throat competition, but the decline has as much to do with expensive RISC/Unix and proprietary systems making up a smaller slice of the pie relative to X86 machinery. As you can see from the right side of the chart above, server chip revenues for Intel have been trending upward at a compound annual growth rate of 17 percent from 2009 through 2014, and if anything have accelerated a little this year.
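For reference, compound annual growth rate (CAGR) is the constant yearly rate that turns a starting value into an ending value. 2009 through 2014 spans five compounding periods, so a 17 percent CAGR implies revenues roughly 2.19 times higher at the end of the run than at the start. The sketch below uses normalized values, not Intel's actual revenue figures, and the helper names are illustrative.

```python
def growth_multiple(rate: float, years: int) -> float:
    """How many times larger a value gets after compounding `rate` for `years`."""
    return (1 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


# 2009 through 2014 is five annual compounding periods; values are normalized.
multiple = growth_multiple(0.17, 5)
print(f"17% CAGR over 5 years -> {multiple:.2f}x")    # 2.19x
print(f"recovered rate: {cagr(1.0, multiple, 5):.1%}")  # 17.0%
```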

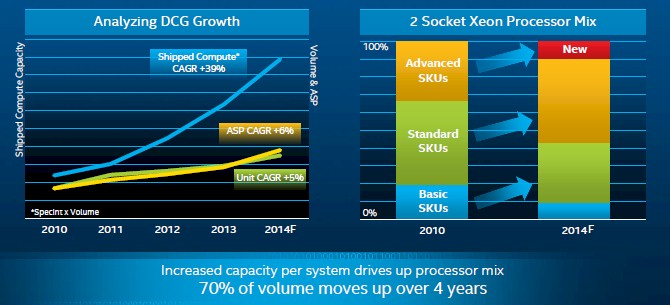

Bryant said that Intel's server processor unit shipments (the chart above is for all server chips, not just Intel's) have grown at a compound annual rate of about 5 percent from 2010 through projected 2014 shipments, and that this year average selling prices have actually risen much faster than shipments, raising their compound growth rate over the same period to 6 percent. (If you look carefully, shipments grew faster than ASPs in 2010, 2011, and 2012.) But look at the compute capacity shipped, as measured by the SPECint benchmark applied to those chips. Intel is using Moore's Law to add more cores to each chip, and customers are soaking them up. In general, said Bryant, Intel offers a minimum of 20 percent more performance at a given price point in the Xeon line with each generational upgrade, and customers have run the numbers and figured out that they should build more capacious servers rather than install more of them. This has pushed the processor mix upward: over the past four years, 70 percent of server chip volumes have moved up the stack as companies play the numbers.

The reason here is simple: better bang for the buck on the chip, on server capital expenses, and on operating costs. Bryant cited James Hamilton, vice president and distinguished engineer at Amazon Web Services, who said that by getting customized variants of Xeon processors, AWS was paying something on the order of 2 percent to 4 percent in incremental total cost of ownership (TCO) but getting 14 percent more oomph from its servers.
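One way to read Hamilton's figures is as performance per dollar of TCO. Assuming the 14 percent is a throughput gain on the same workload (the article does not say how it was measured), the custom parts work out to roughly a 10 to 12 percent improvement in performance per TCO dollar. The `perf_per_tco_gain` helper is a hypothetical name for illustration.

```python
def perf_per_tco_gain(perf_gain: float, tco_increase: float) -> float:
    """Relative change in performance delivered per dollar of TCO."""
    return (1 + perf_gain) / (1 + tco_increase) - 1


# Figures attributed to Hamilton: +14% performance for +2% to +4% TCO.
for tco_increase in (0.02, 0.04):
    gain = perf_per_tco_gain(0.14, tco_increase)
    print(f"TCO +{tco_increase:.0%}: performance per TCO dollar +{gain:.1%}")
```

This prints gains of 11.8 percent at the low end of the TCO range and 9.6 percent at the high end.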

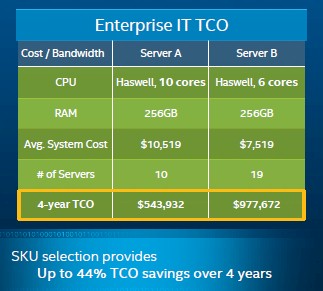

Intel's math also suggests that customers should be, and are, buying beefier Xeon machines as core counts go up, which works for a lot of enterprise and cloud workloads but not for every HPC workload. (As EnterpriseTech has previously reported, Intel itself is shifting away from two-socket Xeon E5 servers toward single-socket Xeon E3 servers for its vast electronic design automation cluster, because EDA work at Intel is really a massive collection of hundreds of thousands of single-threaded jobs, and having the fastest cores matters more than anything else.) In a comparison that Bryant pulled from an enterprise IT customer, over the course of four years the customer could reduce its TCO by 44 percent and get the same processing capacity in 10 servers using ten-core "Haswell" Xeon E5 processors, compared to buying and maintaining 19 machines running six-core Haswell Xeon E5s. The amazing thing to me in these numbers is that this transfers money from software vendors and system vendors to Intel. The reductions in power, cooling, software licenses, and maintenance give the customer some savings, but the average system cost goes up, and most of that increase goes to the higher Xeon SKU.
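The customer comparison can be unpacked with a little arithmetic. The sketch below normalizes the six-core fleet's four-year TCO to 1.0 and uses only the server counts and the 44 percent reduction from the comparison; the `Fleet` type and `compare` helper are hypothetical names for illustration, and per-server TCO here is simply the fleet total divided evenly across machines.

```python
from dataclasses import dataclass


@dataclass
class Fleet:
    servers: int
    relative_tco: float  # four-year TCO, normalized so the baseline fleet = 1.0


def compare(baseline: Fleet, upgrade: Fleet) -> dict:
    """Derived ratios for two fleets that deliver the same processing capacity."""
    return {
        # Fraction of machines eliminated by the consolidation.
        "server_reduction": 1 - upgrade.servers / baseline.servers,
        # Same total capacity spread over fewer boxes: capacity each box carries.
        "capacity_per_server_ratio": baseline.servers / upgrade.servers,
        # Four-year TCO per remaining box, relative to a baseline box.
        "tco_per_server_ratio": (upgrade.relative_tco / upgrade.servers)
        / (baseline.relative_tco / baseline.servers),
    }


six_core = Fleet(servers=19, relative_tco=1.0)
ten_core = Fleet(servers=10, relative_tco=1.0 - 0.44)  # 44 percent lower TCO
for name, value in compare(six_core, ten_core).items():
    print(f"{name}: {value:.3f}")
```

It prints 0.474, 1.900, and 1.064: the ten-core fleet has about 47 percent fewer machines, each carrying 1.9 times the capacity, and each remaining box accounts for about 6 percent more four-year TCO than an old one even though the fleet as a whole costs 44 percent less.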

It is tough to be a server supplier these days, in case you were wondering. But it is pretty good to be Intel, almost like being IBM in the late 1960s, in fact. All of the core server businesses that Intel organizes itself around (enterprise, telco, cloud, and HPC) are growing fast enough for it to predict 15 percent revenue growth over the next four years. But, as Bryant pointed out, the lines are blurring. Telcos are building clouds, and so are enterprises. Cloud service providers are doing HPC, too.

"There is a blurring of the workloads between these different market segments as these new architectures emerge and as these new usages of these architectures emerge," Bryant said. Looking ahead, she added, Intel will have a harder and harder time saying a Xeon or Atom chip goes into an enterprise or cloud or telco or HPC account because of this blurring. This is a theme that readers of EnterpriseTech are well aware of.

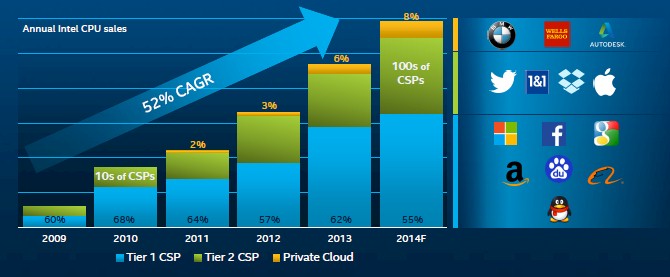

Cloud service providers in their many forms drive an astonishing 35 percent of Intel's server CPU revenues, and these customers are the first ones to push the company to offer customized chips. This year, 23 percent of the server CPUs bought by cloud service providers will be custom parts, and Intel expects custom chips to account for more than half of the server chips purchased by cloud companies in 2015. Intel has roughly 100 standard Xeon and Atom SKUs at any time, but this year it did 35 custom SKUs on top of that, compared to 15 custom chips a year ago.

"Our customers are willing to pay a premium for a customized solution," said Bryant, adding that this, along with the cloud buildout itself, was what was driving cloud revenues upward. "It's wonderful. Wonderful margins."

So now the ARM collective knows where to hit first, as if this weren't the first target anyway. Cloud builders control their own software stacks, have relatively few applications, and employ the best systems and software experts in the world, so they can port their applications to a new architecture faster than any other organization on earth. The hyperscale shops are sort of like supercomputing centers in the late 1990s, when Linux and Beowulf clusters came of age and parallel computing went mainstream. They had the money, the people, and the incentive to make a jump. Thus far, Intel has done a good job protecting and extending its customer base, but the fight has not really begun. If Microsoft, Facebook, Google, or Baidu goes ARM in a big way, that shock will be felt around the world.