Deep Learning Portends ‘Sea Change’ for Oil and Gas Sector

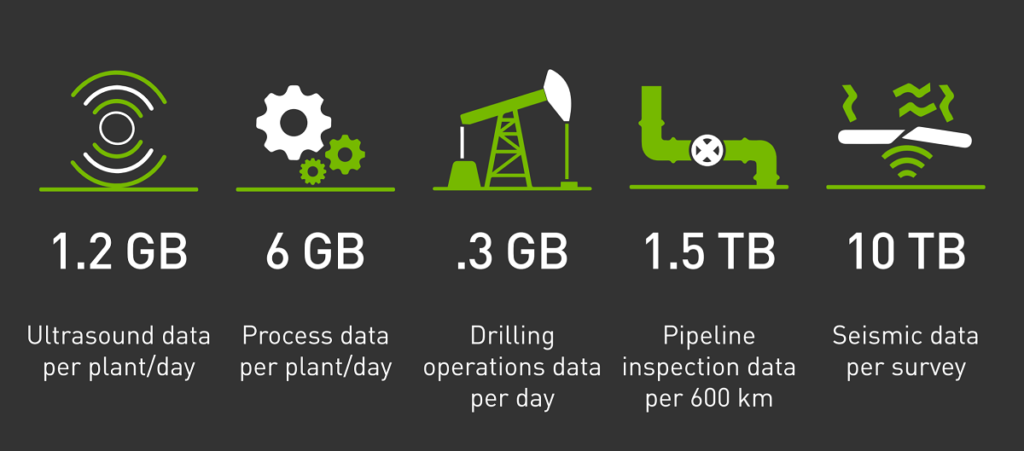

The billowing compute and data demands that spurred the oil and gas industry to become the largest commercial user of high-performance computing are now propelling the competitive sector to deploy the latest AI technologies. Beyond the requirement for accurate and speedy seismic and reservoir simulation, oil and gas operations face torrents of sensor, geolocation, weather, drilling and seismic data. The sensor data alone from one offshore rig can amount to hundreds of terabytes annually, but most of it remains unanalyzed dark data.

Why? Because there just isn’t enough compute capacity to crunch it all.

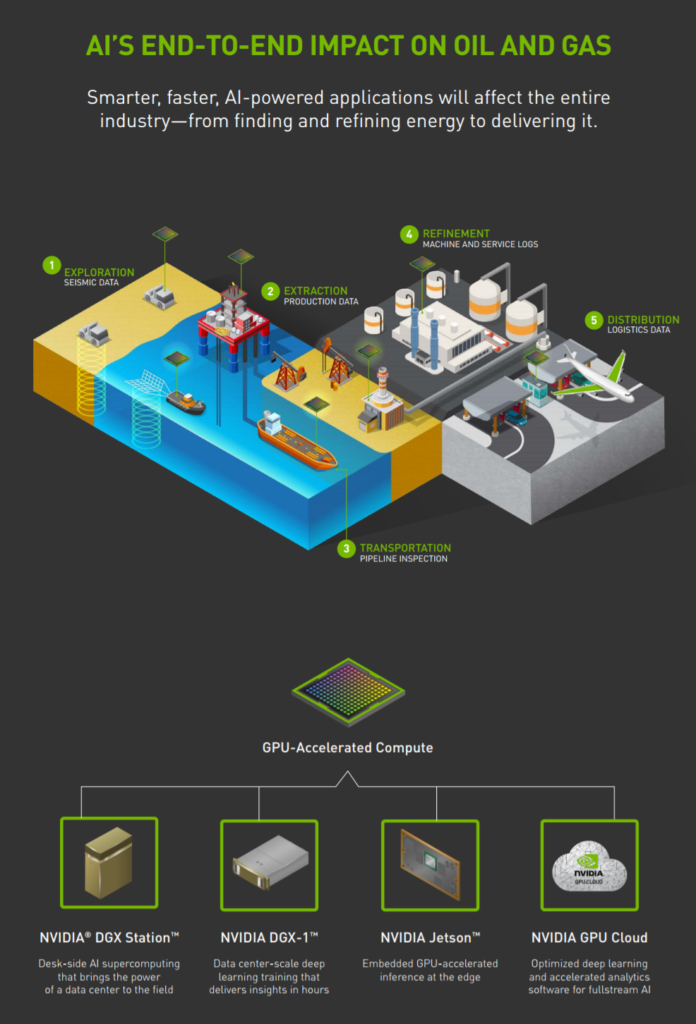

A collaboration between Nvidia and Baker Hughes, a GE company (BHGE) -- one of the world's largest oil field services companies -- kicked off this week to address these data challenges by applying deep learning and advanced analytics to improve efficiency and reduce the cost of energy exploration and distribution. The partnership leverages accelerated computing solutions from Nvidia, including DGX-1 servers, DGX Station and Jetson, combined with BHGE’s fullstream analytics software and digital twins to target end-to-end oil and gas operations.

“It makes sense if you think about the nature of the operations, many remote operations, often in difficult locations,” said Binu Mathew, vice president of digital development at Baker Hughes. “But also when you look at it from an industry standpoint, there’s a ton of data being generated, a lot of information, and you often have it in two windows: you have an operator who will have multiple streams of data coming in, but relatively little actual information, because you’ve got to use your own experience to figure out what to do.

“On the flip side you have a lot of very smart, very capable engineers who are very good at building physics models, geological models, who often take weeks or months to fill out these models and run simulations, they operate in that kind of timeframe. So in between you’ve got a big challenge of not being able to have enough actual data crossing siloes into a system that can analyze this data that you can take operational action from. This is the area that we at Baker Hughes Digital plan to address. We plan to do it because the technologies are now available in the industry: the rise of computational power and the rise of analytical techniques.”

The volume of data being generated by the industry leads logically to the expectation that the industry will greedily adopt exascale computing as soon as possible. “Even if you don’t talk about things like imaging data – which adds a whole order of magnitude to it – but just in terms of what you’d call semi-structured data, (which is) essentially data coming up from various sensors, it’s in the hundreds of petabytes annually,” Mathew said. “And if you take a deep water rig you’re talking about in the region of a terabyte of data coming in per day. To analyze that kind of data at that kind of scale, the computational power will run into the exaflops and potentially well beyond.”
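As a rough sanity check on those figures, the per-rig daily rate Mathew cites can be annualized with simple arithmetic. The sketch below assumes a flat rate of exactly one terabyte per day and decimal units (1 PB = 1,000 TB); the function name and constants are illustrative and do not come from Baker Hughes or Nvidia.

```python
# Back-of-envelope arithmetic only; the 1 TB/day input is the approximate
# figure from Mathew's quote, and a constant daily rate is an assumption.
TB_PER_DAY_PER_RIG = 1.0   # "in the region of a terabyte of data coming in per day"
DAYS_PER_YEAR = 365
TB_PER_PB = 1000           # decimal petabyte (assumption)


def annual_terabytes(tb_per_day: float, days: int = DAYS_PER_YEAR) -> float:
    """Annualize a constant daily data rate, in terabytes."""
    return tb_per_day * days


per_rig_tb = annual_terabytes(TB_PER_DAY_PER_RIG)
per_rig_pb = per_rig_tb / TB_PER_PB

print(f"One rig: {per_rig_tb:.0f} TB/year ({per_rig_pb:.3f} PB/year)")
```

Under these assumptions a single deepwater rig lands at a few hundred terabytes per year, which is in line with the "hundreds of terabytes" per-rig figure cited earlier in the article.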

Baker Hughes, along with an increasing number of groups across academia and industry, is tackling this extreme-scale challenge using a combination of physics-based and probabilistic models.

“You cannot analyze all that data without something like AI,” said Mathew. “If you go back to the practical models: the oil and gas industry has been very good at coming up with physics based models, and they will still be absolutely key at the core for modeling seismic phenomenon. But to scale those models, combine the physics models with the pattern matching capabilities that you get with AI, the sea change we’ve seen in the last several years, if you look at image recognition and so on, deep learning techniques are now matching or exceeding human capabilities. So if you combine those things together you get into something that’s a step change from what’s been possible before.”

Nvidia believes that its GPU technologies will fuel this transformation by powering accelerated analytics and deep learning across the full spectrum of oil and gas operations.

“With GPU-accelerated analytics, well operators can visualize and analyze massive volumes of production and sensor data such as pump pressures, flow rates and temperatures,” said Tony Paikeday, Nvidia’s director of product marketing for artificial intelligence and deep learning, in a blog post. “This can give them better insight into costly issues, such as predicting which equipment might fail and how these failures could affect wider systems.

“Using deep learning and machine learning algorithms, oil and gas companies can determine the best way to optimize their operations as conditions change,” Paikeday continued. “For example, they can turn large volumes of seismic data images into 3D maps to improve the accuracy of reservoir predictions. More generally, they can use deep learning to train models to predict and improve the efficiency, reliability and safety of expensive drilling and production operations.”

The collaboration will leverage Nvidia’s DGX-1 servers for training models in the datacenter; the smaller DGX Station for computing deskside or at remote, bandwidth-challenged sites; and the Nvidia Jetson for powering real-time inferencing at the edge.

Jim McHugh, Nvidia vice president and general manager, said in an interview that Nvidia excels at bringing together this raw processing power with an entire ecosystem: “Not only our own technology, like CUDA, Nvidia drivers, but we also bring all the leading frameworks together. So when people are going about doing deep learning and AI, and then the training aspect of it, the most optimized frameworks run on DGX, and are available via our NGC [Nvidia GPU Cloud] as well.”

Cloud connectivity is a key enabler of the end-to-end platform. “One of the things that allows us to access that dark data is the concept of edge to cloud,” said Mathew. “So you’ve got the Jetsons out at the edge streaming into the clouds. We can do the training of these models because training is much heavier and using DGX-1 boxes helps enormously with that task and running the actual models in production.”

Baker Hughes says it will work closely with customers to provide them with a turnkey solution. “The oil and gas industry isn’t homogeneous, so we can come out with a model that largely fits their needs but with enough flexibility to tweak,” said Mathew. “And some of that comes inherently from the capabilities you have in these techniques, they can auto-train themselves, the models will calibrate and train to the data that’s coming in. And we can also tweak the models themselves.”

Nvidia sees a huge market opportunity, and its partnership with BHGE is part of a broader strategy to work with leading companies to bring AI into every industry. The self-proclaimed AI company believes technologies like deep learning will set off a strong virtuous cycle.

“The thing about AI is when you start leveraging the algorithms in deep neural networks, you end up developing an insatiable desire for data because it allows you to get new discoveries and connections and correlations that weren’t possible. We are coming from a time when people suffered from a data deluge; now we’re in something new where more data can come, that’s great,” said McHugh.

With over a decade’s experience covering the HPC space, Tiffany Trader is one of the preeminent voices reporting on advanced scale computing today.