Nvidia Inception Awards: AI Start-ups Pushing Innovation

We have seen the future, it was on display at Nvidia’s GTC 2018 conference in San Jose this week, and if this stuff works*, man, the future will be interesting:

- 50,000-square-foot retail stores with few employees and no exasperating checkout lines – you walk in, pick out what you want, and leave.

- using the signals from our central nervous system as a kind of USB port to our physiological internet to more precisely treat and predict chronic diseases

- using robots for arduous warehouse tasks, or mind numbing overnight security at office parks, power plants, R&D labs, etc.

- combining AI and satellite imagery to quickly detect infrastructure changes – such as expansion of a forced labor camp in North Korea, or new oil rig construction in the Gulf of Mexico.

- and cutting the time you’re confined in an MRI contraption from 45 minutes to 10 to 20, and reducing costs significantly.

Companies building these autonomous systems were the six finalists competing for a third of the $1 million Nvidia Inception Awards jackpot. Common themes among all of them were using AI to save lives, time, money, labor costs (i.e., replacing workers) – and, of course, that these AI-based use cases are enabled by lightning-quick Nvidia GPUs working against massive data sets.

Another common theme: the fresh enthusiasm of the CEOs and founders as they pitched their start-up strategies, fired by passion for their innovations and dreams of success. In them we saw the real face of the technology industry, the visionaries inventing innovative advances against big challenges.

The Inception Awards are in their second year, and Nvidia said the number of contesting companies grew by 10X over last year. There are now about 3,000 start-ups in the Inception Program. The 14 finalists for last year’s awards have, in the 12 months since, raised $180 million in investment capital.

The finalists were grouped into three categories, healthcare, enterprise and autonomous systems, and here are portraits of the awards finalists:

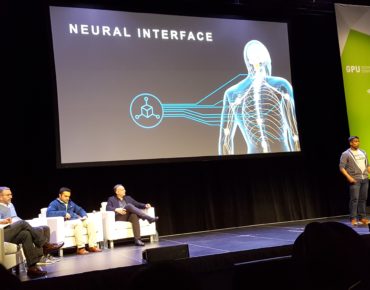

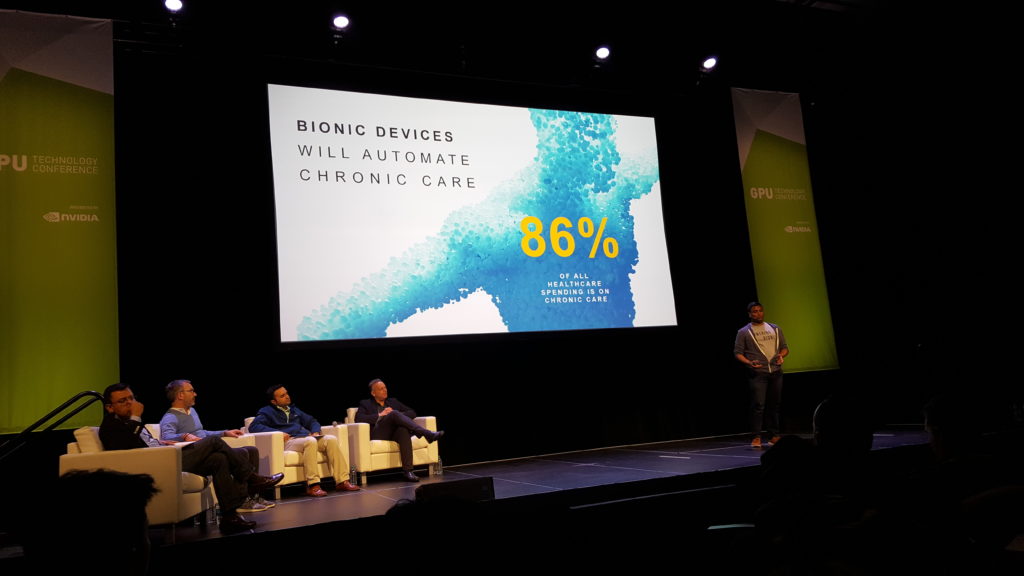

Healthcare Finalist: Cambridge Bio-Augmentation Systems

Cambridge Bio-Augmentation Systems (CBAS) co-founder and CEO Emil Hewage believes the key to the treatment of chronic disease (diabetes, heart disease, arthritis) is converting the biological code generated by our nervous system (our “biological internet”) into “neural data” that can be analyzed to direct and anticipate the unique medical treatments of individual patients.

“If someone is undergoing heart failure or responding poorly to experimental treatment, like a drug, or even diabetes,” Hewage said, “before you see the symptoms in the patient, what’s happening beforehand is this nervous system is becoming diseased and is unable to keep you regulated and in check.”

As you might expect, the nervous system’s biological code, the nerve signals, is “messy static,” Hewage said, “incredibly complicated.”

“The place for our technology at CBAS is to sit there between the biologically messy neural data, and the control systems and the engineers and clinicians who are trying to develop treatments of the future, and simplify and translate that,” he said.

CBAS’s strategy is to surgically implant in patients a custom titanium “bio-structured” device that’s controlled via a cloud interface and collects and transmits neural data for analysis. “Our implants pick up many channels of this messy static around any organ or system that they’ve put in the body,” said Hewage. “The challenge is to turn this into a code that we can interpret.”

He said CBAS’s goal is to create a “neural engineering open standard” as the basis for other medical device companies to build upon. “This is the way to enable the community… We’re releasing this as an open plug-and-play standard, as well as the data sets.”

“We’re translating nerve signals in real time and making them standard across patients and customize them automatically with a dedicated deep learning pipeline,” said Hewage. “This requires us to study intensely the entire nervous system, and everyone else can just treat it like an API.”

He said each of us generates 5 terabytes of neural data per week. “We’re mapping these disease conditions. We know this is the future of treatment.”

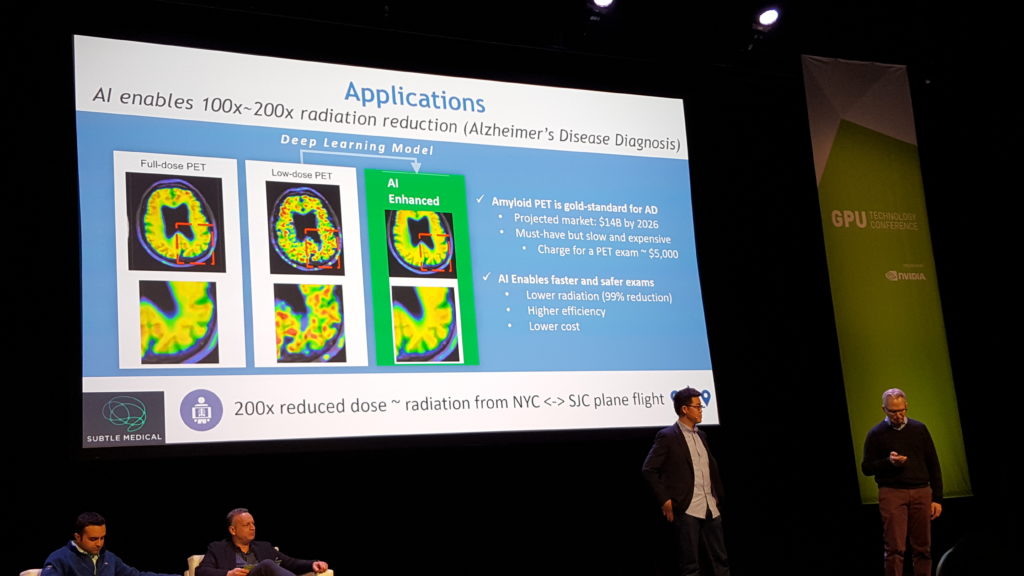

Healthcare Finalist: Subtle Medical

MRIs are problematic. They take 45 minutes, during which the patient must remain as still as possible inside what looks like a personalized sensory deprivation cave. Children, meanwhile, often must be sedated. And because the equipment is so expensive, MRIs typically cost $2,000.

Greg Zaharchuk, co-founder of Subtle Medical, has worked on speeding up MRIs for more than 10 years. He’s partnered with AI specialist Enhao Gong to develop new techniques that they say make MRI and PET scans (Positron Emission Tomography, which creates images of organs and tissues for cancer diagnosis) two to four times faster, as well as safer and smarter.

“We convert low cost, low quality into high quality imaging using deep learning,” said Gong, adding that Subtle is addressing a $7 billion market, targeting more than 5,000 hospitals and 14,000 medical image centers. “We can also use deep learning-based super resolution,” Zaharchuk said, “to improve diagnostic quality and lead to more confident clinical decisions.”

On the safety side, Subtle is working to reduce MRI “contrast doses,” which patients are given to produce medical images but which have been found to be toxic for people with kidney disease (martial artist Chuck Norris has filed a lawsuit about contrast doses). “Our deep learning solution can take 10 percent dose images and convert them into diagnostic-quality images,” Zaharchuk said.

Ultimately, the company foresees the use of medical images and AI for predictive diagnostics.

“Immediately our goal is to enable faster scans, lower dose and improve the workflow,” said Zaharchuk. “But what’s really exciting is the future vision… We can potentially predict images that will occur in the future and prognosticate about patients’ personalized treatment in the future. We believe the vast amount of medical imaging information can be used to predict pathologies and genetic features without the need for biopsy.”

For the record, the company said its solution will not reduce the role of MRI radiologists, who represent only 10 percent of MRI costs.

Much of what Subtle does is enabled by GPUs.

“GPU is very essential for our technology,” Gong said. “At GTC last year we presented how we use GPUs to get up to 400x speed-up for both deep learning training and inference. And GPUs are essential for our product to deliver improved performance and real time feedbacks.”

Subtle expects FDA approval of its initial product, designed to reduce the duration and radiation dosage for PET, by September.

Healthcare Winner: Subtle Medical

---------

Enterprise Finalist: AiFi

Amazon garnered strong media attention for the opening of its checkout-free Go store in Seattle a few months ago. But AiFi wants to go Amazon one better. At its GTC pitch, the start-up said Amazon Go is a specialty food store on the scale of a convenience store (1,800 square feet). AiFi said its ability to scale is what separates it. The company said it has a fully functional pilot store operating in Santa Clara and that it plans to open a 50,000-square-foot/30,000 SKU-item retail store in New York later this year – a store with the capacity for hundreds of shoppers at a time, none of whom will stand in a check-out line.

Co-founder Steve Gu has developed sophisticated neural nets that automate many retail store functions, such as real time consumer tracking at the edge via shoppers’ mobile devices, along with large-scale SKU product recognition and shelf auditing.

Key to AiFi’s system training and testing is using training data generated by simulations.

“In our simulations we know exactly where everything is, where all the people are, what they’re doing and what the items are,” said Co-founder Kaushal Patel. “We can train our AI on all this data that they’re craving. We can solve the large-scale checkout-free problem with our large-scale simulations.”

He said Nvidia GPUs generate realistic photos to the point where “sometimes we can’t tell the difference between real and simulation. Most companies use GPUs for training and inference. We use GPUs to actually create the data, which we think is pretty cool.”

AiFi has an ambitious business plan. Reduced labor costs alone represent significant savings for client retailers. But AiFi sees a multi-billion market hidden beneath the surface “through the customized shopping experience and collecting fine-point individual purchase data. Also, real-time inventory management. There’s a trillion dollar loss globally due to under- and overstocking. We believe our tech will solve that problem. So our technology is worth more than $100 million a year over and above reduced labor costs, this saves $3 million per year per store.”

Gu said AiFi plans to use a subscription fee model based on continual use of the system that will net about $500,000 per store per year for the company. He said that projects to $100 million in annual revenue if the company wins 25 percent of U.S. supermarkets. He added that AiFi is in pilot project discussions with 15 retailers.
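As a rough check on those figures, the short Python sketch below divides the quoted revenue target by the quoted per-store fee to see how many subscribing stores the projection implies. The store count is derived here and was not stated by AiFi; the constant and function names are illustrative only.

```python
# Back-of-the-envelope check using only the two figures quoted above.
ANNUAL_FEE_PER_STORE = 500_000        # dollars per store per year, as quoted
TARGET_ANNUAL_REVENUE = 100_000_000   # dollars per year, as quoted


def stores_needed(target_revenue: float, fee_per_store: float) -> float:
    """Number of subscribing stores required to reach the revenue target."""
    return target_revenue / fee_per_store


implied_stores = stores_needed(TARGET_ANNUAL_REVENUE, ANNUAL_FEE_PER_STORE)
print(f"Implied subscribing stores: {implied_stores:,.0f}")
```

The article does not say how many U.S. supermarkets AiFi counted, so the sketch makes no attempt to verify the 25 percent share.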

Enterprise Finalist: CrowdAI

When Hurricane Harvey hit Houston last year, emergency response organizations needed the most up-to-date aerial imagery showing the locations and extent of flooded roads, neighborhoods, reservoirs and other infrastructure. Defense and intelligence organizations need to surveil activities in hot spots around the world, such as North Korea, Iran and Syria. Investors need to track yesterday’s construction, drilling, transport and storage of oil and gas. Ride sharing companies need accurate, up-to-date maps, enabling them to determine the most efficient routes for their drivers.

This is the $8.2 billion geo-spatial market startup CrowdAI is pursuing, and the company is going up against some big competitors, such as Google and Apple. But CEO and Co-founder Devaki Raj said CrowdAI has stolen a march on other geo-spatial companies by partnering with satellite and aerial imagery producers of high frequency, low resolution images that CrowdAI converts into useful, daily infrastructure updates using the AI technique of segmentation.

“We’ve built our own convolutional neural nets for segmentation,” she said. “Unlike other deep learning techniques, segmentation gives fine-grain results on edges, orientation, etc. We’ve also built an in-house annotation tool, as well as a methodology to create training data faster by using human-removed approaches. Our deep learning model then takes this training data to answer questions, like ‘Does this pixel belong to a road?’”

Raj said customers give CrowdAI areas of interest for tracking. “Then we take satellite and aerial imagery from our partners. Our model’s raw output are probabilities per pixel, red indicating high probability it’s, for example, a road, and blue for lower probability.”
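To make the idea of per-pixel probabilities concrete, here is a minimal Python sketch of how such a map could be turned into a yes/no road mask by thresholding. It is not CrowdAI's code: the 0.5 threshold, the function names and the tiny 3x4 probability map are all assumptions made for illustration.

```python
from typing import List

ProbMap = List[List[float]]
Mask = List[List[int]]


def road_mask(probs: ProbMap, threshold: float = 0.5) -> Mask:
    """Mark each pixel 1 (road) if its probability meets the threshold, else 0."""
    return [[1 if p >= threshold else 0 for p in row] for row in probs]


def road_fraction(mask: Mask) -> float:
    """Share of pixels in the mask that were marked as road."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


# Hypothetical model output: high values would be drawn red, low values blue.
probs = [
    [0.05, 0.10, 0.92, 0.08],
    [0.07, 0.85, 0.95, 0.12],
    [0.90, 0.88, 0.15, 0.03],
]

mask = road_mask(probs)
for row in mask:
    print(row)
print(f"Road pixels: {road_fraction(mask):.0%}")
```

Comparing masks produced from imagery taken on different days is one simple way a change, such as a new road, could be flagged, though the article does not describe how CrowdAI does this.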

Raj said Nvidia GPUs are the “lifeblood” of CrowdAI, critical for rapid generation of results for clients.

“Recently we beta tested V100s on AWS, so we created bigger models faster, and we were able to find all the roads in Syria in just six hours on a couple of V100s,” she said.

Constantly updated information is paramount for many CrowdAI clients.

“Our differentiator is we’re able to harness high frequency/low resolution imagery that hasn’t been seen by any of our competitors out there,” said Raj. “The reason high frequency is important is because people want to know about daily changes. They don’t care about what’s happening on a quarterly basis, they need it on a daily basis, and by harnessing these new types of satellite imagery that essentially photograph the entire world on a daily basis, it puts us in a unique position.”

Enterprise Winner: AiFi

---------

Autonomous Systems Finalist: Ghost Robotics

Ghost Robotics wants to sell into the enterprise and DoD markets, and that, said CEO Jiren Parikh, requires “five nines” (99.999 percent) robustness. The company builds legged robots that look like large insects feeling their way over ground and floors, and that can climb chain link fences, ladders and other irregular surfaces.

“There are three reasons why legged robots are superior,” Parikh said. “They can operate on any type of unstructured terrain. And we mean anything in the great outdoors. Indoors we talk about stairs, stair stepladders, metal ladders you’d have on an oil rig. Second: they can manipulate the environment. Take a look at a legged robot, it has four manipulators on a chassis. You can teach it to do anything you want it to do. Third: they are superior on energy utilization on unstructured terrain. Why? A wheeled or tracked robot might have to go around something, a legged robot can actually go through it. If they see an object and it’s hard, they can go around or over it. But if it’s porous like a shrub it should be able to walk through it.”

He said Ghost has built a design model that’s size scalable. “Any size, from a foot up to four feet, short, skinny, tall, fat, long or short legs. It’s programmable, we can talk our legged robots and take the complexity out of it through a software development kit and give it to the development community to create derivative works and make it better.”

Ghost is initially targeting the security and inspection market for its unmanned ground vehicles, robots that patrol security-sensitive sites and are programmed to report suspect behaviors.

“For solutions for the entire market, industries and DoD, you need a product that is robust, and the only way to get to robustness is through AI,” Parikh said, “so we use AI across the entire operational capability of our legged robotics platform.”

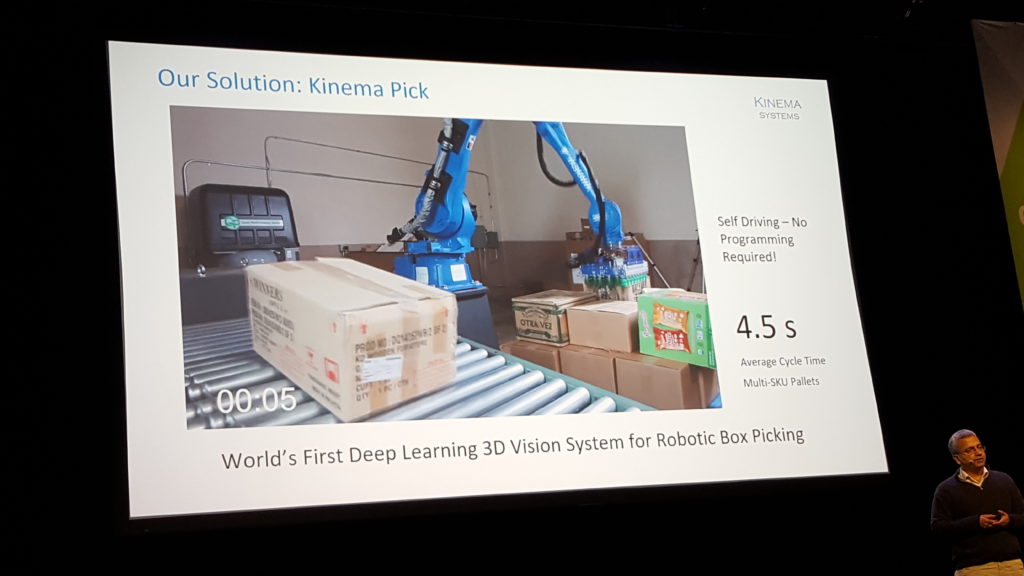

Autonomous Systems Finalist: Kinema Systems

Sometimes – too often, in fact – start-ups founder because their vistas are too broad. In anachronistic parlance, it’s called “boiling the ocean.” But Sachin Chitta, CEO of robotics maker Kinema Systems, has focused his technology on well-defined tasks that cost logistics, delivery and warehousing companies a lot of money, impose major physical wear and tear on workers – and bore those workers in the extreme.

We’re talking about “de-palletizing,” the removal of boxes from a pallet and putting them on a conveyer belt (reverse this process, and you’ve got “palletizing”). Surprisingly enough, this simple task has to date eluded the capabilities of robots. But Kinema says they’ve mastered the task.

“Imagine your worker in a warehouse or distribution center,” Chitta said. “A huge pallet of boxes comes in, the boxes can be 50 to 60 pounds and you’ve got people moving them off the pallet onto conveyers. Do this every day, eight hours a day, you’re going to break your back pretty soon. Tasks like these are incredibly difficult, they’re dull, dangerous, they’re still performed by people and there are no good automation solutions for tasks like these because robots used to be only designed to do repetitive tasks. So if everything looks the same every time, it would work. But that’s not what the real world is like.”

The difficulty Kinema has overcome, Chitta said, is that its robot can recognize the wide variety of box shapes and sizes, it can adapt to box varieties in real time, and it can pick and put down 500 or more boxes an hour.
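To put the 500-boxes-an-hour figure in perspective, the Python sketch below converts it into time per box and boxes per shift. The eight-hour shift comes from Chitta's description of a warehouse worker's day; assuming the robot runs that whole shift without pauses is a simplification made here, not a claim from Kinema.

```python
SECONDS_PER_HOUR = 3600


def seconds_per_box(boxes_per_hour: float) -> float:
    """Average time available to pick and place one box."""
    return SECONDS_PER_HOUR / boxes_per_hour


def boxes_per_shift(boxes_per_hour: float, shift_hours: float = 8.0) -> float:
    """Boxes moved in one shift, assuming uninterrupted operation."""
    return boxes_per_hour * shift_hours


rate = 500  # boxes per hour, the figure Chitta quoted
print(f"{seconds_per_box(rate):.1f} seconds per box")
print(f"{boxes_per_shift(rate):,.0f} boxes per eight-hour shift")
```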

“When you get these pallets in customer sites, we don’t know a priori what boxes are on the pallets, we have no information. So the system’s almost looking at it blind. And there’s often new boxes, new packaging is introduced every year, so there’s new boxes coming through that we don’t know. So traditional techniques just don’t work, and that’s why it’s difficult. All our customers throw new stuff at us all the time, and it just has to work every single time.”

GPUs in combination with data from 3D vision technology are used, he said, to recognize unfamiliar box designs without slowing the robot’s productivity.

“There are millions of boxes, and to find them and get the scale we need so the robot can keep moving fast, there’s only one way to do it, through deep learning, and we need GPUs to scale this way. We use GPUs in both training and the inference pipelines.”

Kinema also has worked at simplifying implementation of their robots so that non-specialists can get them up and running quickly.

“We can go faster (than 500 boxes an hour), and it’s self driving, there’s no programming required, you just set it up and tell it to go and it figures out all the motions by itself,” he said.

Autonomous Systems Winner: Kinema