Rehydrating the Data Center – A New Spin on Water Cooling

Much as racing is to the automobile industry, high performance computing (HPC) has long been the proving ground for technologies that later end up in mainstream computing. Indeed, today’s cloud systems and hyperscale implementations owe a considerable tip of the cap to HPC pioneers who drove the development of vanity-free hardware, load-balancing file systems and open source software. This leads one to ask whether the current trend toward water cooling in HPC has application to mainstream data centers.

A Bit of History

The use of water as an alternative cooling method to cold air has enjoyed a resurgence. Water, for those too young to remember, was once the primary cooling method and still is for large mainframes. But with the advent of the x86 architecture in the 1990s and 2000s, air cooling became the de facto standard.

In 2012, Leibniz-Rechenzentrum (LRZ) in Munich, Germany, a supercomputing center supporting a diverse group of researchers from around the world, gave the HPC vendor community a unique challenge: LRZ wanted to dramatically cut the electricity it consumed without sacrificing compute power. The IBM System x team delivered a server featuring Warm Water Direct Water Cooling, which piped unchilled water directly to the CPU, memory and other high-power components. Thus was born the era of warm water-cooled supercomputers.

Chillers had been a staple of water cooling in the old days. At LRZ, a controlled loop of unchilled water, up to 45°C, was used instead. In addition to the energy efficiency and data center-level cost savings, several other benefits emerged. Since the CPUs were kept much cooler by the ultra-efficient direct water cooling, there was less energy loss within the processor, saving as much as 5 percent compared with a comparable air-cooled processor. If desired, the Intel CPUs could run in “turbo mode” constantly, boosting performance by an additional 10 to 15 percent. Because the systems had no fans – except small ones on the power supplies – operations were nearly silent. And the hot water produced by the data center was piped into the building as a heat source. Overall energy savings, according to LRZ, were nearly 40 percent.

And Today…

Several years have passed, and most, if not all, of the major vendors of x86 systems have jumped into water cooling in some manner. These offerings run the gamut from water-cooled rear-door heat exchangers, which act like a car’s radiator and absorb heat expelled by air-cooled systems, to systems literally submerged in a tank full of special dielectrically compliant coolant – something akin to a massive chicken fryer with servers acting as the heating elements.

Direct water-cooled systems have evolved too. Advances in thermals and materials now allow intake water up to 50°C. This makes water cooling a viable option almost anywhere in the world without using chillers. The number of components cooled by water has also expanded: in addition to the CPU and memory, the IO and voltage regulation devices are now water cooled, driving the percentage of heat transferred from the system to water to more than 90 percent.

Unfortunately, not everything in the data center can be water cooled, so LRZ and Lenovo are in the process of expanding alternative cooling by converting the hot water “waste” into cold water that can be reused to cool the rest of the data center. This process utilizes “adsorption chillers,” which take the hot water from 100 compute racks and pass it over sheets of a special silica gel that evaporates the water, cooling it. From there, the evaporated water is condensed back into a liquid, which is then piped either back into the compute racks or into a rear-door heat exchanger for racks of storage and networking gear, which aren’t water cooled. This approach to data center design is possible because the water delivered to the chillers is hot enough to make the process run efficiently. The tight connection and interdependence between the server gear and the data center infrastructure has strong potential.

Does it Play in Peoria?

Sure, giant supercomputer clusters with thousands of nodes, petabytes of storage and miles of interconnect cables can probably justify the infrastructure costs of moving to water cooling. Will the average data center, running email, file-print, CRM and other essential business applications, need to hire Acme Plumbers any time soon? Not this week, but there are factors today that are going to make customers consider alternative cooling methods in the future, and probably sooner than most expect.

It’s Not the Humidity, It’s the Heat

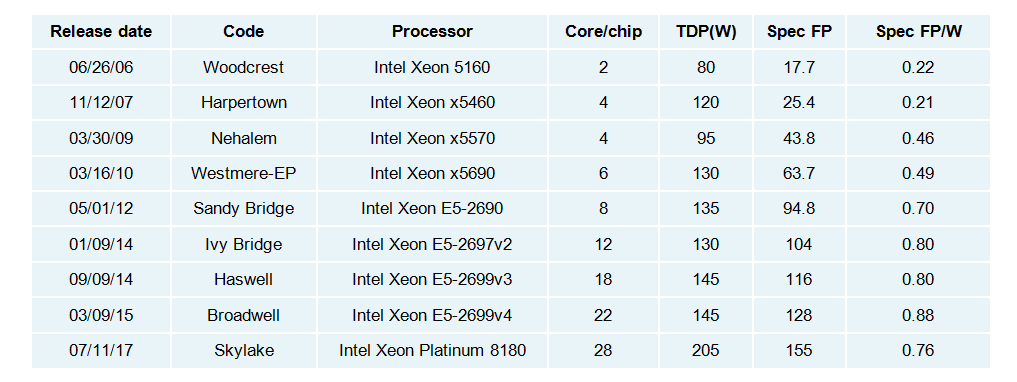

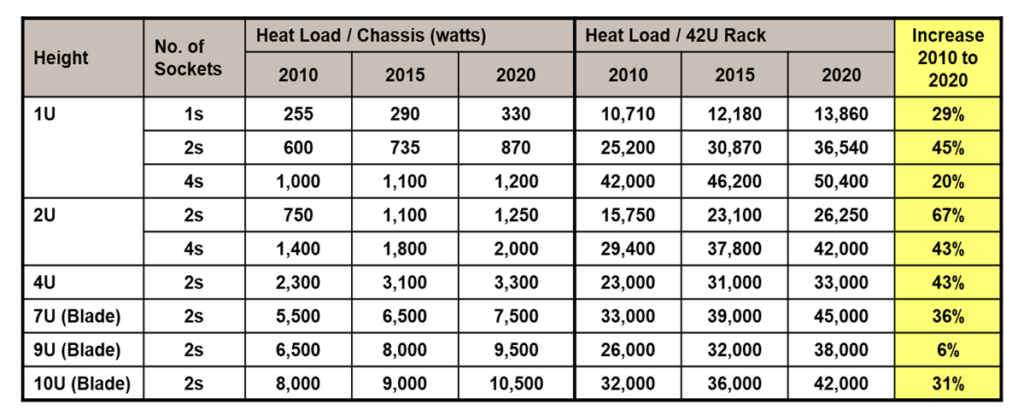

The driving force behind processor innovation for the last 50 years has been Moore’s Law, which states that the number of transistors in an integrated circuit will double approximately every two years. Moore’s company, Intel, condensed to double CPU performance every 18 months while costs come down 50 percent. After half a century, however, delivering on that prediction has become increasingly more difficult. To stay on the Moore’s Law curve, Intel has to add more processing cores to the CPU, which draws more power, and in turn, produces more heat. Look at how the power draw in Intel processors has grown over the last dozen years: To deal with that heat, processors in an air-cooled environment will need larger (taller) heat sinks, which will require systems with taller or bigger chassis. The American Society of Heating, Refrigerating and Air-Conditioning Engineers has estimated the increased heat load in a standard rack:

To deal with that heat, processors in an air-cooled environment will need larger (taller) heat sinks, which will require systems with taller or bigger chassis. The American Society of Heating, Refrigerating and Air-Conditioning Engineers has estimated the increased heat load in a standard rack: Could those 1U “pizza box” servers that have been so popular over the last 20 years end up being an endangered species? Probably not, but they may not be deployed in the same way they have been over the last two decades. Simply put, customers will face a difficult trade-off: system density (the number of servers their IT people can cram into a rack) vs. CPU capability (fewer cores). Customers wanting to run higher core CPUs will have to give up space in the rack, meaning more racks in the data center, meaning higher OPEX in real estate, electric and air conditioning costs.

Could those 1U “pizza box” servers that have been so popular over the last 20 years end up being an endangered species? Probably not, but they may not be deployed in the same way they have been over the last two decades. Simply put, customers will face a difficult trade-off: system density (the number of servers their IT people can cram into a rack) vs. CPU capability (fewer cores). Customers wanting to run higher core CPUs will have to give up space in the rack, meaning more racks in the data center, meaning higher OPEX in real estate, electric and air conditioning costs.

Customers will certainly have to consider alternative cooling to alleviate these issues. Direct water-cooled systems do not need SUV-sized heat sinks. This would allow them to maintain their profile without compromising on performance.
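To make the density trade-off concrete, here is a rough back-of-the-envelope sketch. Every number in it is a made-up illustration rather than a figure from LRZ, Lenovo or ASHRAE: it assumes a 42U rack filled entirely with servers, and it assumes that an air-cooled high-core-count server needs a 2U chassis for its taller heat sinks while a direct water-cooled one stays at 1U.

```python
import math


def racks_needed(servers, chassis_height_u, usable_rack_u=42):
    """Number of racks required to hold `servers` of a given chassis height.

    usable_rack_u=42 is an assumption: it ignores space taken by switches,
    power distribution and cable management.
    """
    servers_per_rack = usable_rack_u // chassis_height_u
    return math.ceil(servers / servers_per_rack)


# Hypothetical fleet of 420 servers running the same high-core-count CPU.
air_cooled_racks = racks_needed(servers=420, chassis_height_u=2)    # taller heat sinks
water_cooled_racks = racks_needed(servers=420, chassis_height_u=1)  # 1U profile kept

print(f"Air cooled (2U):   {air_cooled_racks} racks")
print(f"Water cooled (1U): {water_cooled_racks} racks")
```

Under these assumptions the air-cooled deployment needs twice as many racks, which is where the extra real estate, electricity and air conditioning costs come from.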

Going Green

A second factor at work depends on your geography. “Green” data center initiatives have been in place in Europe for a decade. They are what spurred LRZ and others like it to seek alternatives to air cooling. There is even a “Green 500” list of the most energy-efficient data centers on the TOP500.org site. As more of these installations are completed and promoted, other customers may see 50 percent savings on electricity and a 15 percent performance improvement as substantial enough justification to take the plunge into water cooling.

Roughly 55 percent of the world’s electricity is produced by burning fossil fuels. Data centers consume almost 3 percent of the world’s electricity and can no longer “fly under the radar” when it comes to energy consumption. Governments elsewhere may see the energy savings achieved in these countries and put regulations in place to reduce data center power consumption.

“The Electric Bill Is Due!”

“Going green” in the data center is not simply an altruistic endeavor. It is, in many places, a matter of necessity. Electricity prices in some parts of the world can exceed $0.20 per kilowatt-hour; in other areas they can be double that amount. The largest hurdles to alternative cooling are the up-front costs for plumbing infrastructure and the small premium (in most cases less than 10 percent) for water-cooled systems over comparable air-cooled ones.

Finance departments always ask, “How long until a system like this will pay for itself?” and in some cases, it may be one year. Of course, TCO and ROI depend on the solution itself and the installation costs, but most OEMs have TCO calculators to assist customers in determining payback on a direct water-cooled system.
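The payback question can be roughed out in a few lines. The sketch below is not any OEM’s TCO calculator; it is a simple payback estimate that ignores discounting, maintenance and performance gains. The electricity price and savings fraction echo figures mentioned in this article, while the load and the up-front cost are purely hypothetical inputs chosen for illustration.

```python
HOURS_PER_YEAR = 24 * 365


def payback_years(load_kw, price_per_kwh, savings_fraction, upfront_cost):
    """Simple payback period in years for a water-cooling investment.

    load_kw          -- average electrical load of the installation (assumed)
    price_per_kwh    -- electricity price in dollars per kilowatt-hour
    savings_fraction -- share of the electricity bill saved (0.40 = 40 percent)
    upfront_cost     -- plumbing plus system price premium in dollars (assumed)
    """
    annual_bill = load_kw * HOURS_PER_YEAR * price_per_kwh
    annual_savings = annual_bill * savings_fraction
    return upfront_cost / annual_savings


# Hypothetical site: 500 kW load, $0.20/kWh, 40 percent savings,
# $350,000 in extra up-front cost.
years = payback_years(load_kw=500, price_per_kwh=0.20,
                      savings_fraction=0.40, upfront_cost=350_000)
print(f"Estimated payback: {years:.2f} years")
```

With these made-up inputs the investment pays for itself in roughly a year; halving the electricity price or the savings fraction doubles the payback period, which is why the answer varies so much by geography.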

Summary

There is no doubt that water-cooled systems have an established place in HPC. We expect that to expand to regular commercial data centers in the future. Obviously, most data centers won’t convert to water cooling in the Skylake era. However, as it becomes more difficult to adhere to Moore’s Law, alternative cooling will gain traction. Many customers will face significant trade-offs, and they will look for choices that maximize performance and data center density. Water cooling offers a way to do that while reducing operating costs and burning a little less coal too.

Scott Tease is executive director, HPC and AI, Lenovo Data Center Group.