Intel Reveals Next Gen Xeon Processors, Optane Persistent Memory

For all its power, success, profits and influence, life at Intel is a permanent struggle. Chide if you will Andy Grove’s faith in paranoia as an organizing corporate principle, but Intel is right to believe everyone’s out to get them. Everyone, in fact, is. Driven by fear, the company has accomplished the rare feat of staying on top – and staying and staying – while competing in the most dynamic and innovation-driven of global business sectors. And they’ve done it without the inspirational adrenaline that fuels technology start-ups on a mission to build the next insanely great new thing. Ask IBM what a neat trick that is as Big Blue tries to hack a trail back to something like its old prominence. Or even ask Microsoft, which occasionally misreads the market and has one or two down years every decade or so. But Intel keeps going, maintaining a 95+ percent share of the data center market.

Having said that, Intel’s permanent state of struggle isn’t without its challenges and pitfalls. Right now, its core chip business is under multi-pronged assault – from nemesis AMD, again, which boasts price and performance advantages with its EPYC data center chips and which has shaved data center market share from Intel (with a resulting AMD stock surge as Intel’s has dipped), from Nvidia and its AI-dominant GPUs, from IBM, with its CPU-GPU integrated Power processors, from Arm, from FPGAs – a slew of new processors for emerging HPDA, IoT and AI workloads. It's what industry analyst Addison Snell calls “technology disaggregation.” No longer are Intel CPUs the only chips in town.

In fact, in several ways the past six to 12 months could be seen as an unsettled time for Intel. Its CEO resigned under pressure (for personal, not business, reasons), Intel chips have been found to be susceptible to the Spectre and Meltdown vulnerabilities, the Xeon Phi accelerator processor line was recently discontinued, and Intel failed to deliver its pre-exascale supercomputer to Argonne National Laboratory even as IBM, in league with Nvidia, installed the world’s No. 1 supercomputer earlier this year at Oak Ridge National Laboratory. Taken together, these developments may be leading indicators of the processor landscape evolving away from Intel.

Maybe.

Intel responded yesterday at the company’s Data Centric Innovation Summit with a series of processor, memory and storage announcements that, the company argues, position it to tap into emerging data center and AI revenue streams with TAMs (total available markets) totaling $200 billion by 2022, according to Intel’s Navin Shenoy, EVP/GM, Data Center Group.

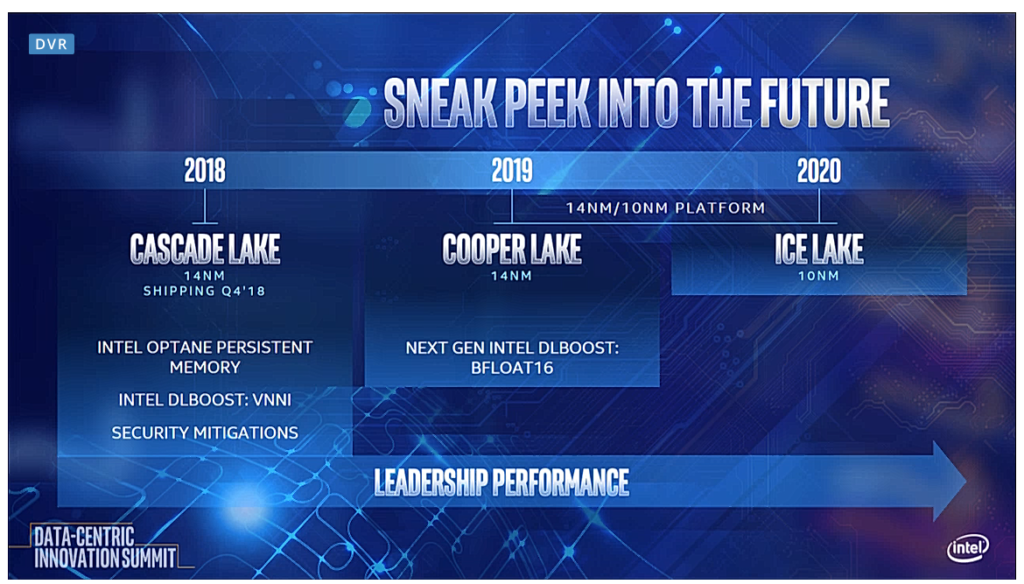

On the processor front, Intel disclosed the next generation roadmap for the Intel Xeon platform:

• Cascade Lake is a future Intel Xeon Scalable processor based on 14nm technology with a memory controller for Intel’s new Intel Optane DC persistent memory (more on this below).

Shenoy said Cascade Lake also will provide enhanced security features to address the Spectre and Meltdown vulnerabilities. And it will include a new AI extension called Intel Deep Learning Boost, which extends Intel AVX-512 with a new vector neural network instruction (VNNI) and handles INT8 convolutions with fewer instructions.

This embedded AI accelerator will, Shenoy said, speed deep learning inference workloads by 11 times for image recognition compared with current-generation Intel Xeon Scalable processors, launched a year ago.

Cascade Lake is scheduled to begin shipping late this year.
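To make the “fewer instructions” point concrete, here is a minimal Python sketch of the arithmetic such an instruction is generally described as fusing: four unsigned 8-bit values multiplied by four signed 8-bit values, summed, and added to a 32-bit accumulator in one step. The function names are hypothetical, and the wrap-around (non-saturating) behavior is an assumption for illustration, not a statement of how Cascade Lake implements it.

```python
def dot4_u8s8_accumulate(acc, a_u8, b_s8):
    """Model one 32-bit lane: acc += sum of four (unsigned 8-bit * signed 8-bit) products."""
    assert len(a_u8) == len(b_s8) == 4
    assert all(0 <= a <= 255 for a in a_u8)      # unsigned 8-bit inputs
    assert all(-128 <= b <= 127 for b in b_s8)   # signed 8-bit inputs
    total = acc + sum(a * b for a, b in zip(a_u8, b_s8))
    # Assumption: wrap to a signed 32-bit value rather than saturate.
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total >= (1 << 31) else total


def int8_dot(activations, weights):
    """Dot product of two INT8 vectors, consumed four elements per fused step."""
    assert len(activations) == len(weights)
    assert len(activations) % 4 == 0
    acc = 0
    for i in range(0, len(activations), 4):
        acc = dot4_u8s8_accumulate(acc, activations[i:i + 4], weights[i:i + 4])
    return acc


result = int8_dot([1, 2, 3, 4, 5, 6, 7, 8], [1, -1, 1, -1, 2, 2, 2, 2])
```

Without a fused operation, each group of four would need separate multiply, widen and add steps, which is where the instruction savings for INT8 convolutions would come from.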

• Cooper Lake is next in the Intel Xeon Scalable processor line, based on 14nm technology, and will, according to Shenoy, offer a general set of performance improvements, including support for “bfloat16,” a numeric format used for AI training workloads. The chip will ship in late 2019.

• Ice Lake is a future Intel Xeon Scalable processor based on 10nm technology that shares a common platform with Cooper Lake and is planned for 2020 shipment.


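For readers unfamiliar with the 16-bit training format mentioned for Cooper Lake, the sketch below shows the commonly described bfloat16 layout: the top 16 bits of an IEEE 754 single-precision value (1 sign bit, 8 exponent bits, 7 mantissa bits). The helper names are hypothetical, and simple truncation is assumed here; real hardware may round differently.

```python
import struct


def float32_to_bfloat16_bits(x):
    """Return the 16-bit pattern obtained by keeping the top half of a float32."""
    bits32 = struct.unpack("<I", struct.pack("<f", x))[0]
    return bits32 >> 16  # assumption: truncate, no rounding


def bfloat16_bits_to_float(bits16):
    """Expand a 16-bit pattern back to a float by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", bits16 << 16))[0]


def round_trip(x):
    """Show what a value becomes after being stored in the 16-bit format."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))
```

Because the exponent field is as wide as float32's, the format keeps the same dynamic range and gives up precision instead: under these assumptions `round_trip(3.14159)` comes back as 3.140625.
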
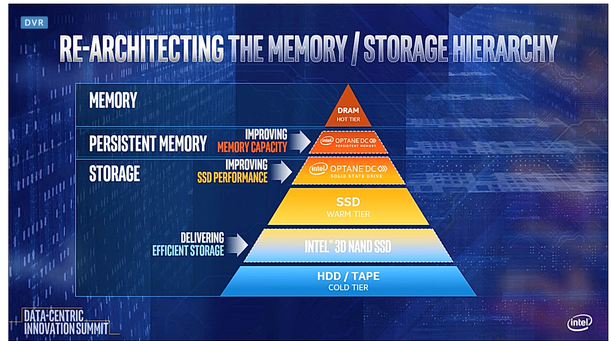

Shenoy spoke extensively about Intel’s recently announced Intel Optane DC persistent memory, which he said is a new class of memory and storage that enables a large persistent memory tier between DRAM and SSDs and can achieve up to eight times the performance of configurations with DRAM only.

The first production units of Optane persistent memory have shipped to Google and broad availability is planned for 2019.

In a typical data center, there are hot, warm and cold layers of data access. “But in a world of ever increasing amounts of data, this model is insufficient,” Shenoy said. “That traditional DRAM layer is costly, has limited capacity, and while the software community has been creative in engineering around memory, it’s not sufficient for the types of workload footprints our customers are demanding.”

At the same time, he said, the warm and cold layers have data transfer latency limitations.

He said Intel Optane DC SSDs offer 40x lower latency than typical NAND SSDs, a step forward in breaking the storage bottleneck.

“Optane Persistent Memory delivers the ability to keep the data intact when you lose power,” said Shenoy. “What that does is profound: in high availability systems you now have the ability to go from minutes to seconds of re-boot times. You go from three 9’s to five 9’s of availability. That’s huge for a mission critical system in the data center.”
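The jump from three 9’s to five 9’s is easier to appreciate as downtime per year. The short calculation below is a back-of-the-envelope sketch, assuming a 365-day year and treating “N nines” as an availability of 1 − 10^−N; the function name is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(nines):
    """Allowed downtime per year for an availability of N nines (e.g. 3 -> 99.9%)."""
    unavailability = 10 ** (-nines)
    return MINUTES_PER_YEAR * unavailability


three_nines = downtime_minutes_per_year(3)  # about 525.6 minutes, roughly 8.8 hours
five_nines = downtime_minutes_per_year(5)   # about 5.3 minutes
```

On those assumptions, five 9’s leaves only about five minutes of downtime a year, which is why cutting reboot times from minutes to seconds matters for reaching it.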

Shenoy also disclosed that Intel generated $1 billion in AI-related revenues in 2017.

Industry analyst Patrick Moorhead, president and principal analyst, Moor Insights & Strategy, told EnterpriseTech that Intel’s product strategy is opening the company to new opportunities.

“I am struck by how much growth potential Intel has in the data center,” he said. “Many know Intel for server compute where it has 98 percent market share, but when you add up storage and networking, the company only has around 30 percent market share.”

On the processor front, Moorhead said that “while everyone wants Intel to deliver Ice Lake sooner, it’s not going to be here until 2020 without granularity on 1H or 2H. I do like that Intel is offering a 14nm gap-filler in Cooper Lake as it adds two new ML acceleration instructions. Data centers are anxiously awaiting Cascade Lake as it adds support for Optane server-class memory and adds improved machine learning inference capabilities with VNNI and side-channel security improvements.”

He also said Intel’s willingness to reveal its AI revenue indicates the company “must have some confidence in its roadmap as it will now be asked every quarter what the AI revenue number is.”