Ignore AI Fear Factor at Your Peril: A Futurist’s Call for ‘Digital Ethics’

“I believe that man will not merely endure: he will prevail. He is immortal, not because he alone among creatures has an inexhaustible voice, but because he has a soul, a spirit capable of compassion and sacrifice and endurance.”

- William Faulkner, Nobel Prize acceptance speech, 1949

This time, AI isn’t fooling around. This time, AI is in earnest, and so are its related technologies: robotics, 3-D printing, genomics, machine/deep learning, man-machine interface, IoT, HPC at the edge, quantum computing – the gamut of emerging, incredibly powerful technologies. AI has gotten off to false starts in decades past, but not now: the building blocks are in place for a convergence of data-driven power, evolving toward an AI supernova that will bring profound changes to human existence in the decades to come.

With this expectation has come serious thinking – and worrying – about AI’s potential negative impacts. Naturally, AI investors and developers are going full speed ahead while airily dismissing AI fear as generally baseless. Rarely from within the industry do we hear voices – Elon Musk’s is an exception – calling for controls on AI.

But outside the industry, concerns increasingly have been raised about AI's power to manipulate, to addict, to undermine our institutions – and to abuse our personal data. Among some thinkers, there is the sense that AI is (as has been said about war and generals) too important to be left to the technologists. And when you attend a conference session such as one delivered by futurist Gerd Leonhard last week in Las Vegas at NetApp Insight, you realize the industry should take heed: Ignore calls for restrictions on AI at your peril.

Musk, for his part, issued a broad, undefined call for regulation of AI last year that struck many as so vague as to be pointless. You can’t regulate – you can’t stop – technology development, right? Innovation never stops.

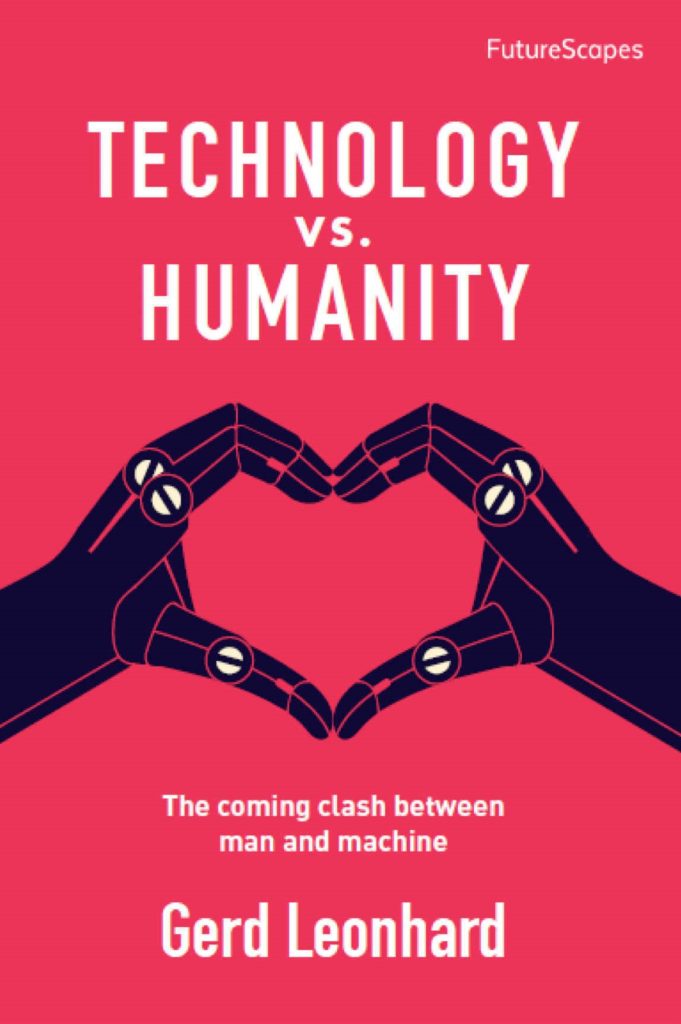

But Leonhard – a resident of Switzerland, widely traveled public speaker, CEO of Zurich-based consulting company The Futures Agency and author of Technology vs. Humanity: The Coming Clash between Man and Machine – shifts the discussion away from a broad notion of AI regulation and toward “digital ethics,” placing controls, either by government fiat or voluntary corporate action, on the data that is AI’s lifeblood.

Data regulation, of course, has already begun in Europe with the adoption of GDPR, while in the U.S., Mark Zuckerberg has been on the data privacy hot seat since the 2016 presidential election. In fact, Leonhard has been a harsh critic of Facebook (he closed his account earlier this year), calling it a “perversion on what social media was meant to be” that “has used its position, in essence, to conduct experiments with artificial intelligence.”

At his NetApp Insight session, Leonhard also delved into themes related to “the singularity,” the idea that artificial superintelligence (ASI) will trigger runaway technological growth that will dwarf human capabilities, leading possibly to what he refers to as the “sofa-larity,” masses of idle people consumed by social media and virtual reality.

Call it the “AI Fear Factor,” of which GDPR is a symptom, along with the rise of data ethics officers at technology companies. Looker, for example, a Santa Cruz, CA-based marketer of a business intelligence data exploration platform, recently hired a new chief privacy and data ethics officer.

“There will always be bad actors,” said Frank Bien, Looker’s CEO, “but we believe that the ethical use of data is not just a social imperative but a business imperative – and that companies who choose the right side will eventually win.”

“There’s a social reckoning coming – we’re already seeing it,” Bien said. “Not just the Cambridge Analytica scandal but Unilever, the world’s second-largest advertiser, is threatening to pull out of Facebook and Google if they don’t make changes related to protecting children from toxic content, being more transparent about data collection and aiding in the divisiveness of the public.”

In fact, Leonhard cited Gartner’s recent pronouncement that a leading tech topic for 2019 will be “digital ethics,” a focus on compliance, values and respect for individuals' data in response to public concerns about privacy. Leonhard himself defines digital ethics as “the difference between doing whatever technological progress will enable us to do, and putting human happiness and societal flourishing first at all times.”

Though regarded as a futurist, Leonhard said he does not predict the future: “I observe it.” And one of his core observations is that humanity is on the cusp of technology innovation that will change human life more drastically in the next 20 years than in the previous 300.

“We’ve talked about some of these (advanced technologies) for 50 years,” he said, “like artificial intelligence, but it didn’t happen. We talked about 3D printing for 20 years, and not much happened. But now, we’re seeing takeoff, ‘escape velocity,’ as they say. In the next 10 years we’re going to see things that are straight out of science fiction. In 20 years, we’ll see the singularity, the convergence of man and machine. How far do we want to go with this? How far do we want to go into a world where that is becoming feasible?”

Leonhard quoted AI thought leader and investor Andrew Ng, who said: “Data is the new oil, AI is the new electricity, IoT is the new nervous system,” a combination that consulting firm McKinsey said will generate a total addressable market (TAM) of $62 billion.

Data-driven learning machines will result in technological meta-intelligence: systems with exponential IQs capable of creating what Leonhard calls “Hell-ven” (hell or heaven, or some of both).

Take, for example, healthcare. In the near future, we will be able to scan our bodies with wearable devices and upload the data to the cloud, where algorithms will analyze it and conduct remote diagnostics.

“Some people are saying we can save about 85 percent on doctor visits by having diagnostics in the cloud,” Leonhard said. “That means for the first time in 50 years a decline of healthcare costs. That would be quite an accomplishment.”

To be sure, Leonhard sees growth in data-driven power as “90 percent positive.” But the potential downside, as indicated by Facebook’s problems, also is very real. For him, taking action now, before AI takes on more powerful forms, is the key to minimizing the potential downside.

“Right now, this is only somewhat of an issue,” he said, “because most of the technology isn’t working that well yet. We don’t have perfect AI, we don’t have perfect IoT, we don’t have 6G network, we don’t have quantum computers. Yet.”

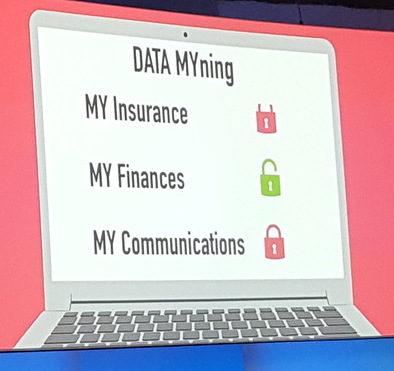

Just as Europe took an aggressive global stance on data privacy with GDPR, so too could Europe take more action to limit AI. Leonhard said he has discussed with the European Commission (the EU body that proposes legislation and implements EU decisions) the establishment of an ethics council that would review the purpose, potential good and permissibility of AI as it comes to market. The idea, he said, is to build on GDPR, strengthening privacy under a rubric he called “data MYning” and putting social benefit in the use of AI ahead of business opportunity.

“In this world, here’s a key question…: who will be in mission control for humanity?” he said. “Who decides? How do we control, how do we figure out what’s good?”

He cited the 2016 election and Facebook as the kind of problem that could come under the purview of a technology ethics council.

“The best example is Facebook, gunning at democracy,” he said. “You think Facebook and Mark were doing this on purpose? Were they hacked? Are they criminals? No. But what happened is unethical. And this is Facebook’s biggest problem. In fact, what happened is exactly how Facebook is designed, that’s how it works. You put up the money, you run your ads. That’s what scares me the most.”

For all his concerns, the thrust of Leonhard’s remarks was optimistic, centering, as William Faulkner did in 1949, on inimitable human qualities – imagination, creativity, emotion, intuition, aesthetic appreciation – that will likely remain permanently beyond the ken of computers.

“Technology and data have no ethics,” said Leonhard. “This is a fact. Technology doesn’t care about feelings or emotions or mysteries, because it’s code. We are conscious beings, we care about relationships, engagement with people, with emotions – all the things machines hate. Machines don’t like these things because they’re not binary.”

“We’re moving into a hell-ven situation, hell or heaven,” he said, “and it’s up to us to decide. Technology itself doesn’t have any interest in being heaven or hell. It’s just code. It has no interest in taking over. We have to think about this.

“What it comes down to is this: we have to find a way to use the power of technology in a good way. In a human way… In 10 years, technology will be unlimitedly powerful – infinite. Anything. 5G, 6G, 100G. Nine billion people will be on the Internet in 2030. We ain’t seen nothing yet. We’re just at the beginning of this whole debate. We have to think about this, how we want to collaborate with technology. Who decides? With great power comes great responsibility.”